Are we becoming cyborgs in 2024?

Many thanks to Elias, Palla, Mahima, Hannes, and Rafal for review. Separate thanks to João Montenegro for an awesome discussion.

Worldwide, the average person’s daily screen time is 6 hours and 58 minutes. This is approximately 41% of waking time. Does it mean that a modern human is 41% digital?

Let’s think.

- Personal relations are becoming digital (17% of marriages and 20% of relationships begin online) as well as business and consumer relations (81% of consumers prefer communicating in a messenger).

- Society is becoming “cashless”: only 9% of Americans pay with cash, and in some countries cash accounts for only 1% (e.g. Norway, Hong Kong, Sweden).

- 71% of smartphone owners sleep with or next to their mobile phones.

- The Google search engine acts almost like humanity's hive mind.

- “Information and communications technologies are more widespread than electricity.” – Shoshana Zuboff.

One thing can be said for sure: our everyday life is a blend of the physical and digital worlds and this trend won’t reverse. Furthermore, the share of digital reality is going to grow.

Humanity has rich experience (approx. 300,000 years) living in physical reality.

Within this time we’ve learnt some useful skills. For example:

- How to build reliable constructions such as houses, bridges, roller coasters, and military offices.

- How to treat our bodies in such a way that they serve us as long as possible.

- How to build meaningful relations that last for years and make us feel good, loved, and needed.

With digital reality, our experience is approximately 7,300x shorter: about 41 years versus 300,000 (assuming 1983 as the internet’s year of birth). So, it seems we are still at the beginning of the learning curve of living in digital reality.

What part of ourselves is digital? Let’s think.

- Most of our documents, files, and data (e.g. financial and health) are digital and stored on smartphones or laptops.

- Most of our communications and social interactions are digital: through emails, social media, video calls, etc.

- Most of our work is done digitally: collaborative work using Google services and GitHub, web services (e.g. Figma), storing and managing files in the cloud (e.g. Dropbox).

- Most of our memorable moments and things that make us feel good are stored digitally: pictures from birthdays and weddings, screenshotted messages from those we love and hate, playlists we’ve been collecting for years, movie ratings, etc.

- Authentications and passwords for all services and accounts we use.

What exactly does “digital” mean in all these cases? In most cases, it means stored in cloud services or on a company server. That is to say, 99.9% of our digital “us” is outside of our control.

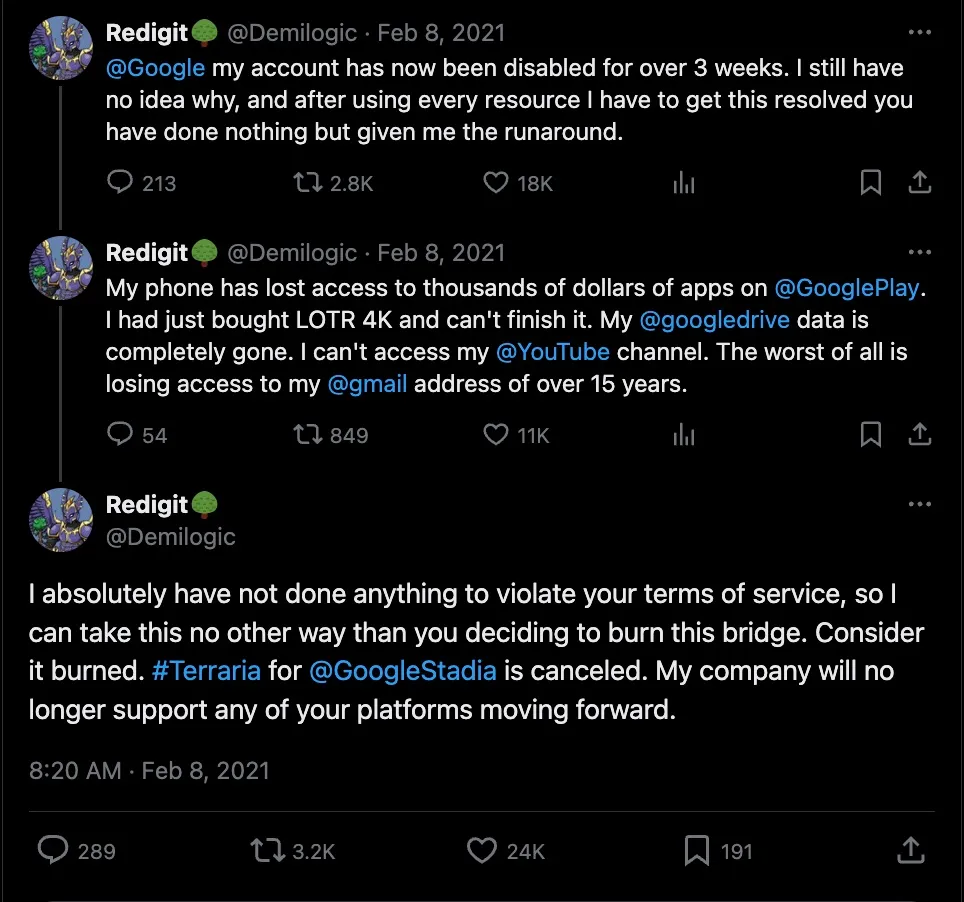

Google won’t close tomorrow, will it?

Humans like to be optimistic. Google won’t close tomorrow, right? It just doesn’t make any sense. Humanity pays it so much money. Humanity gives it all its data.

Okay, thinking rationally, let’s assume that Google’s best option is to exist forever. But what about our Google accounts? What about the digital “us”? What about data authenticity? What about the part of our life that happens digitally every day? Will it exist tomorrow?

Let’s for a second move from that optimistic perspective to a more realistic one:

- Whether we have access to our money or not depends on whether the bank allows us or not.

- Whether we can access our iPhone or not depends on whether Apple allows us or not.

- Whether we can access our data backup on iCloud depends on whether Apple allows us or not.

- Whether we can leave a comment on a forum or social media depends on whether we meet the criteria of being a “good user” or not.

So, the first “layer” is: in order to have access to our digital life, we need to meet the criteria of being a “good customer” every day. Every single day. Doesn’t sound nice, but not a big deal, right?

At the end of the day, we are good humans, we have nothing to hide, we trust our governments and these pretty multibillion corporations. They are nice guys. Is there anything else to worry about?

Hmm, let us think what happens if…

- Artificial general intelligence (AGI) kidnaps the internet and floods it with bots?

- Company servers are destroyed because of war or natural disasters?

- Governmental databases break down, destroying all information about citizens and pushing the world into anarchy?

- Autopilot transports and machinery get programmed for self-destruction on Wednesday 9:00 Eastern Time?

- The banking system gets lost or corrupted, so we lose all our money because it is just a digital entry on a centralized server?

We still can stick to an optimistic mindset and say: okay, the probability of this happening is very low. Let’s wait until one such incident happens (e.g. nuclear war) and then we should start worrying. Today we still can access banking apps, scroll Instagram, and order a poke bowl. Why do we need to worry now?

There are several reasons to think about it today:

- We can’t think about saving the world after the world has been destroyed because the world won’t exist anymore.

- Saving the world might take time. A couple of seconds might not be enough. And if the world is going to be destroyed, no one will kindly tell us 20 years in advance.

- We still can eat poke while trying to save the world. There is no contradiction therein.

Now let’s be more precise. While appealing to “saving the world”, by “the world”, we mean “the digital world”, a part of our life and identity that exists purely online or in a hybrid state of offline and online (e.g. medical devices implanted into human bodies having software components). By “saving”, we mean making it robust. That is to say neither governments, corporations, AGI, nor bots can kidnap, destroy, control, or limit a digital part of us.

We must make the internet more robust

Comparing our physical reality to digital reality, if in the former we developed robust materials such as carbon, metal, glass, and ultra-high molecular weight polyethylene fiber, in the latter we are still building twig huts.

The good news is that today we are already equipped well enough to start making the internet more robust. Three components that will allow us to resist factors such as AGI or dictatorships are blockchains, privacy incorporated into blockchains, and cryptography. Why these three?

To have control of our digital “us”, we need four properties:

- Permissionlessness

- Immutability

- Verifiability

- Privacy

Let’s abstract from the wording “the internet”. Instead, let’s use the word “cyberspace”, meaning “everything digital” including the internet, computer networks, and whatnot.

By permissionlessness, we mean that cyberspace is equal for any human on Earth. Create an account, deploy code, own a digital object, talk to another human in cyberspace – whatever is possible there for one human is possible for everyone.

By immutability, we mean that humans have control over their life in cyberspace and if they take an action in cyberspace (e.g. open an account, issue an ID, or deploy code), no one can undo it. One should note that immutability might work as a double-edged sword, since being immutable means nothing can ever be forgotten. Thus, while building, we should be very conscious of exactly what we are making immutable and how it might manifest as a negative externality one day.

By verifiability, we mean something like an objective source of truth. If human 54835934 tells human 82849934 that “this statement” is true, human 82849934 has a tool to objectively verify its truthfulness.

By privacy, we mean control over what is disclosed, when, and to whom. How sincere are we claiming “we have nothing to hide”? Even if we don’t mind “someone” having access to all our correspondence, medical data, pictures and videos (including those hot nudes), food delivery, e-commerce, and porn browser history… What if we take it one step further, right to brain-computer interfaces (BCI)? If someone (whether governments, corporations, AGI or another party with exceptionally good intentions) now has access to all our thoughts and body chemistry, do we still have nothing to hide?

These four properties allow us to ensure that our digital “us” will last at least as long as our physical “us” (or maybe even longer, but that is a topic for another article).

Blockchains and cryptography

No technology in isolation is a magic pill. However, combining the right technologies and applying them in the right cases allow us to come closer to inventing a magic pill.

Let’s first clarify what technologies we have in our “survival kit” and what properties of theirs we are interested in.

Blockchain provides permissionlessness and immutability

Blockchain is a shared, immutable ledger that facilitates the process of recording transactions and tracking assets in a network.

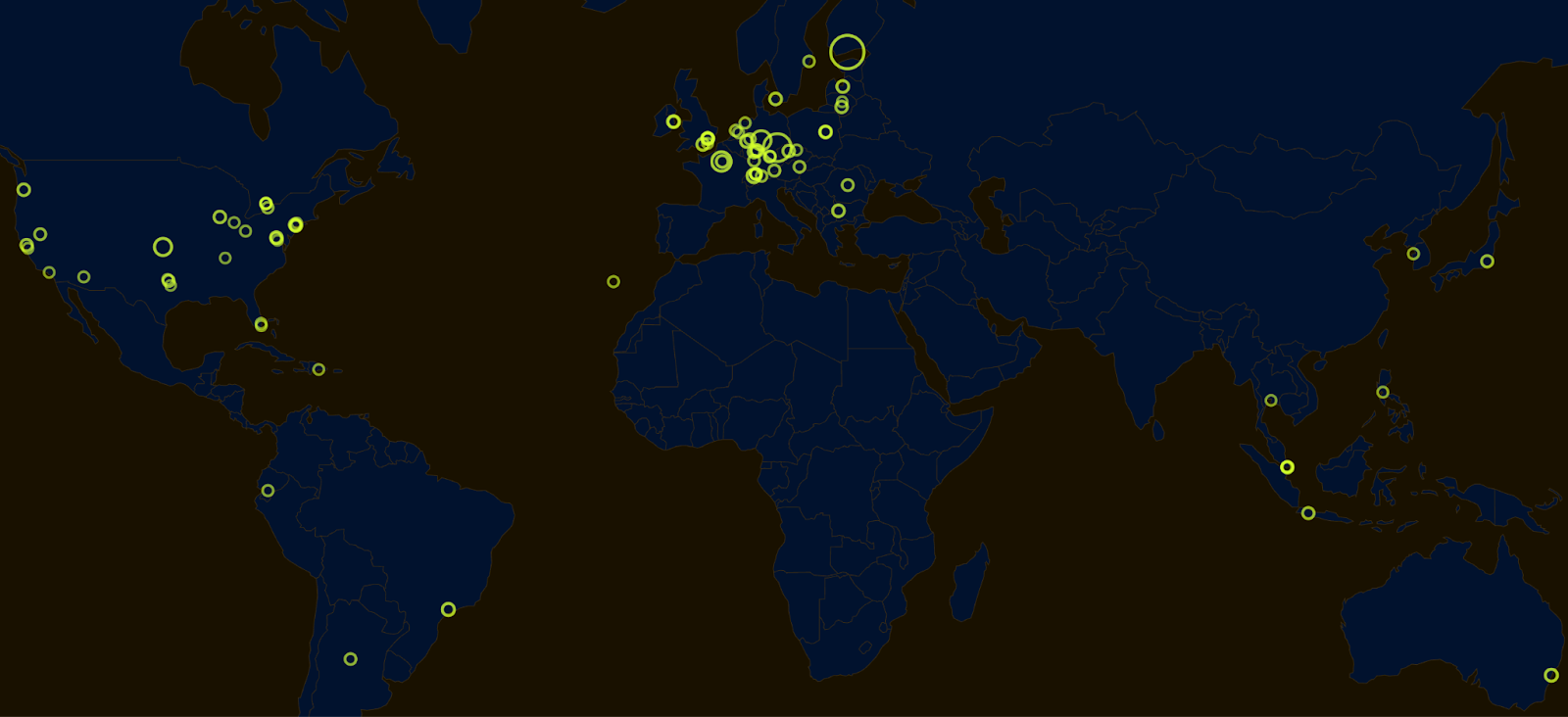

In this definition, the key property is “shared”. The question is how exactly it is shared, or who the participants of the network are. To be shared “for real”, we want the blockchain to have thousands or even millions of nodes that are independent of each other. As of the 15th of April 2024, Ethereum has 5,912 nodes in the network, where by nodes we mean different independent parties running infrastructure (one node might run many validators). And even though some of them are run by institutions, Ethereum can fairly be called a shared and immutable ledger. That is to say, Ethereum is good enough to be the base of the robust internet.
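To make “immutable” a bit more concrete, here is a minimal sketch of a hash-linked ledger in Python. It is an illustration only, not how Ethereum actually stores or validates data: the names `Block`, `append_block`, and `verify_chain` are hypothetical, and consensus, signatures, and networking are left out entirely. The point it shows is that each block commits to the hash of the previous one, so anyone holding a copy can detect a rewritten entry.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


@dataclass(frozen=True)
class Block:
    index: int
    prev_hash: str
    transactions: tuple  # tuple of plain strings in this sketch


def block_hash(block: Block) -> str:
    """Hash a canonical JSON encoding of the block's fields with SHA-256."""
    payload = json.dumps(
        {
            "index": block.index,
            "prev_hash": block.prev_hash,
            "transactions": list(block.transactions),
        },
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain: list, transactions) -> list:
    """Return a new chain with one more block linked to the current tip."""
    prev_hash = block_hash(chain[-1]) if chain else GENESIS_PREV_HASH
    return chain + [Block(len(chain), prev_hash, tuple(transactions))]


def verify_chain(chain: list) -> bool:
    """Check that every block points at the hash of the block before it."""
    expected_prev = GENESIS_PREV_HASH
    for position, block in enumerate(chain):
        if block.index != position or block.prev_hash != expected_prev:
            return False
        expected_prev = block_hash(block)
    return True
```

In this sketch, editing a past block changes its hash and breaks the link stored in the next block. A change to the most recent block is only caught when independent nodes compare the hash of the tip, which is one reason the “shared” part matters as much as the hashing.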

Cryptography provides verifiability

Cryptography’s history is almost as long as humanity’s history. But with the advent of the digital era, some specific kinds of cryptography that fit the needs of computers and the internet started evolving at an insane pace.

The history of zero-knowledge cryptography started in 1989, although it wasn’t obvious it would be a perfect complement for blockchains (even though the idea of blockchains was proposed earlier).

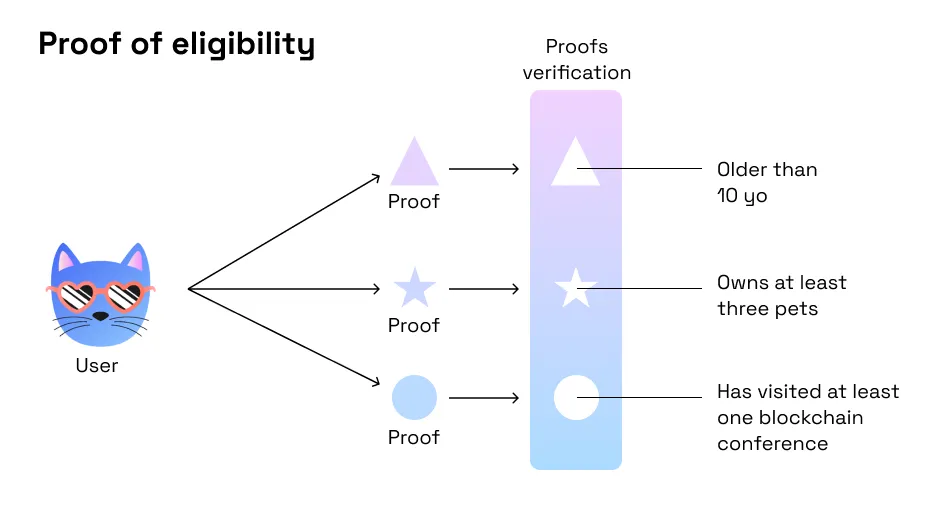

A zero-knowledge protocol involves two parties, a Prover and a Verifier. The Prover convinces the Verifier that a statement is true without revealing anything beyond the fact that it is true. In many modern proof systems the proof is also tiny and cheap to check, even when the statement itself is huge. This second property is called “succinctness” and is a key component of cyberspace, where individuals need to coordinate with each other all the time and the communication has to be as lightweight as possible.
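As a rough illustration of the Prover/Verifier interaction, here is a toy sketch of the classic Schnorr identification protocol, in which the Prover shows knowledge of a secret exponent `x` behind a public value `y` without sending `x`. This is an assumption-laden teaching example: the group parameters are tiny and insecure, the function names are hypothetical, and it is an interactive proof of knowledge rather than the succinct proof systems used on blockchains.

```python
import secrets

# Toy group parameters (far too small for real use): G has order Q modulo P.
P, Q, G = 23, 11, 2


def keygen():
    """Prover picks a secret x and publishes y = G^x mod P."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)


def commit():
    """Prover picks a fresh random nonce r and sends t = G^r mod P."""
    r = secrets.randbelow(Q)
    return r, pow(G, r, P)


def respond(x: int, r: int, c: int) -> int:
    """Prover answers the Verifier's challenge c with s = r + c*x mod Q."""
    return (r + c * x) % Q


def verify(y: int, t: int, c: int, s: int) -> bool:
    """Verifier accepts if G^s equals t * y^c modulo P."""
    return pow(G, s, P) == (t * pow(y, c, P)) % P


def run_round(x: int, y: int) -> bool:
    """One full round: commitment, random challenge, response, check."""
    r, t = commit()
    c = secrets.randbelow(Q)  # Verifier's random challenge
    s = respond(x, r, c)
    return verify(y, t, c, s)
```

The Verifier only ever sees `t`, `c`, and `s`. Because `r` is fresh and random in every round, `s` on its own does not reveal `x`, yet a Prover who does not know `x` cannot reliably produce a response that passes the check.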

Privacy-preserving blockchains provide privacy

Privacy-preserving blockchains allow individuals to choose what information to disclose, when, to whom, and in what form. This is another crucial element of cyberspace, as disclosing one bit of data while keeping other bits private is a must-have mechanism for efficient coordination.

For example, in the case of elections, an individual wants to tell the tally that (i) they are eligible to vote, (ii) they have voted, (iii) they voted only once, but they don’t want to disclose any details about their vote or their identity.
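The “voted only once” requirement is often handled with a so-called nullifier: a value derived from the voter's secret and the election, which the tally can check for duplicates without learning who the voter is. The sketch below shows only that bookkeeping. Everything in it is hypothetical (`make_ballot`, `Tally`, the hashing scheme), and the `proof_ok` flag is a loudly labeled placeholder for the zero-knowledge proof that a real system would generate on the voter's device and verify at the tally.

```python
import hashlib
from dataclasses import dataclass


def h(*parts: str) -> str:
    """Domain-separated SHA-256 over string parts (illustrative only)."""
    return hashlib.sha256("|".join(parts).encode("utf-8")).hexdigest()


def commitment(secret: str) -> str:
    """Public value registered for each eligible voter."""
    return h("commit", secret)


def nullifier(secret: str, election_id: str) -> str:
    """Unique per voter and election; does not reveal the secret."""
    return h("nullify", secret, election_id)


@dataclass(frozen=True)
class Ballot:
    nullifier: str
    encrypted_choice: str  # opaque to the tally in this sketch
    proof_ok: bool  # PLACEHOLDER for a real zero-knowledge eligibility proof


def make_ballot(secret, election_id, eligible_commitments, encrypted_choice):
    """Runs on the voter's device; the secret never leaves it."""
    is_eligible = commitment(secret) in eligible_commitments
    return Ballot(nullifier(secret, election_id), encrypted_choice, is_eligible)


class Tally:
    def __init__(self):
        self.seen_nullifiers = set()
        self.ballots = []

    def accept(self, ballot: Ballot) -> bool:
        if not ballot.proof_ok:  # a real tally would verify a proof here
            return False
        if ballot.nullifier in self.seen_nullifiers:  # second vote attempt
            return False
        self.seen_nullifiers.add(ballot.nullifier)
        self.ballots.append(ballot.encrypted_choice)
        return True
```

The tally stores nullifiers and opaque ballots, never identities. In this sketch a dishonest client could simply set `proof_ok` to true, which is exactly the gap a zero-knowledge proof is meant to close.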

One should note that privacy on the application layer (e.g. private identity or transfers) can’t be added to a transparent blockchain purely by means of cryptography. Privacy should be incorporated into blockchain design at the level of smart contract anatomy and state management.

Now that we’ve looked at what components we need to build robust cyberspace, let’s explore specific cases we need to fix and decide if we really can fix them with these tools.

47 use cases and 75 examples for blockchains, privacy, and zero-knowledge

In the second part of this article, we will explore 47 use cases and 75 examples of how blockchains, privacy, and verifiability can disrupt, heal, expand, and modify the world around us, addressing its problems, weaknesses, points of failure, and fragilities.

But before we dive into the full list, let’s cover three quick examples as an introductory illustration:

- Programmable identity: Today, when someone needs to confirm their identity, age, citizenship, or residential address, they provide a passport, ID, or other formal documents. With zero-knowledge proofs, one can manage all their documents client-side and provide proofs of specific properties upon request, without requiring documents.

One should note that for this use case we strictly need a privacy-preserving, composable blockchain (e.g. Aztec).

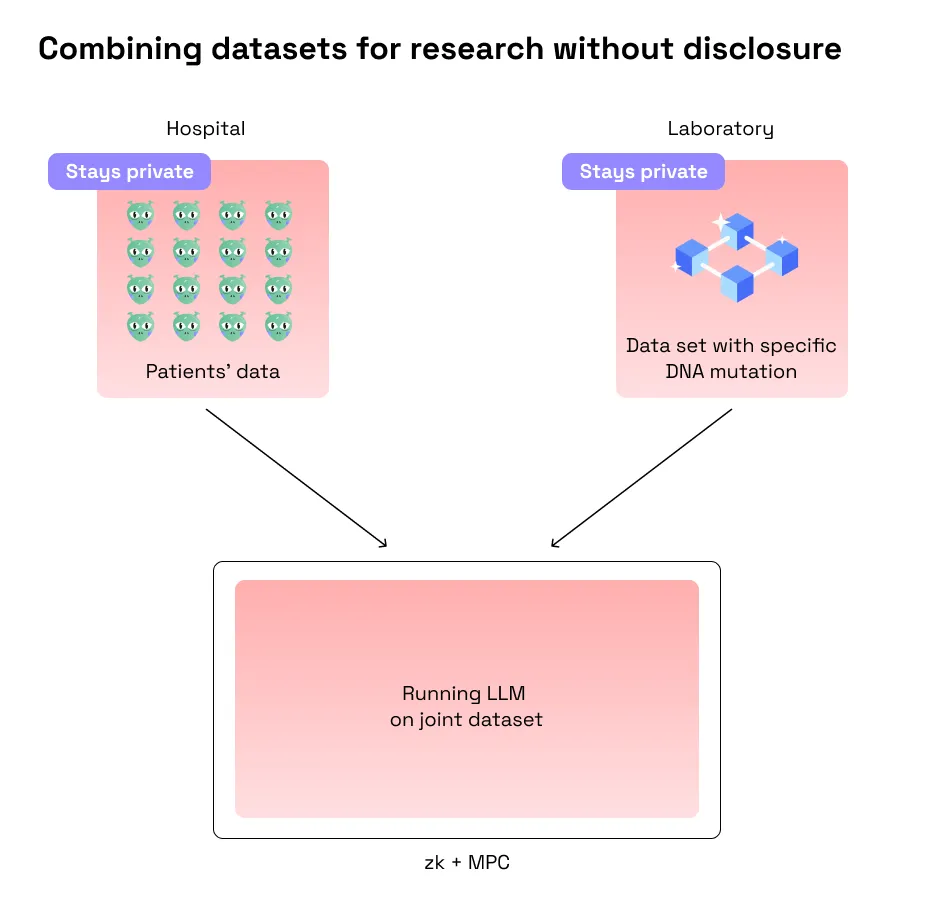

- Private collaborative computation: combining several datasets and a model without disclosing the datasets or the model between parties.

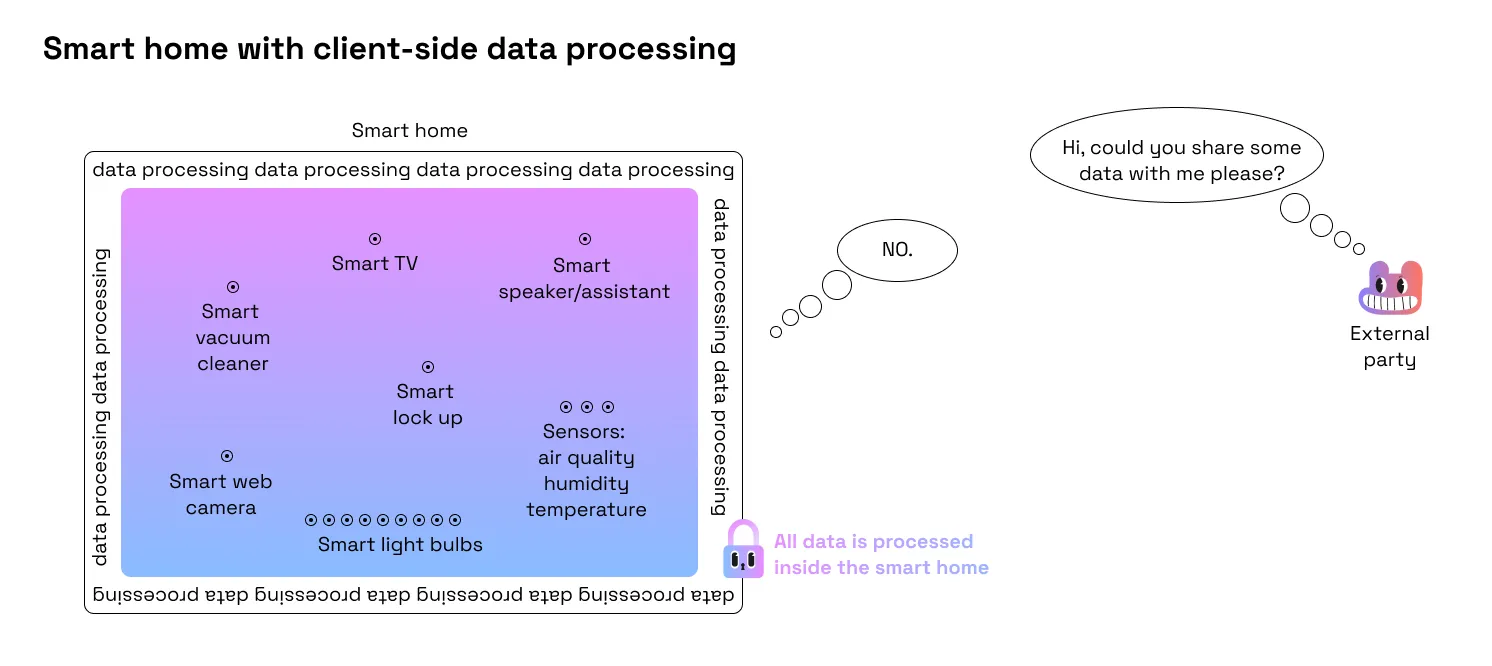

- A smart home that processes all data client-side (i.e. inside the smart home) without sharing its inhabitants’ data with anyone (even Google!).

Now we have some intuition for how zero-knowledge proofs and privacy-preserving blockchains can create an alternative to the current reality: several of the cases we’ve mentioned fix data privacy and individual sovereignty issues and unlock new forms of collaboration and coordination. Finally, we are ready to dive into the longlist of blockchain, privacy, and verifiability use cases, where these three factors become the real game changers for the world and humanity’s future.

Most of the ideas described below were borrowed from brilliant minds, either from their public presentations or personal talks. Credit to Barry, Steven, Zac, freeatnet.eth, Jessica, Henry, Tarun, and Joe for being brilliant minds.

We will also group all use cases into five categories:

- Efficient coordination – allows small numbers of individuals coordinating efficiently to compete with much larger agents.

- Verifiable computation – “truth objectivity” allows individuals to be sure that something is true without relying on the honesty of institutions or other individuals.

- Data immutability and robustness – makes the cyberspace environment more long-lasting, reliable, and independent of institutions and individual agents.

- Improved world economic efficiency – allows individuals and institutions to utilize the resources at their disposal in a more economically efficient manner.

- Privacy – just privacy.

In the table below, we categorize a number of solutions to specific problems we will probably meet within the next decades while the digital share of the world is expanding and rooting deeper and deeper into our reality. We classify them according to whether they require blockchain, privacy, verifiability (provided by zero-knowledge), or a specific combination of these three. In most cases, blockchain serves as a coordination layer, privacy is engaged in all cases where participants can’t go with 100% transparency of all data, and zero-knowledge adds verifiability.

Under blockchain, we assume a decentralized one where nodes are run by thousands of diverse, independent parties. This decentralization makes the network credibly neutral and provides strong security guarantees. Today, Ethereum fits these criteria more than anything else. Layer 2s on top of Ethereum inherit its security properties; however, their credible neutrality depends on design details such as the approach to block building and the upgrade mechanism.

Under privacy, we mean that whatever we want to stay private should be fully processed client-side on the user's device and should not be exposed to any other parties.

We also consider combining blockchain, privacy, and zero-knowledge with other existing technologies such as:

- MPC and 2PC (multi-party and two-party computation) – enable multiple parties (or two parties in the 2PC case), each holding their own private data, to compute the value of a public function over that data while keeping their own inputs secret.

- TEE (trusted execution environment) – a secure area of a main processor that prevents unauthorized entities outside the TEE from reading its data, and prevents code in the TEE from being replaced or modified by unauthorized entities.

- Any existing cryptographic primitive such as an ECDSA signature (an example of a public-key digital signature algorithm).
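To give a feel for the MPC item in this list, here is a minimal sketch of additive secret sharing, one of the simplest building blocks behind multi-party computation. It assumes honest-but-curious parties and simulates all of them in one process; the names `share` and `secure_sum` and the choice of modulus are illustrative assumptions, not part of any specific MPC library.

```python
import secrets

MODULUS = 2**61 - 1  # arbitrary large prime chosen for this sketch


def share(value: int, n_parties: int) -> list:
    """Split value into n random-looking shares that sum to it mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_sum(private_inputs: list) -> int:
    """Compute the sum of all inputs while each input stays split into shares."""
    n = len(private_inputs)
    # distributed[i][j] is the share that party i sends to party j.
    distributed = [share(value, n) for value in private_inputs]
    # Each party j adds up the shares it received and publishes only that total.
    partial_sums = [
        sum(distributed[i][j] for i in range(n)) % MODULUS for j in range(n)
    ]
    # Combining the published partial sums reveals the total, not the inputs.
    return sum(partial_sums) % MODULUS
```

For example, `secure_sum([52000, 61000, 58000])` yields the combined total of three private values, while any single share a party receives is a uniformly random number. Nothing in this sketch stops a party from lying about its shares or partial sum.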

Why can't we use these technologies separately, and why do we need to combine them with zero-knowledge and blockchain? Because we don’t just want to trust that those using them are acting in good faith; we want to be able to verify that they are acting in good faith.

Efficient coordination

Verifiable computations / truth objectivity

Data immutability and robustness

Efficiency

These technologies are absolutely awesome, but making all these use cases possible by combining zero-knowledge and privacy-preserving blockchains takes more than the technologies themselves: they are still not magic pills. We need developers, engineers, and architects to combine them in smart ways. That is to say, the real magic pills are the developers, engineers, and architects capable of doing this and caring about our common future as much as the Aztec team does.

The components (i.e. blockchain, privacy, and verifiability) have been clear at a theoretical level for years. But even when they started to be implemented, most implementations were not really feasible for real-world usage and were hard to combine with each other. This was a serious issue, as web3 applications should be competitive with the existing web2 stuff we already use.

Aztec Labs addressed this issue and has been developing Noir, an open-source, domain-specific language that makes it easy for developers to write programs with arbitrary programmable logic, zero-knowledge proofs, and composable privacy. The program logic can vary from simple “I know X such that X < Y” to complex RSA signature verification. If you’re curious about Noir, check the talk “Learn Noir in an afternoon” by José Pedro Sousa.

Privacy-preserving blockchain and zero-knowledge in spacetech and defense

For those who still consider the 47 use cases above too cypherpunk-ish, the next section talks about the need for privacy-preserving blockchains and zero-knowledge cryptography in a very specific domain for solving very specific problems.

Blockchains and zero-knowledge in space

Today, operating in near-Earth space is a part of everyday life: countries, industries, and businesses rely on the day-to-day operation of satellites and the complex infrastructure created in Earth’s orbit. That is to say, a number of parties (some of which are obviously hostile towards each other) manage a number of programmable “nodes” with sensitive data. That sounds exactly like a coordination problem that privacy-preserving blockchains and zero-knowledge are able to handle!

- Case 1: satellite coordination

Most large countries have their satellites flying in space for some specific missions. That reminds one of car or plane traffic, but instead there are a number of satellites going from point A to point B. The goal is to manage this traffic in a way that there are no collisions or accidents. The coordination problem is that space is everyone’s – that is, the model of plane traffic doesn’t work.

Instead, satellite owners have to coordinate with each other (and as a consequence trust each other) to negotiate space management. This requires pretty deep data disclosure and trust that other parties have good intentions.

We can utilize zero-knowledge to provide proof of correct code execution, meaning that the satellite will perform exactly what its owner promises. For example, it can generate “proof of route”, which is a crucial component of space coordination as one additional zero in the code can send the satellite spinning forever or force it to change its trajectory and crash into other satellites. Privacy-preserving blockchain can be used as a coordination layer to deploy protocols for specific use cases.

- Case 2: operating over hostile areas

Sometimes satellites need to fly over the territory of countries with whom their owners are “not friends”. While a satellite is over these areas, adversaries might try to hack it, interfere with its operation, or forge its data, for example by substituting AI-generated images for authentic ones. To mitigate this risk, we can use zero-knowledge to provide proof of data authenticity or proof of metadata.

- Case 3: planetary defense

Planetary defense is the effort to monitor and protect Earth from asteroids, comets, and other objects in space. Life on Earth has been drastically altered by asteroid impacts before. For example, once a planet-shaking strike led to the extinction of the non-avian dinosaurs.

Planetary defense combines comet and asteroid detection, trajectory assessment, tracking over time, and developing tools for possible collision prevention (including slamming a spacecraft into the target, pulling it using gravity, and nuclear explosions).

Planetary defense is operated by NASA, the European Space Agency, and other organizations, all representing different countries and dealing with sensitive data collection and secret technologies (e.g. satellite engineering mechanisms).

ZKPs and privacy-preserving blockchains can be a coordination layer to process data collected by different parties without exposing it to others.

Blockchains and zero-knowledge for LLMs on battlefields, enterprise, and everyone’s daily lives

LLMs are (today, already, obviously) very valuable, are shaping an absolutely different economy, and will have a huge impact on humans’ daily lives, geopolitical balance, and enterprise operations.

One of the issues with LLMs is that they can’t tell you how they reached their conclusions. However, some of these conclusions change the world and impact millions, if not billions, of people, being used for business, for countries, for battlefields. Take for example the case of using LLMs to detect targets on a battlefield. The model tells the commander “This is the target”, but it doesn’t provide any proof that this target was detected correctly. In this case, the cost of wrong detection is at least a life, maybe a dozen lives, or several hundred thousand lives.

Zero-knowledge proofs can be a “neutral arbiter” providing the proof of what data the model was trained on, what data the model used to make a conclusion, how this data was put together, what the underlying algorithm is, etc. Furthermore, it can be done without revealing any specific information about either the data or the model.

One should note that today we are not talking about whether or not we should use LLMs for specific use cases. The reality is they are already used everywhere, by all major corporations, countries, and their governments. But what we still are able to do while LLMs start flooding our world is make the model owners accountable for their models – that is to say enforce some formal LLM compliance.

Similar to private data processing and collection today, some legislation for AI regulation will be set up as well. However, mere legislation is not enough; standards should be transparent and equal for everyone, and they should be followed.

Can a trusted third party who is believable, shares pro-democratic values, and is neutral enforce legislation compliance? In a domain such as AI, where at stake are, at the very least, huge amounts of money (if not the geopolitical landscape of the world for years to come), there are no neutral parties – everyone has their own interests and skin in the game.

With LLMs, we have no custodial relationship to the data, and we can’t prove how a decision was made. But at the same time, we need to know how the data was put together and be able to question it. That is, for example, an absolute necessity for the presumption of innocence. ZKPs as a source of truth and privacy-preserving blockchains as a coordination layer can solve this issue to enforce AI standards compliance.

Conclusion

We are just at the very beginning. Privacy-preserving blockchains and zero-knowledge cryptography are needed in spacetech, agrotech, medtech, biotech, AI/ML, military technology, social networks, retail, robotics, big data, IoT, media and entertainment, edtech, fintech, logistics, neurotech, etc.

The 47 use cases mentioned above are kind of obvious today, but the real landscape of ZKPs and blockchain usage in 20 years will be much wider, deeper, and more diverse. Some future use cases can be predicted today, while others are almost impossible to imagine (unless you’re a true visionary).

One thing is clear, however: verifiability is an absolutely required property as our world merges its offline and online universes deeper and deeper. ZKPs as a “source of truth” and privacy-preserving blockchains as a coordination layer are a very promising duo to make the world verifiable.

It will take us time. The right moment to start was yesterday. But today is also a good day: for those ready to act together with Aztec – fill in the form.

Sources: