Many thanks to Cooper, Prasad, Rafal, Mahima, and Elias for the review.

Contents

- What do we mean by “decentralization”?

- Why Aztec takes decentralization seriously

- Aztec’s efforts around protocol decentralization: sequencer, prover, and upgrade mechanism

- Request for proposal (RFP)

- Sequencer

- Prover

- Conclusion

1) What do we mean by “decentralization”?

Decentralization is one of the most speculative topics in blockchain, often treated as a meme.

Questions around decentralization include:

- Which components should be decentralized (both at the blockchain and application layers)?

- To what extent?

- Is decentralization of a specific part of the network a must-have or just a nice-to-have?

- Will we die if a specific part of the network is not decentralized?

- Is it enough to have a decentralization roadmap or should we decentralize for real?

- …

The goal of this article is to shed some light on what we mean by decentralization, why it matters, and how decentralization is defined and provided in the context of the Aztec network.

Before we start figuring out the true meaning of decentralization, we should note that decentralization is not a final goal in itself. Instead, it is a way to provide rollups with a number of desired properties, including:

- Permissionlessness (censorship resistance as a consequence) – anyone can submit a transaction and the rollup will process it (i.e. the rollup doesn’t have an opinion on which transactions are good and which are bad and can’t censor them).

- Liveness – the chain operates (processes transactions) nonstop, irrespective of what is happening.

- Security – the correctness of state transition (i.e. transactions' correct execution) is guaranteed by something robust and reliable (e.g. zero-knowledge proofs).

In the case of zk-rollups, these properties are tightly connected with “entities” that operate the rollup, including:

- Sequencer – orders and executes transactions –> impacts permissionlessness and liveness.

- Prover – generates proof that the transactions were executed correctly –> impacts security and liveness.

- Governance mechanism (upgrade mechanism) – manages and implements protocol upgrades –> impacts security, liveness, and permissionlessness.

Although we opened by calling decentralization a speculative topic, the case for it is not overly speculative. Decentralization is required for permissionlessness, censorship resistance, and liveness, which in turn are required to reach the system’s end goal: credible neutrality (at least to some extent). “Credible neutrality” means the protocol is not “designed to favor specific people or outcomes over others” and is “able to convince a large and diverse group of people that the mechanism at least makes that basic effort to be fair”.

Credible neutrality is a crucial element for rollups as well, which is why, among other things, we are prioritizing decentralization at Aztec. Progressive decentralization is not an option: decentralization from the start is a must-have, as the regulatory, political, and legal landscapes are constantly changing.

In the next section, we will dive into the specifics of Aztec’s case, looking at its components and their levels of decentralization.

2) Why Aztec takes decentralization seriously

The Aztec network is a privacy-first L2 on Ethereum. Its goal is to allow developers to build dapps that are both privacy-preserving and compliant (in any desired jurisdiction!).

For Aztec, there are two levels of decentralization: protocol and organizational.

At the protocol level, the Aztec network consists of a number of components, such as the P2P transaction pool (i.e. mempool), sequencer, prover, upgrade mechanism, economics, Noir (a domain-specific language), execution layer, cryptography, web SDK, rollup contract, and more.

For each of these, decentralization might have a slightly different meaning. But the root reason we care about it is to provide safety for the ecosystem of developers and users.

- For the rollup contract and upgrade mechanism, the question is who controls the upgrades and how we can diversify this process in terms of quantity and geography.

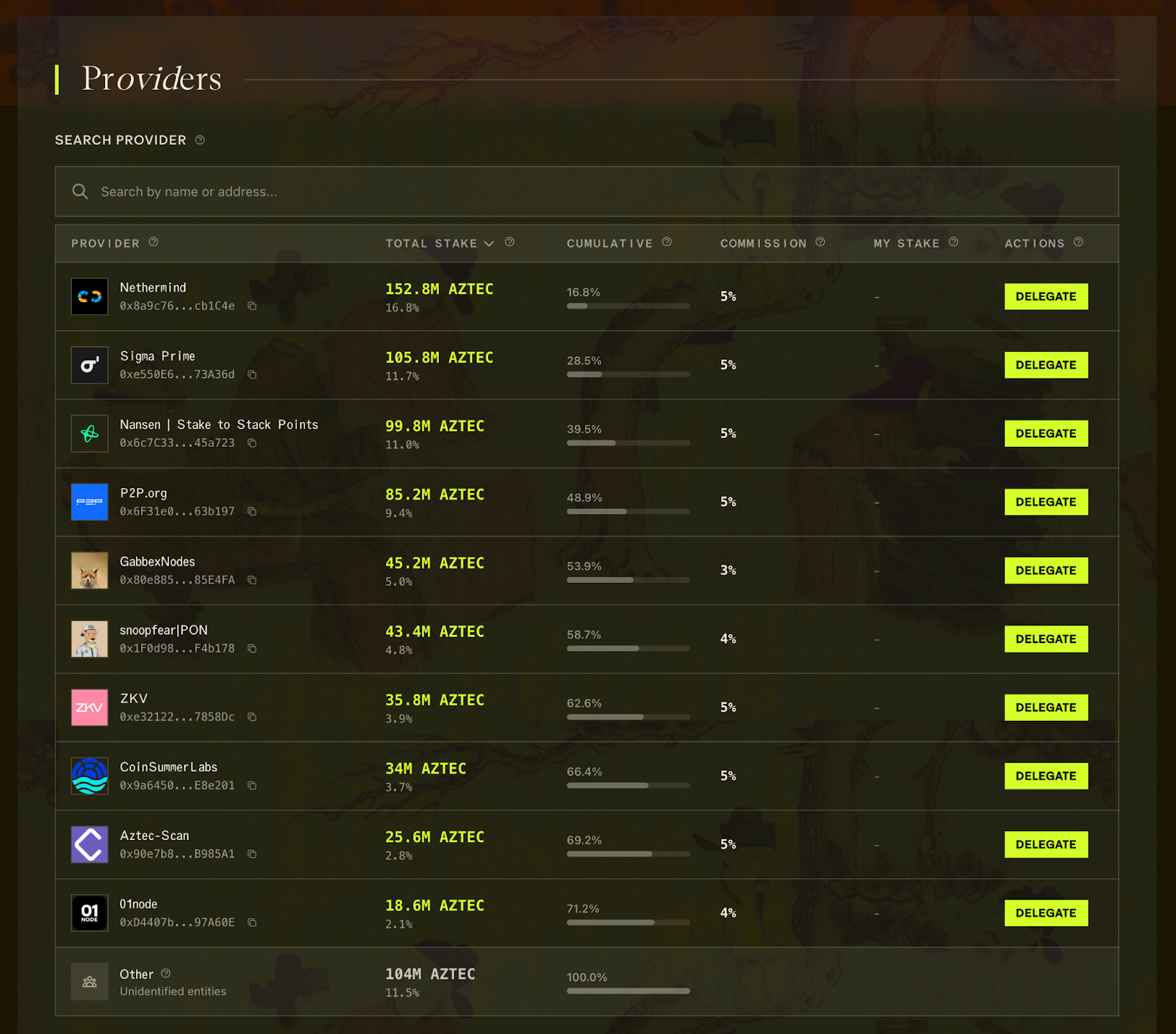

A good mechanism should defend the protocol from forced upgrades (e.g. ordered by a court). It should also mitigate sanctions risk by isolating it at the application level rather than the rollup level.

- For the sequencer and prover, we also need decentralization in both quantity and geography, as well as a multi-client approach where users can choose a vendor from a distributed set of vendors that may have different priorities.

- For economic decentralization, we need to ensure that “the ongoing balancing of incentives among the stakeholders — developers, contributors, and consumers — will drive further contributions of value to the overall system”. It covers the vesting of power, control, and ownership with system stakeholders in such a way that the value of the ecosystem as a whole accrues to a broader array of participants.

- For all software components, such as client software, we need to ensure that copies of the software are distributed widely enough within the community to ensure access, even if the original maintainers choose to abandon the projects.

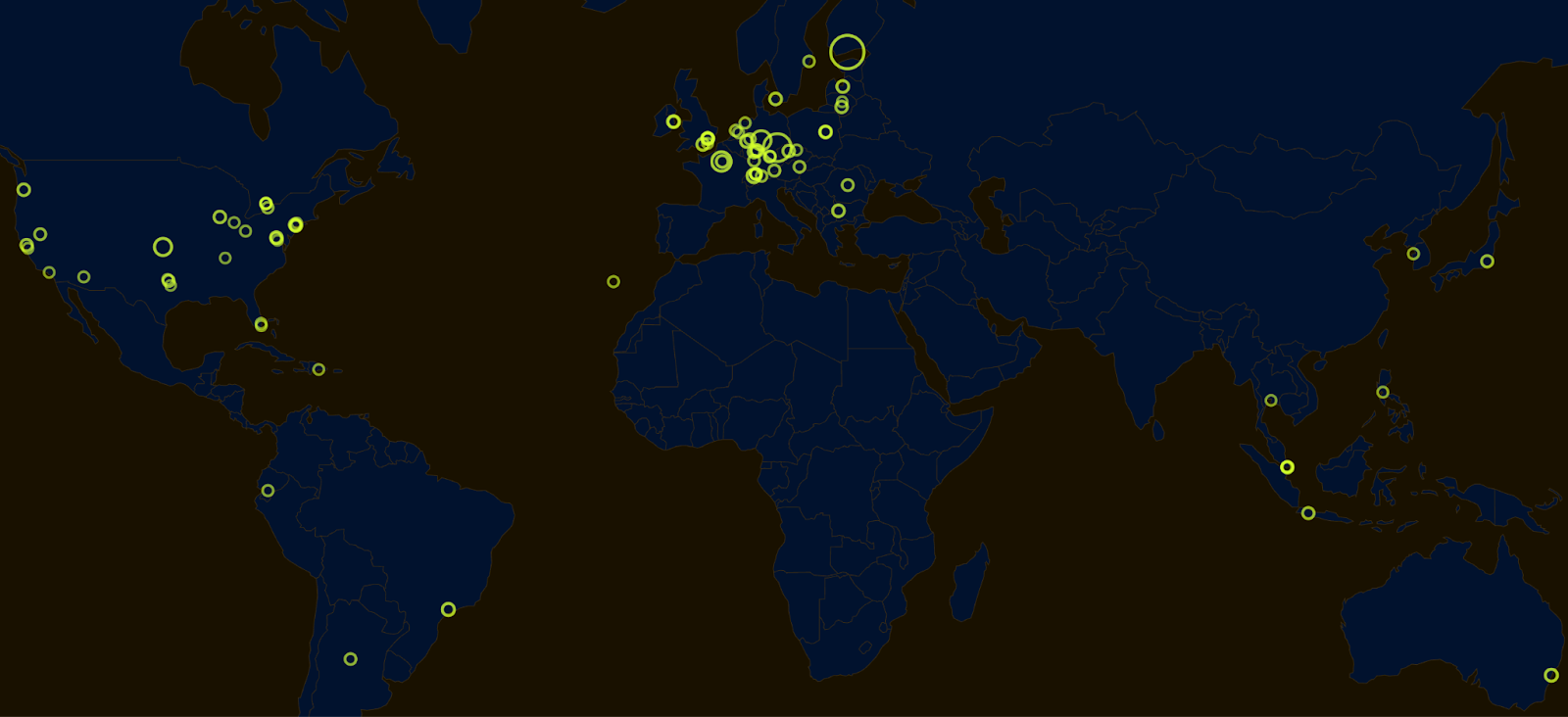

When it comes to long-term economic decentralization, the desired outcome is power decentralization, which in turn can be achieved through geographical decentralization.

In the context of geographical decentralization, we particularly care that:

- diversification among different jurisdictions mitigates the risk of local regulatory regimes attempting to impose their will.

- when reasoning about extremes and black swan events, having a global system is attractive from the point of view of safety and availability.

- intuitively, a system that privileges certain geographies cannot be considered neutral and fair.

For more thoughts on geographical decentralization, check out the article “Decentralized crypto needs you: to be a geographical decentralization maxi” by Phil Daian.

3) Aztec’s efforts around protocol decentralization: sequencer, prover, and upgrade mechanism

The decentralization to-do list is pretty huge. Decentralization mechanism design is a complex process that takes time, which is why Aztec started working on it far in advance and called on the most brilliant minds to collaborate, cooperate, design, and produce the necessary mechanisms that will allow the Aztec network to be credibly neutral from day one.

Request for proposal (RFP)

Since last summer, we’ve announced a number of requests for proposal (RFPs), inviting the community and the greatest minds in the industry to find a range of solutions for the Aztec network protocol design.

Everyone was welcome to craft a proposal and post it on the forum. For each of the RFPs, we outlined a number of protocol requirements that will decentralize and diversify each part of Aztec, making it robust and credibly neutral.

For each RFP, we received a number of proposals (all of them are posted in the RFPs’ comments). Proposals were discussed on the forum by the community and analyzed in detail by partners (e.g. BlockScience) and the Aztec Labs team.

In this section, we will describe and briefly discuss the chosen proposals.

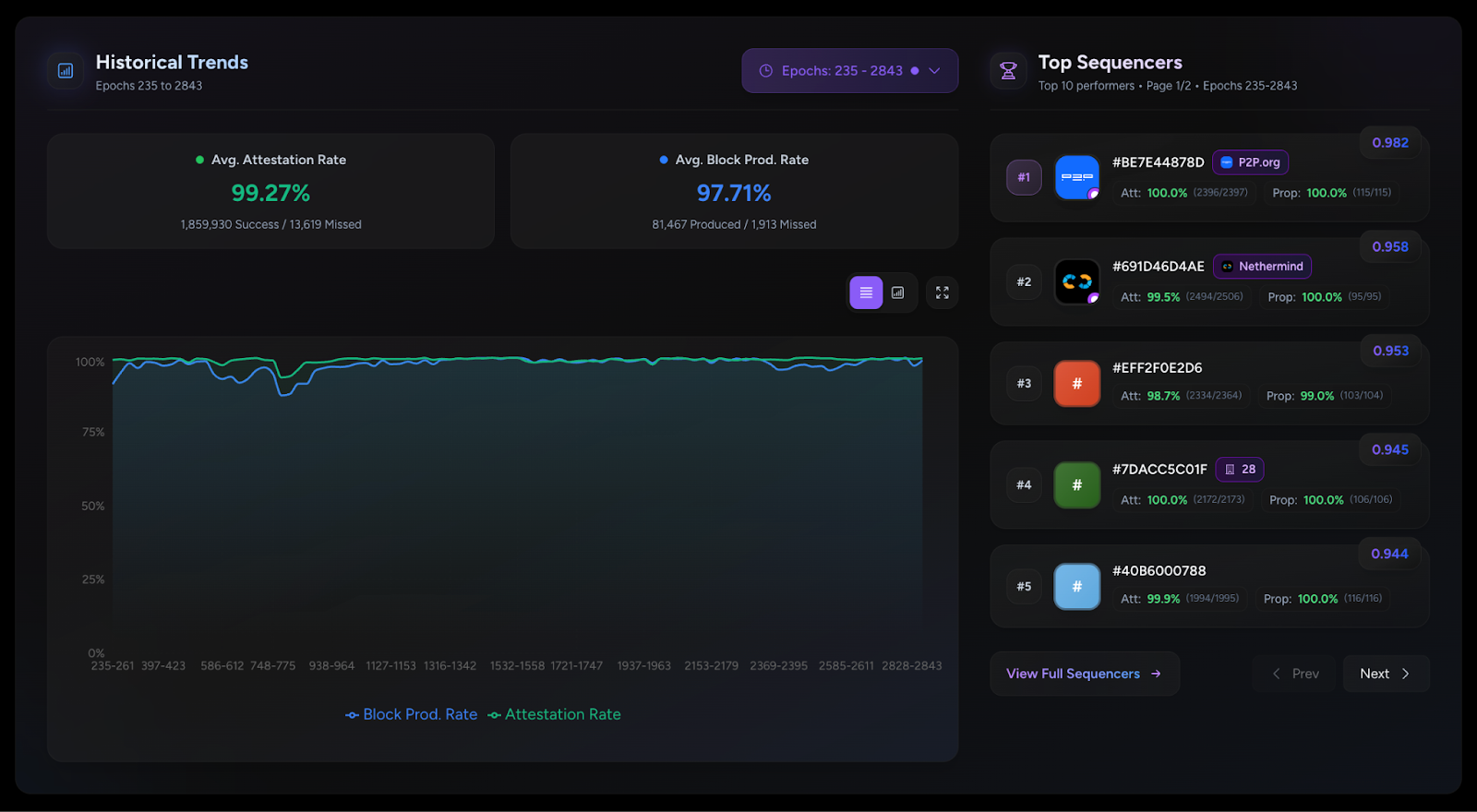

Sequencer Selection

Some of the desired properties

There are a number of desired properties assigned to the sequencer. These include:

- Permissionless sequencer role – any actor who adheres to the protocol can fill the role of sequencer.

- Elegant reorg recovery – the protocol provides a way to recover its state after an Ethereum reorg.

- Denial-of-service resistance – an actor cannot prevent other actors from using the system.

- L2 chain halt recovery – there is a healing mechanism in case the block proposal process fails.

- Censorship resistance – it’s infeasibly expensive to maintain sufficient control of the sequencer role to discriminate on transactions.

Other factors to be considered are:

- How the protocol handles MEV

- How costly it is to form a cartel

- Protocol complexity

- Coordination overhead – how costly it is to coordinate a new round of sequencers

Sequencer mechanism

The chosen sequencer design is called “Fernet” and was suggested by Santiago Palladino (“Palla”), one of the talented engineers at Aztec Labs. Its core component is randomized sequencer selection. To be eligible for selection, sequencers need to stake assets on L1. After staking, a sequencer must wait an activation period of a set number of L1 blocks before it can start proposing new blocks. This waiting period guards the protocol against malicious governance attacks.
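The activation rule can be sketched as a simple eligibility check. This is a minimal illustration, not Aztec's implementation: the `Stake` record, the `is_eligible` helper, and the `ACTIVATION_PERIOD_L1_BLOCKS` value of 100 are hypothetical, since the article does not state the actual period length.

```python
from dataclasses import dataclass

# Hypothetical value: the article does not specify the real activation period.
ACTIVATION_PERIOD_L1_BLOCKS = 100


@dataclass(frozen=True)
class Stake:
    sequencer: str           # sequencer identifier (e.g. an L1 address)
    staked_at_l1_block: int  # L1 block in which the stake was deposited


def is_eligible(stake: Stake, current_l1_block: int,
                activation_period: int = ACTIVATION_PERIOD_L1_BLOCKS) -> bool:
    """A staked sequencer may propose blocks only after the activation period has elapsed."""
    return current_l1_block >= stake.staked_at_l1_block + activation_period


stake = Stake(sequencer="0xabc", staked_at_l1_block=1_000)
print(is_eligible(stake, current_l1_block=1_050))  # False: still waiting
print(is_eligible(stake, current_l1_block=1_100))  # True: activation period elapsed
```

In this sketch, a freshly staked sequencer has no influence on block production until the configured number of L1 blocks has passed.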

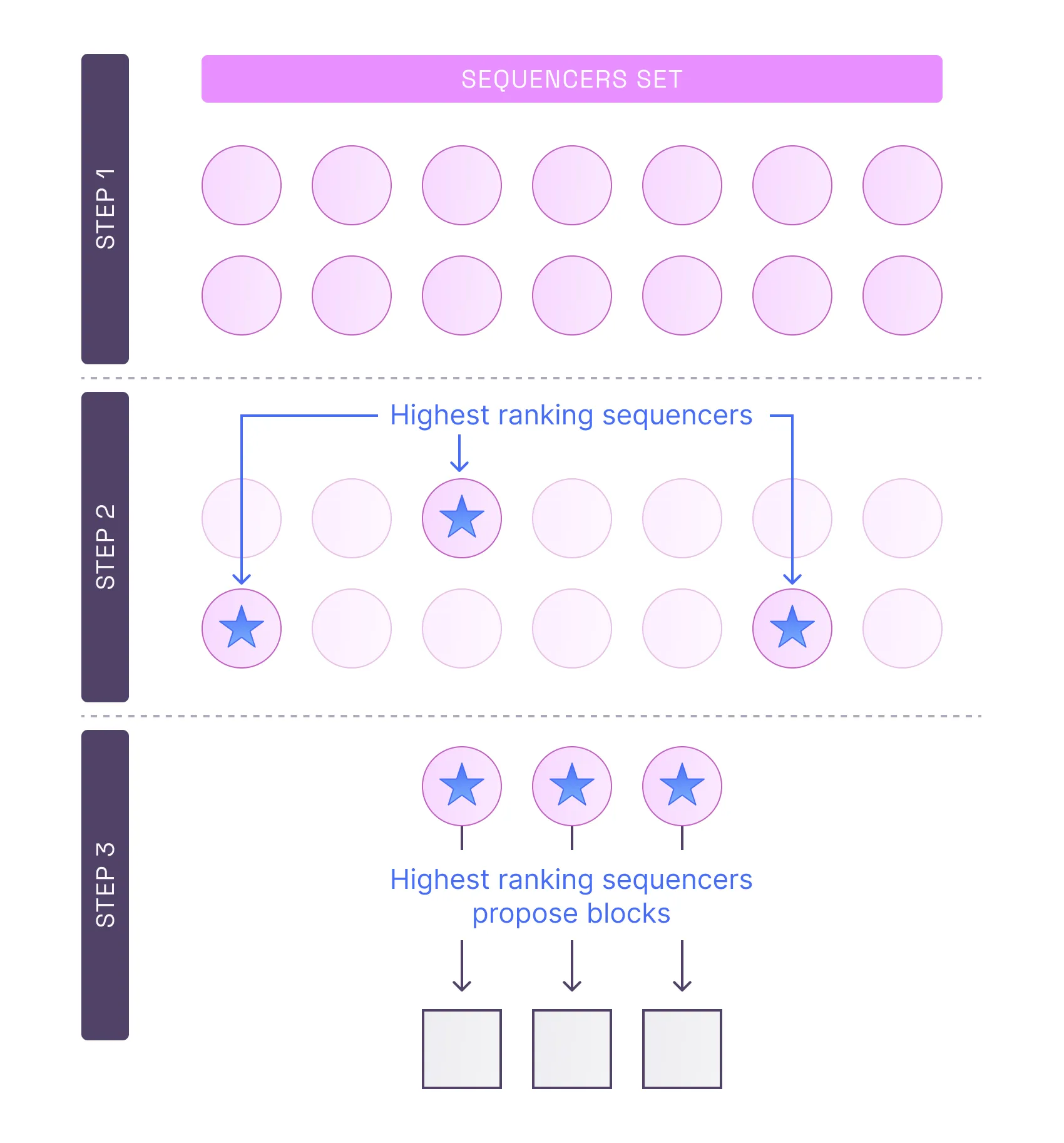

Stage 0: Score calculation

- In each round (currently expected to last ~12-36s), staked sequencers calculate their round-based score, derived from a hash over RANDAO and their public key.
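Stage 0 can be sketched in a few lines. The article only says the score is derived from a hash over RANDAO and a public key; the choice of SHA-256, the concatenation order, reading the digest as an integer, and the convention that a higher score ranks higher are all assumptions made for this illustration, and `round_score` is a hypothetical helper.

```python
import hashlib


def round_score(randao: bytes, public_key: bytes) -> int:
    """Hypothetical per-round score: SHA-256(randao || public_key) as a big-endian integer.

    The score is deterministic, so every participant can recompute any
    sequencer's score for the round from public data.
    """
    digest = hashlib.sha256(randao + public_key).digest()
    return int.from_bytes(digest, "big")


# Usage: rank three (made-up) sequencer keys for one round, highest score first.
randao = bytes.fromhex("11" * 32)
keys = {"alice": b"pk-alice", "bob": b"pk-bob", "carol": b"pk-carol"}
ranking = sorted(keys, key=lambda name: round_score(randao, keys[name]), reverse=True)
print(ranking)
```

Because RANDAO changes every round, the ranking is reshuffled each round without any sequencer having to coordinate with the others.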

Stage 1: Proposal

- Based on the calculated scores, if a sequencer determines that its score for a given round is likely to win, it commits to a block proposal.

- During the proposal stage, the highest ranking proposers (i.e. sequencers) submit L1 transactions, including a commitment to the Layer-2 transaction ordering in the proposed block, the previous block being built upon, and any additional metadata required by the protocol.
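The article does not say how a sequencer decides its score is “likely to win”. One possible heuristic, shown here as an assumption rather than Fernet's actual rule: if the other N eligible scores are independent and uniform over a 256-bit score space [0, 2^256), the probability that a score s beats all of them is (s / 2^256)^N, and the sequencer proposes when that probability clears a self-chosen threshold. `win_probability`, `should_propose`, and the 5% threshold are hypothetical.

```python
SCORE_SPACE = 2 ** 256  # assumption: scores are 256-bit hash outputs


def win_probability(score: int, num_other_sequencers: int) -> float:
    """P(score exceeds every other score), assuming other scores are i.i.d. uniform."""
    return (score / SCORE_SPACE) ** num_other_sequencers


def should_propose(score: int, num_other_sequencers: int, threshold: float = 0.05) -> bool:
    """Hypothetical local policy: commit to a proposal only if the win chance is high enough."""
    return win_probability(score, num_other_sequencers) >= threshold


print(win_probability(SCORE_SPACE // 2, 1))   # 0.5: a median score beats one rival half the time
print(should_propose(SCORE_SPACE - 1, 100))   # True: near-maximal score
print(should_propose(SCORE_SPACE // 2, 100))  # False: 0.5**100 is negligible
```

Since each sequencer applies its own threshold, several of them can decide to propose in the same round.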

Stage 2: Prover commitment – estimated ~3-5 Ethereum blocks

- The highest-ranking proposers (i.e. sequencers) make an off-chain deal with provers. This might be vertical integration (i.e. a sequencer runs its own prover), a business deal with a specific third-party prover, or a prover-boost auction among all of the third-party proving marketplaces.

On the sequencers' side, this approach lets them obtain proofs according to their needs. On the network side, the protocol benefits from modularity and can take advantage of innovations in proving systems.

- Provers build proofs for the blocks with the highest scores.

- This stage will be explicitly defined in the next section dedicated to the proving mechanism.

Stage 3: Reveal

- At the end of the proposal phase, the sequencer with the highest ranking block proposal on L1 becomes the leader for this cycle, and reveals the block content, i.e. uploads the block contents to either L1 or a verifiable DA layer.

- As stages 0 and 1 are effectively multi-leader protocols, there is a very high probability that someone will submit a proposal (though that proposer might not be among the leaders according to the score).

If no one submits a valid block proposal, the protocol falls back to a “backup” mode, which enables a first-come, first-served race to submit the first proof to the L1 smart contracts. A similar backup mode applies if there is a valid proposal but no valid prover commitment (deposit) by the end of the prover commitment phase, or if the block does not get finalized.

- If the leading sequencer posts invalid data during the reveal phase, the sequencer for the next block will build from the previous one.
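The reveal-stage outcome can be sketched as a pure function over the proposals that reached L1. This is an illustration under stated assumptions: `Proposal` and `select_leader` are hypothetical names, a higher score is assumed to rank higher, and the leader is assumed to be the highest-ranking proposal that has a valid prover commitment (a positive deposit). With no proposals, or no committed proposal, the sketch falls back to backup mode.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Proposal:
    sequencer: str
    score: int           # round score of the proposing sequencer
    prover_deposit: int  # 0 means no prover commitment was posted


def select_leader(proposals: Sequence[Proposal]) -> Tuple[str, Optional[Proposal]]:
    """Return ("leader", proposal) or ("backup", None) for one round."""
    if not proposals:
        # Nobody proposed: first-come, first-served backup mode.
        return ("backup", None)
    committed = [p for p in proposals if p.prover_deposit > 0]
    if not committed:
        # Valid proposals exist, but no prover committed to any of them.
        return ("backup", None)
    return ("leader", max(committed, key=lambda p: p.score))


round_proposals = [
    Proposal("alice", score=90, prover_deposit=10),
    Proposal("bob", score=95, prover_deposit=0),  # highest score, but no prover commitment
    Proposal("carol", score=70, prover_deposit=10),
]
print(select_leader(round_proposals))  # alice leads: bob has no prover commitment
print(select_leader([]))               # ('backup', None)
```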

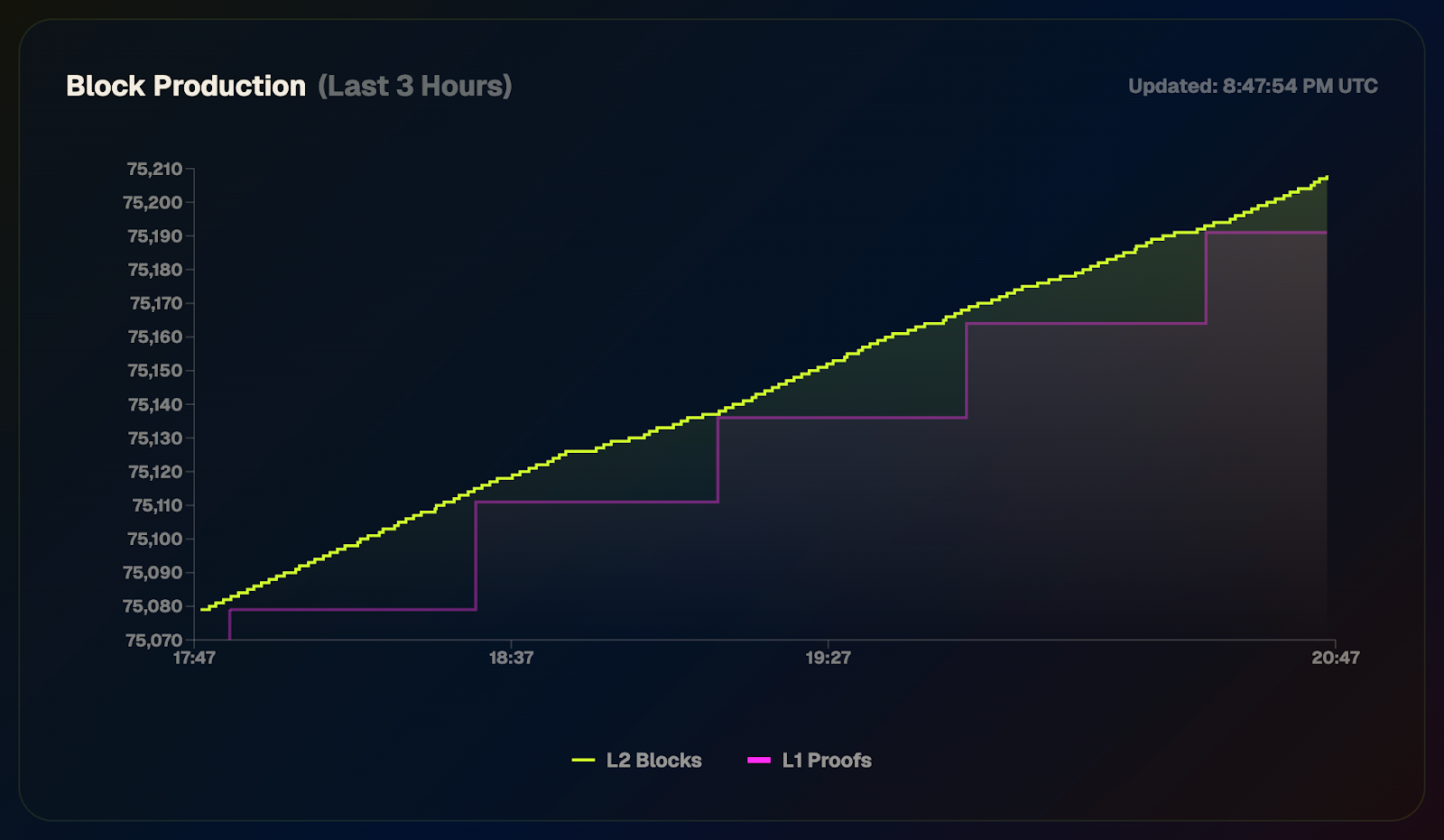

Stage 4: Proving – estimated ~40 Ethereum blocks

- Before the end of this phase, the block proof is expected to be published to L1 for verification.

- Once the proof for the highest ranking block is submitted to L1 and verified, the block becomes final, assuming its parent block in the chain is also final.

- This would trigger the minting of new tokens and payouts to the sequencer, the prover commitment address, and the address that submitted the proof.

- If block N is committed to but doesn't get proven, its prover deposit is slashed.

The cycle for block N+1 can start at the end of the block N reveal phase.
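The finality and slashing rules of stage 4 can be sketched as follows. The article states the rules but not their implementation, so `Block`, `is_final`, and `slash_if_unproven` are hypothetical, and slashing the entire prover deposit is an assumption; the actual slashed amount is not specified here.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Block:
    number: int
    parent: Optional[int]  # None for the genesis block
    proof_verified: bool = False
    prover_deposit: int = 0


def is_final(blocks: Dict[int, Block], number: int) -> bool:
    """A block is final when its own proof is verified and its parent is final."""
    current: Optional[int] = number
    while current is not None:
        block = blocks[current]
        if not block.proof_verified:
            return False
        current = block.parent
    return True


def slash_if_unproven(block: Block, proving_phase_over: bool) -> int:
    """Return the amount slashed; this sketch (hypothetically) slashes the whole deposit."""
    if proving_phase_over and not block.proof_verified:
        slashed, block.prover_deposit = block.prover_deposit, 0
        return slashed
    return 0


chain = {
    0: Block(0, None, proof_verified=True),  # genesis treated as proven
    1: Block(1, 0, proof_verified=False, prover_deposit=50),
    2: Block(2, 1, proof_verified=True),
}
print(is_final(chain, 2))                 # False: parent block 1 is not proven yet
print(slash_if_unproven(chain[1], True))  # 50
```

Block 2 has a verified proof but still is not final, which matches the rule that a block only becomes final once its parent is final too.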

How Fernet meets required properties

For a detailed analysis of the protocol's ability to satisfy the design requirements, check the report “Aztec X BlockScience Report: RfP - Sequencer Selection”, prepared by an independent third party, BlockScience.

Prover

Context

In the previous section, we mentioned that provers commit to blocks at stage 2 and supply proofs at stage 4. However, we didn’t explicitly define the specific prover mechanism.

To design a prover mechanism, Aztec also launched an RFP after Fernet was chosen as the sequencer mechanism (as described in the previous section).

Without going into too much detail, one should note that the Aztec network has two types of proofs: client-side proofs and rollup-side proofs. Client-side proofs are generated for each private function and submitted to the Aztec network by the user. The client-side proving mechanism doesn’t have any decentralization requirements, as all the private data is processed solely on the user’s device, meaning it’s inherently decentralized. Covering client-side proof generation is outside the scope of this piece, but check out one of our previous articles to learn more about it.

The Aztec RFP “Decentralized Prover Coordination” asked for a rollup-side prover mechanism, the goal of which is to generate proofs for blocks.

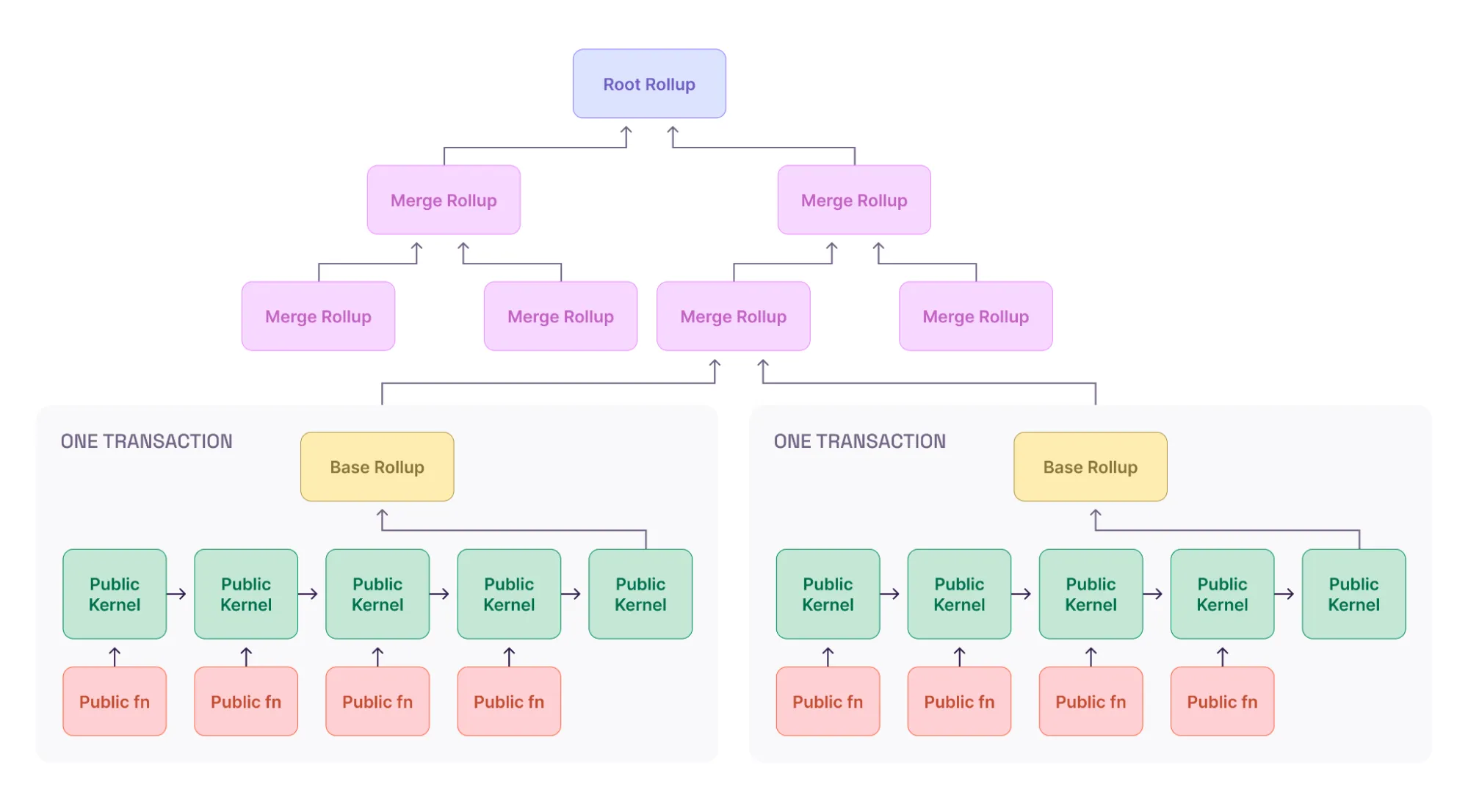

In particular, this means the sequencer executes every public function and the prover creates a proof of each function’s correct execution. That proof is aggregated into a kernel proof. Each kernel proof is aggregated into the next kernel proof, and so on (i.e. forming a chain of kernel proofs). The final kernel proof is aggregated into a base rollup proof. The base rollup proofs are then aggregated in pairs, forming a tree-like structure whose root is the final block proof.
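The aggregation shape described above can be illustrated with hashes standing in for proofs. This is only a structural sketch: `aggregate` is a hypothetical stand-in built on SHA-256 and proves nothing, one base rollup per transaction is assumed, and carrying an unpaired proof up to the next level is an assumption about how odd counts are handled.

```python
import hashlib
from typing import List


def aggregate(tag: bytes, *proofs: bytes) -> bytes:
    """Stand-in for recursive proof aggregation (a length-prefixed SHA-256, not a real proof)."""
    m = hashlib.sha256(tag)
    for p in proofs:
        m.update(len(p).to_bytes(8, "big"))
        m.update(p)
    return m.digest()


def kernel_chain(function_proofs: List[bytes]) -> bytes:
    """Fold each public-function proof into the running kernel proof (a chain of kernels)."""
    kernel = aggregate(b"kernel-init")
    for fp in function_proofs:
        kernel = aggregate(b"kernel", kernel, fp)
    return kernel


def base_rollup(final_kernel: bytes) -> bytes:
    return aggregate(b"base", final_kernel)


def block_proof(base_proofs: List[bytes]) -> bytes:
    """Merge base rollup proofs pairwise, level by level; the root is the block proof."""
    if not base_proofs:
        raise ValueError("a block needs at least one base rollup proof")
    level = list(base_proofs)
    while len(level) > 1:
        merged = [aggregate(b"merge", level[i], level[i + 1])
                  for i in range(0, len(level) - 1, 2)]
        if len(level) % 2 == 1:
            merged.append(level[-1])  # assumption: an unpaired proof moves up unchanged
        level = merged
    return level[0]


# Usage: three transactions, each with a few (fake) public-function proofs.
txs = [[b"f1", b"f2"], [b"g1"], [b"h1", b"h2", b"h3"]]
bases = [base_rollup(kernel_chain(tx)) for tx in txs]
print(block_proof(bases).hex())
```

The pairwise merge means a block with n base rollups needs only about log2(n) levels of merging to reach the root.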

Desired properties

There are a number of desired properties for the prover mechanism. Among them:

- Permissionlessness – anyone can run an Aztec prover.

- The protocol can identify the prover of each block in order to reward or slash it.

- Recovery mechanism in case provers stop supplying proofs.

- Flexibility for future cryptography improvements.

Prover mechanism

The chosen prover mechanism is called “Sidecar” and was suggested by Cooper Kunz.

- It is a minimally enshrined commitment and slashing scheme that facilitates the sequencer outsourcing proving rights to anyone, given an out-of-protocol prover marketplace. This allows sequencers to leverage reputation or other off-protocol information to make their choice.

- In particular, it means anyone can take a prover role. For example, it can be a specialized proving marketplace, or a vertically integrated sequencer’s prover, or an individual independent prover.

- After the sequencer chooses its prover, there is a Prover Commitment Phase. By the end of this phase, any sequencer that believes it has a chance to win block proposal rights must signal the prover’s Ethereum address via an L1 transaction, and the prover specifies its deposit.

- After the prover commits, the sequencer reveals the block content. This specific order (first the prover commitment, then the block content reveal) mitigates potential MEV stealing (which could occur if sequencers had to publish all data to a DA layer before the commitment) and proof withholding attacks (i.e. putting up a block proposal that seems valid but never revealing the underlying data required to verify it).

- The prover operates outside of the Aztec protocol and the Aztec network. Hence, after the prover commitment stage, the protocol simply waits a predetermined amount of time for the proof submission phase to begin.
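The ordering Sidecar enforces (prover commitment first, then block reveal, then proof submission) can be sketched as a small state machine. The class, method names, and the positive-deposit check are hypothetical illustrations, not Aztec's contract interface; they only encode the ordering described in the bullets above.

```python
from enum import Enum, auto
from typing import Optional


class Phase(Enum):
    PROPOSED = auto()
    PROVER_COMMITTED = auto()
    REVEALED = auto()
    PROVEN = auto()


class SidecarBlock:
    """Tracks one block proposal through the Sidecar ordering."""

    def __init__(self) -> None:
        self.phase = Phase.PROPOSED
        self.prover: Optional[str] = None
        self.deposit = 0

    def _require(self, expected: Phase) -> None:
        if self.phase is not expected:
            raise RuntimeError(f"expected phase {expected.name}, got {self.phase.name}")

    def commit_prover(self, prover_address: str, deposit: int) -> None:
        # The sequencer signals the prover's address on L1; the prover posts a deposit.
        self._require(Phase.PROPOSED)
        if deposit <= 0:
            raise ValueError("prover commitment requires a positive deposit")
        self.prover, self.deposit = prover_address, deposit
        self.phase = Phase.PROVER_COMMITTED

    def reveal(self) -> None:
        # Block content is revealed only after a prover is committed.
        self._require(Phase.PROVER_COMMITTED)
        self.phase = Phase.REVEALED

    def submit_proof(self) -> None:
        self._require(Phase.REVEALED)
        self.phase = Phase.PROVEN


block = SidecarBlock()
try:
    block.reveal()  # rejected: no prover has committed yet
except RuntimeError as err:
    print(err)      # expected phase PROVER_COMMITTED, got PROPOSED
block.commit_prover("0xprover", deposit=100)
block.reveal()
block.submit_proof()
print(block.phase)  # Phase.PROVEN
```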

How Sidecar meets required properties

Conclusion

Decentralization is neither a sentiment nor a meme. It’s one of the core milestones on the way to credible neutrality. And credible neutrality is one of the core milestones on the way to a long-lasting, secure, and robust Ethereum ecosystem.

If the network is not credibly neutral, the safety of users’ funds cannot be guaranteed in the long term. Furthermore, if the network is not credibly neutral, the developers building on top of it can’t be sure that the network will be there for them tomorrow, the day after tomorrow, in a year, in ten years, etc. They have to trust that the network team are good, reliable people who will continue maintaining the network and fulfill all their promises. But what if that is not the case? The good intentions of a small number of people are not enough to secure hundreds of dapps, the thousands of developers building them, and the millions of users using them. The network should be designed in a credibly neutral way from the first bit to the last. Without compromises, without speculation, without promises.

That is what we are working on at Aztec Labs: systematically decentralizing all of the network’s components (e.g. sequencer and prover) with the help and support of a wide community (e.g. through RFP and RFC mechanisms) and top-notch partners (e.g. Block Science).

For the same reasons, especially in the early days, Aztec prioritizes safety over other properties (e.g. making reorg attacks impossible by design, and a gradual rollout of the upgrade mechanism that gives sequencers enough time to battle-test it before any assets come to the network).

Besides technical and economic decentralization, Aztec also considers the legal aspect of decentralization, which takes the form of a foundation: a suitable vehicle for promoting decentralization.

If you want to contribute to Aztec’s decentralization – fill in the form.

This was the first part of the piece on Aztec’s decentralization. In the second part (coming soon), we will cover the upgrade mechanism.

Sources:

- An article “Aztec X BlockScience Report: RfP - Sequencer Selection”

- An article “Fernet”

- A forum post “[Proposal] Prover Coordination: Sidecar”

- A forum post “Decentralized crypto needs you: to be a geographical decentralization maxi”

- A forum post “Request for Comments: Aztec Sequencer Selection and Prover Coordination Protocols”