The first decentralized L2 on Ethereum reaches 75k block height with 30M $AZTEC distributed through block rewards.

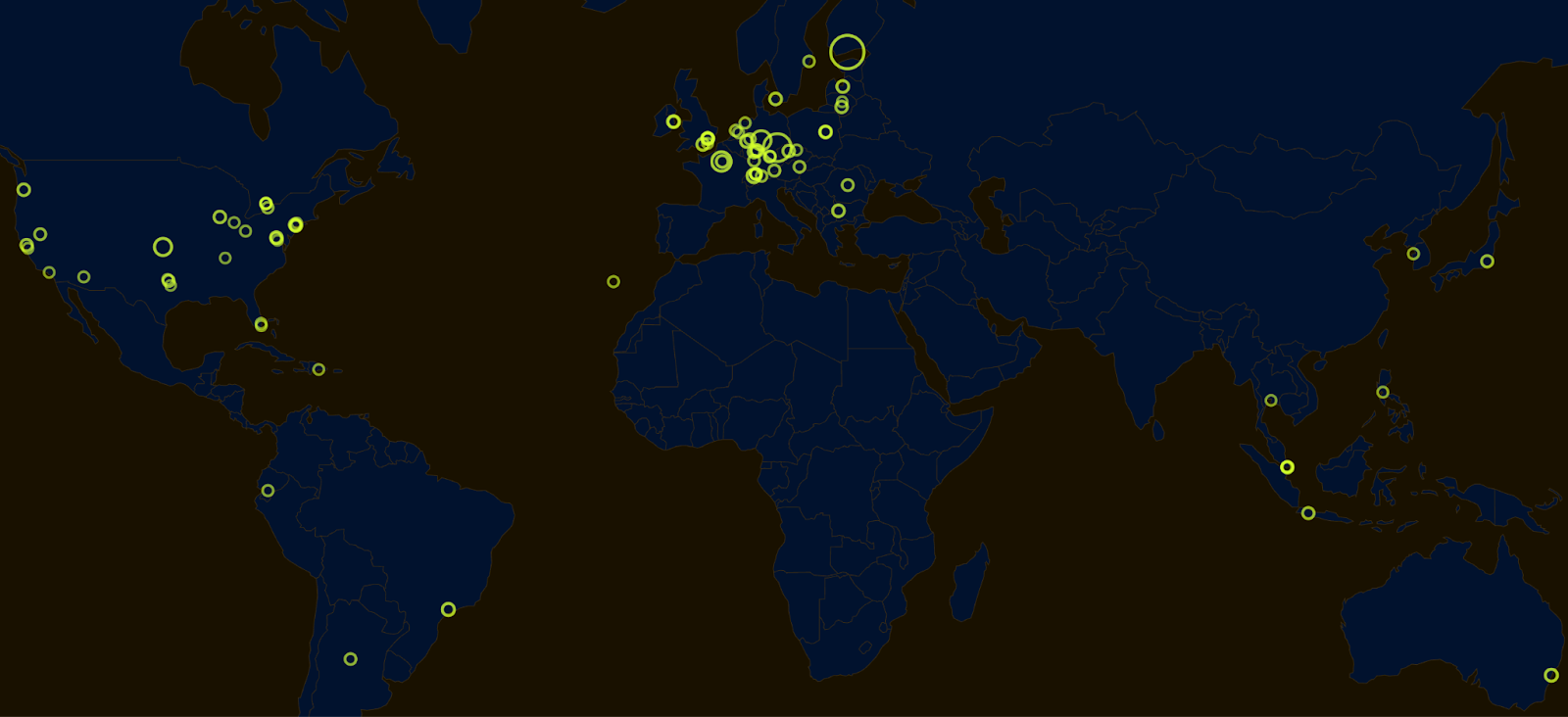

In November 2025, the Aztec Ignition Chain went live as the first decentralized L2 on Ethereum. Since launch, more than 185 operators across 5 continents have joined the network, with 3,400+ sequencers now running. The Ignition Chain is the backbone of the Aztec Network; true end-to-end programmable privacy is only possible when the underlying network is decentralized and permissionless.

Until now, only participants from the $AZTEC token sale have been able to stake and earn block rewards ahead of Aztec's upcoming Token Generation Event (TGE), but that's about to change. Keep reading for an update on the state of the network and learn how you can spin up your own sequencer or start delegating your tokens to stake once TGE goes live.

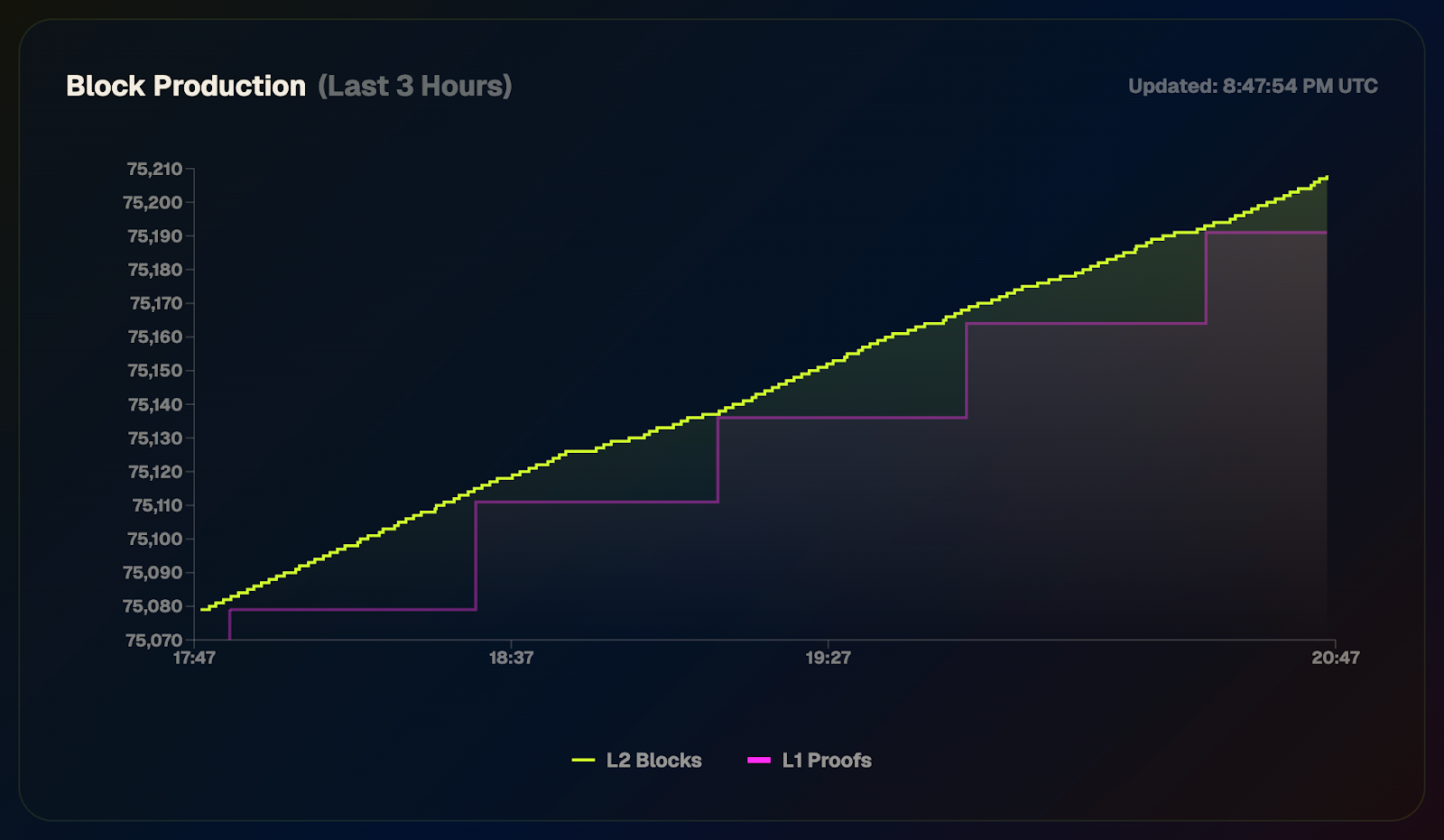

The Ignition Chain launched to prove the stability of the consensus layer before the execution environment ships, which will enable privacy-preserving smart contracts. The network has remained healthy, crossing a block height of 75k blocks with zero downtime. That includes navigating Ethereum's major Fusaka upgrade in December 2025 and a governance upgrade to increase the queue speed for joining the sequencer set.

Over 30M $AZTEC tokens have been distributed to sequencers and provers to date. Block rewards go out every epoch (every 32 blocks), with 70% going to sequencers and 30% going to provers for generating block proofs.
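As a rough illustration of the split described above, here is a minimal sketch. The 32-block epoch and the 70/30 split come from the post; the per-epoch reward amount, the function names, and the assumption that epochs are counted from block 0 are hypothetical and only there to make the arithmetic concrete.

```python
from fractions import Fraction

BLOCKS_PER_EPOCH = 32                # from the post: rewards go out every 32 blocks
SEQUENCER_SHARE = Fraction(70, 100)  # from the post: 70% to sequencers
PROVER_SHARE = Fraction(30, 100)     # from the post: 30% to provers


def split_epoch_reward(epoch_reward):
    """Split one epoch's reward into (sequencer part, prover part)."""
    reward = Fraction(epoch_reward)
    return reward * SEQUENCER_SHARE, reward * PROVER_SHARE


def completed_epochs(block_height):
    """Whole epochs contained in a block height (assumes counting from block 0)."""
    return block_height // BLOCKS_PER_EPOCH


# Hypothetical epoch reward of 1,000 tokens; the real amount is not stated in the post.
to_sequencers, to_provers = split_epoch_reward(1_000)
print(to_sequencers, to_provers)   # 700 300
print(completed_epochs(75_000))    # 2343
```

Using `Fraction` keeps the split exact, so the two parts always sum to the full epoch reward.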

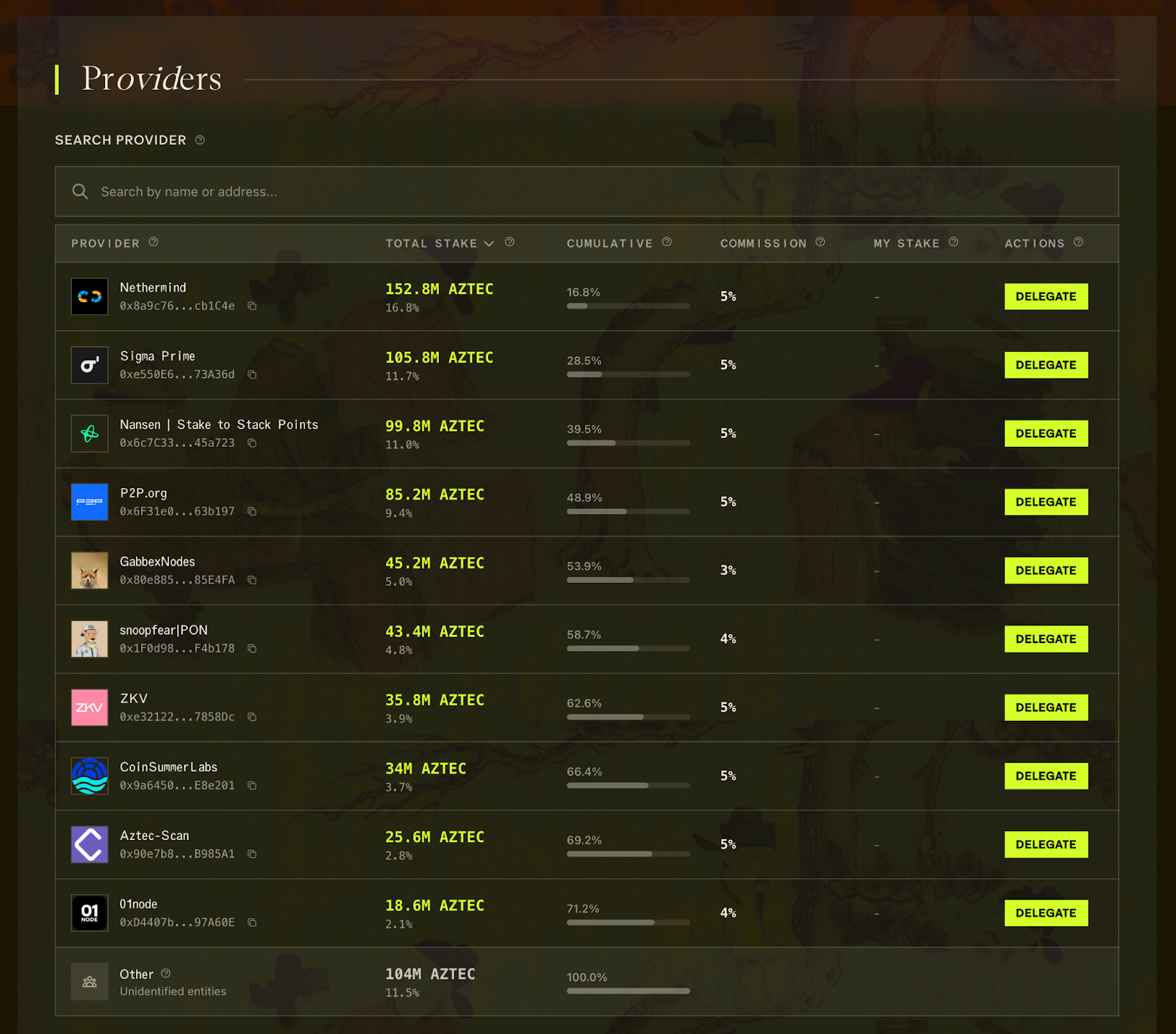

If you don't want to run your own node, you can delegate your stake and share in block rewards through the staking dashboard. Note that fractional staking is not currently supported, so you'll need 200k $AZTEC tokens to stake.

The Ignition Chain launched as a decentralized network from day one. The Aztec Labs and Aztec Foundation teams are not running any sequencers on the network or participating in governance. This is your network.

Anyone who purchased 200k+ tokens in the token sale can stake or delegate their tokens on the staking dashboard. Over 180 operators are now running sequencers, with more joining daily as they enter the sequencer set from the queue. And it's not just sequencers: 50+ provers have joined the permissionless, decentralized prover network to generate block proofs.

These operators span the globe, from solo stakers to data centers, from Australia to Portugal.

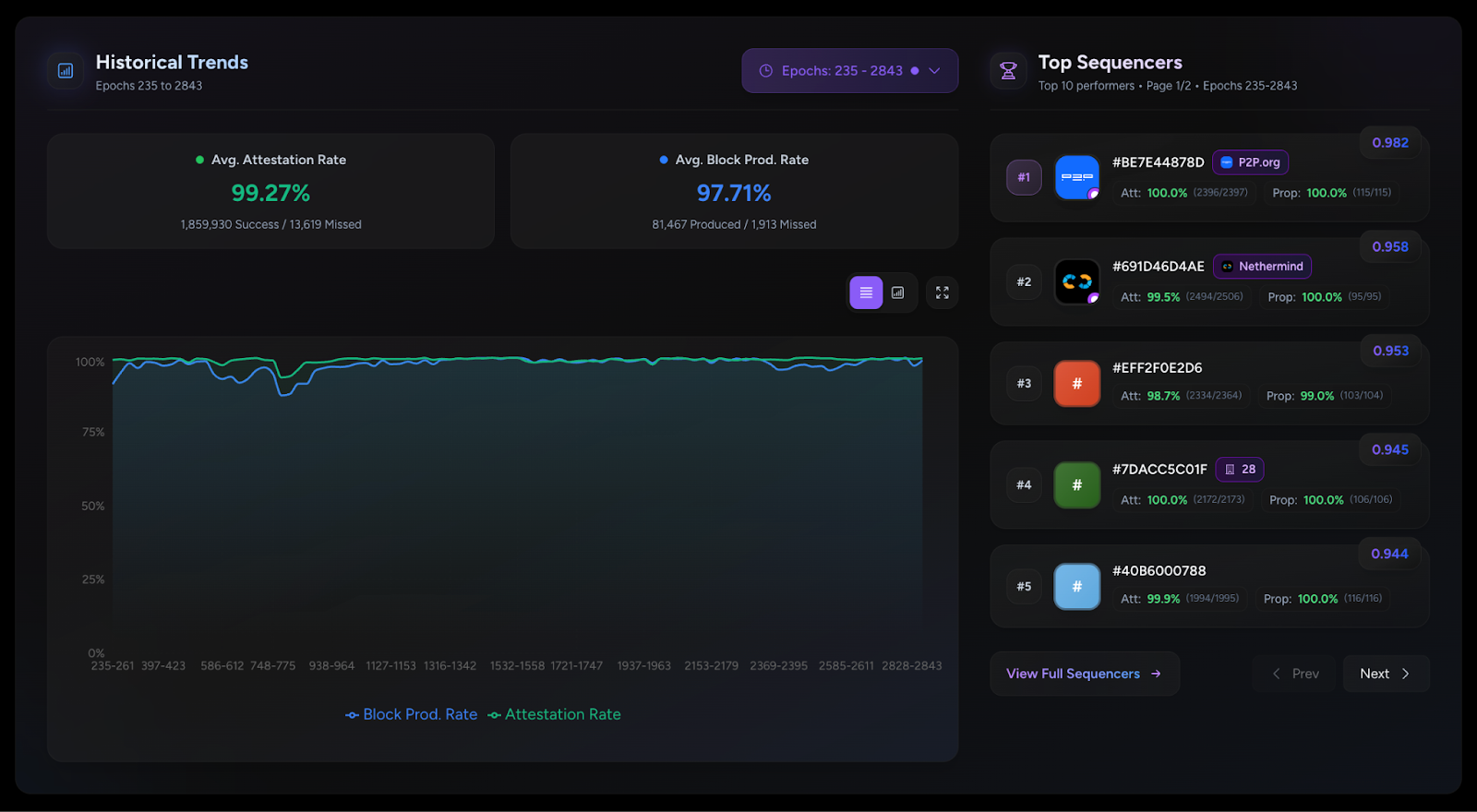

Participating sequencers have maintained a 99%+ attestation rate since network launch, demonstrating strong commitment and network health. Top performers include P2P.org, Nethermind, and ZKV. You can see all block activity and staker performance on the Dashtec dashboard.

On January 26th, 2026, the community passed a governance proposal for TGE. This makes tokens tradable and unlocks the AZTEC/ETH Uniswap pool as early as February 11, 2026. Once that happens, anyone with 200k $AZTEC tokens can run a sequencer or delegate their stake to participate in block rewards.

Here's what you need to run a validator node:

These are accessible specs for most solo stakers. If you've run an Ethereum validator before, you're already well-equipped.

To get started, head to the Aztec docs for step-by-step instructions on setting up your node. You can also join the Discord to connect with other operators, ask questions, and get support from the community. Whether you run your own hardware or delegate to an experienced operator, you're helping build the infrastructure for a privacy-preserving future.

Solo stakers are the beating heart of the Aztec Network. Welcome aboard.

The $AZTEC token sale was the first of its kind, conducted entirely onchain with ~50% of the capital committed coming from the community. Running the sale onchain ensures that you have control over your tokens from day one. As we approach the TGE vote, all token sale participants will be able to vote to unlock their tokens and make them tradable.

Immediately following the $AZTEC token sale, tokens could be withdrawn from the sale website into your personal Token Vault smart contracts on the Ethereum mainnet. Right now, token holders are not able to transfer or trade these tokens.

The TGE is a governance vote that decides when to unlock these tokens. If the vote passes, three things happen:

This decision is entirely in the hands of $AZTEC token holders. The Aztec Labs and Aztec Foundation teams, as well as investors, cannot participate in staking or governance for 12 months, which includes the TGE governance proposal. Team and investor tokens will also remain locked for 1 year and then slowly unlock over the next 2 years.

The proposal for TGE is now live, and sequencers are already signaling to bring the proposal to a vote. Once enough sequencers have signaled, anyone who participated in the token sale will be able to connect their Token Vault contract to the governance dashboard to vote. Note that voting requires you to stake and then unstake, following the regular 15-day process to withdraw tokens.

If the vote passes, TGE can go live as early as February 12, 2026, at 7am UTC. After that time, the first person to call the proposal's execute function triggers TGE.

If you participated in the token sale, you don't have to do anything if you prefer not to vote. If the vote passes, your tokens will become available to trade at TGE. If you want to vote, the process happens in two phases:

Sequencers kick things off by signaling their support. Once 600 out of 1,000 sequencers signal, the proposal moves to a community vote.
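The signaling threshold can be expressed as a simple check. This is a minimal sketch that takes the 600-of-1,000 figures from the post at face value; the constant and function names are hypothetical, and the onchain contracts may count signals differently.

```python
SIGNALS_REQUIRED = 600      # from the post
SIGNALING_SET_SIZE = 1_000  # from the post


def moves_to_community_vote(signals: int) -> bool:
    """Return True once enough sequencers have signaled support."""
    if not 0 <= signals <= SIGNALING_SET_SIZE:
        raise ValueError("signal count must be between 0 and 1,000")
    return signals >= SIGNALS_REQUIRED


for count in (450, 600, 875):
    print(count, moves_to_community_vote(count))
```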

After sequencers create the proposal, all Token Vault holders can vote using the voting governance dashboard. Please note that anyone who wants to vote must stake their tokens, locking their tokens for at least 15 days to ensure the proposal can be executed before the voter exits. Once signaling is complete, the timeline is as follows:

Vote Requirements:

Do I need to participate in the vote? No. If you don't vote, your tokens will become available for trading when TGE goes live.

Can I vote if I have less than 200,000 tokens? Yes! Anyone who participated in the token sale can participate in the TGE vote. You'll need to connect your wallet to the governance dashboard to vote.

Is there a withdrawal period for my tokens after I vote? Yes. If you participate in the vote, you will need to withdraw your tokens after voting. You can initiate a withdrawal immediately after voting, but the standard 15-day withdrawal period applies, which ensures the vote is executed before voters can exit.

If I have over 200,000 tokens, is additional action required to make my tokens tradable after TGE? Yes. If you purchased over 200,000 $AZTEC tokens, you will need to stake your tokens before they become tradable.

What if the vote fails? A new proposal can be submitted. Your tokens remain locked until a successful vote is completed, or the fallback date of November 13, 2026, whichever happens first.

I'm a Genesis sequencer. Does this apply to me? Genesis sequencer tokens cannot be unlocked early. You must wait until November 13, 2026, to withdraw. However, you can still influence the vote by signaling, earn block rewards, and benefit from trading being enabled.

This overview covers the essentials, but the full technical proposal includes contract addresses, code details, and step-by-step instructions for sequencers and advanced users.

Read the complete proposal on the Aztec Forum and join us for the Privacy Rabbit Hole on Discord happening this Thursday, January 22, 2026, at 15:00 UTC.

Follow Aztec on X to stay up to date on the latest developments.

The $AZTEC token sale was conducted entirely onchain to maximize transparency and fair distribution. Next steps for holders are as follows:

The $AZTEC token sale has come to a close. The sale was conducted entirely onchain, and the power is now in your hands. Over 16.7k people participated, with 19,476 ETH raised. A huge thank you to our community and everyone who participated. You all really showed up for privacy. 50% of the capital committed has come from the community of users, testnet operators and creators!

Now that you have your tokens, what’s next? This guide walks you through the next steps leading up to TGE, showing you how to withdraw, stake, and vote with your tokens.

The $AZTEC sale was conducted onchain to ensure that you have control over your own tokens from day 1 (even before tokens become transferable at TGE).

The team has no control over your tokens. You will be self-custodying them in a smart contract known as the Token Vault on the Ethereum mainnet ahead of TGE.

Your Token Vault contract will:

To create and withdraw your tokens to your Token Vault, simply go to the sale website and click on ‘Create Token Vault.’ Any unused ETH from your bids will be returned to your wallet in the process of creating your Token Vault.

If you have 200,000+ tokens, you are eligible to start staking and earning block rewards today.

You can stake by connecting your Token Vault to the staking dashboard and selecting a provider to delegate your stake to. Alternatively, you can run your own sequencer node.

If your Token Vault holds 200,000+ tokens, you must stake in order to withdraw your tokens after TGE. If your Token Vault holds fewer than 200,000 tokens, you can withdraw without any additional steps at TGE.

Fractional staking for anyone with less than 200,000 tokens is not currently supported, but multiple external projects are already working to offer this in the future.

TGE is triggered by an onchain governance vote, which can happen as early as February 11th, 2026.

At TGE, 100% of tokens from the token sale will be transferable. Only token sale participants and genesis sequencers can participate in the TGE vote, and only tokens purchased in the sale will become transferable.

Community members discuss potential votes on the governance forum. If the community agrees, sequencers signal to start a vote with their block proposals. Once enough sequencers agree, the vote goes onchain for eligible token holders.

Voting lasts 7 days, requires participation of at least 100,000,000 $AZTEC tokens, and passes if 2/3 vote yes.

Following a successful yes vote, anyone can execute the proposal after a 7-day execution delay, triggering TGE.
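To make the voting rules concrete, here is a minimal sketch of the pass condition and the earliest execution time. The 7-day voting period, the 100,000,000-token participation requirement, the 2/3 threshold, and the 7-day execution delay come from the post. The rest is assumed for illustration: that participation means yes plus no votes, that "2/3 vote yes" is measured against votes cast, and that the delay starts when voting ends. The names and the start date are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from fractions import Fraction

VOTING_PERIOD = timedelta(days=7)    # from the post
EXECUTION_DELAY = timedelta(days=7)  # from the post
QUORUM_TOKENS = 100_000_000          # from the post
PASS_FRACTION = Fraction(2, 3)       # from the post


@dataclass(frozen=True)
class Tally:
    yes: int  # tokens voting yes
    no: int   # tokens voting no


def vote_passes(tally: Tally) -> bool:
    """Quorum first, then a 2/3 yes share of the votes cast (assumed reading)."""
    total = tally.yes + tally.no
    if total < QUORUM_TOKENS:
        return False
    return Fraction(tally.yes, total) >= PASS_FRACTION


def earliest_execution(vote_start: datetime) -> datetime:
    """Earliest time the proposal could be executed after a successful vote."""
    return vote_start + VOTING_PERIOD + EXECUTION_DELAY


# Hypothetical tally and start date, for illustration only.
print(vote_passes(Tally(yes=80_000_000, no=40_000_000)))
print(earliest_execution(datetime(2026, 1, 1, tzinfo=timezone.utc)))
```

Comparing exact fractions avoids floating-point rounding right at the 2/3 boundary.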

At TGE, the following tokens will be 100% unlocked and available for trading:

Join us Thursday, December 11th at 3 pm UTC for the next Discord Town Hall, an AMA-style session on next steps for token holders. Follow Aztec on X to stay up to date on the latest developments.

We invented the math. We wrote the language. We proved the concept. And now, we’re opening registration and bidding for the $AZTEC token today, starting at 3 pm CET.

The community-first distribution offers a starting floor price based on a $350 million fully diluted valuation (FDV), representing an approximate 75% discount to the implied network valuation (based on the latest valuation from Aztec Labs’ equity financings). The auction also features per-user participation caps to give community members genuine, bid-clearing opportunities to participate daily through the entirety of the auction.

The token auction portal is live at: sale.aztec.network

We’ve taken the community access that made the 2017 ICO era great and made it even better.

For the past several months, we've worked closely with Uniswap Labs as core contributors on the CCA protocol, a set of smart contracts that challenge traditional token distribution mechanisms by prioritizing fair, permissionless, on-chain access for community members and the general public pre-launch. This means that on day 1 of the unlock, 100% of the community's $AZTEC tokens will be unlocked.

This model is values-aligned with our Core team and addresses the current challenges in token distribution, where retail participants often face unfair disadvantages against whales and institutions that hold large amounts of money.

Early contributors and long-standing community members, including genesis sequencers, OG Aztec Connect users, network operators, and community members, can start bidding today, ahead of the public auction, giving those who are whitelisted a head start and early advantage for competitive pricing. Community members can participate by visiting the token sale site to verify eligibility and mint a soul-bound NFT that confirms participation rights.

To read more about Aztec’s fair-access token sale, visit the economic and technical whitepapers and the token regulatory report.

Discount Price Disclaimer: Any reference to a prior valuation or percentage discount is provided solely to inform potential purchasers of how the initial floor price for the token sale was calculated. Equity financing valuations were determined under specific circumstances that are not comparable to this offering. They do not represent, and should not be relied upon as, the current or future market value of the tokens, nor as an indication of potential returns. The price of tokens may fluctuate substantially, the token may lose its value in part or in full, and purchasers should make independent assessments without reliance on past valuations. No representation or warranty is made that any purchaser will achieve profits or recover the purchase price.

Information for Persons in the UK: This communication is directed only at persons outside the UK. Persons in the UK are not permitted to participate in the token sale and must not act upon this communication.

MiCA Disclaimer: Any crypto-asset marketing communications made from this account have not been reviewed or approved by any competent authority in any Member State of the European Union. Aztec Foundation as the offeror of the crypto-asset is solely responsible for the content of such crypto-asset marketing communications. The Aztec MiCA white paper has been published and is available here. The Aztec Foundation can be contacted at hello@aztec.foundation or +41 41 710 16 70. For more information about the Aztec Foundation, visit https://aztec.foundation.

Every time you swap tokens on Uniswap, deposit into a yield vault, or vote in a DAO, you're broadcasting your moves to the world. Anyone can see what you own, where you trade, how much you invest, and when you move your money.

Tracking and analysis tools like Chainalysis and TRM are already extremely advanced, and will only grow stronger with advances in AI in the coming years. As a result, the ‘pseudo-anonymous’ wallets on Ethereum are quickly becoming linked to real-world identities. This is a concern for your personal privacy, and it’s also a major blocker to bringing institutions on-chain with full compliance for their users.

Until now, your only option was to abandon your favorite apps and move to specialized privacy-focused apps or chains with varying degrees of privacy. You'd lose access to the DeFi ecosystem as you know it now, the liquidity you depend on, and the community you're part of.

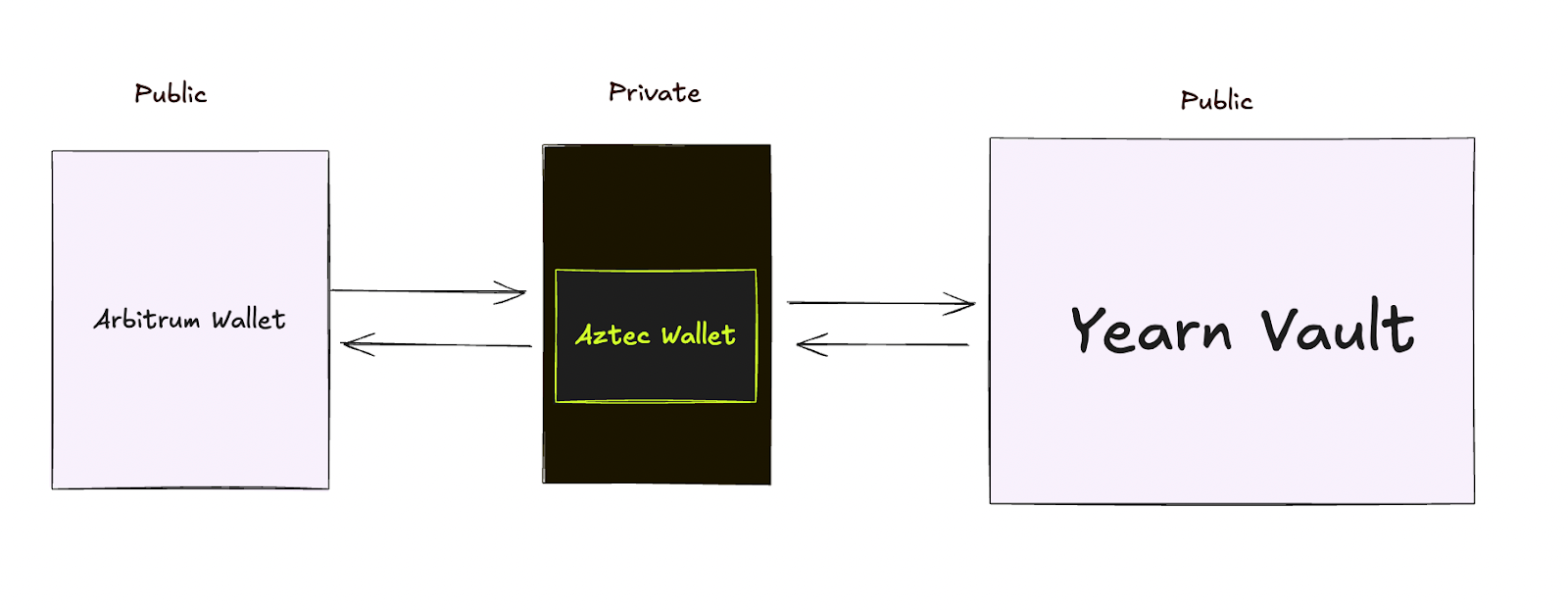

What if you could keep using Uniswap, Aave, Yearn, and every other app you love, but with your identity staying private? No switching chains. Just an incognito mode for your existing on-chain life?

If you’ve been following Aztec for a while, you would be right to think about Aztec Connect here, which was hugely popular with $17M TVL and over 100,000 active wallets, but was sunset in 2024 to focus on bringing a general-purpose privacy network to life.

Read on to learn how you’ll be able to import privacy to any L2, using one of the many privacy-focused bridges that are already built.

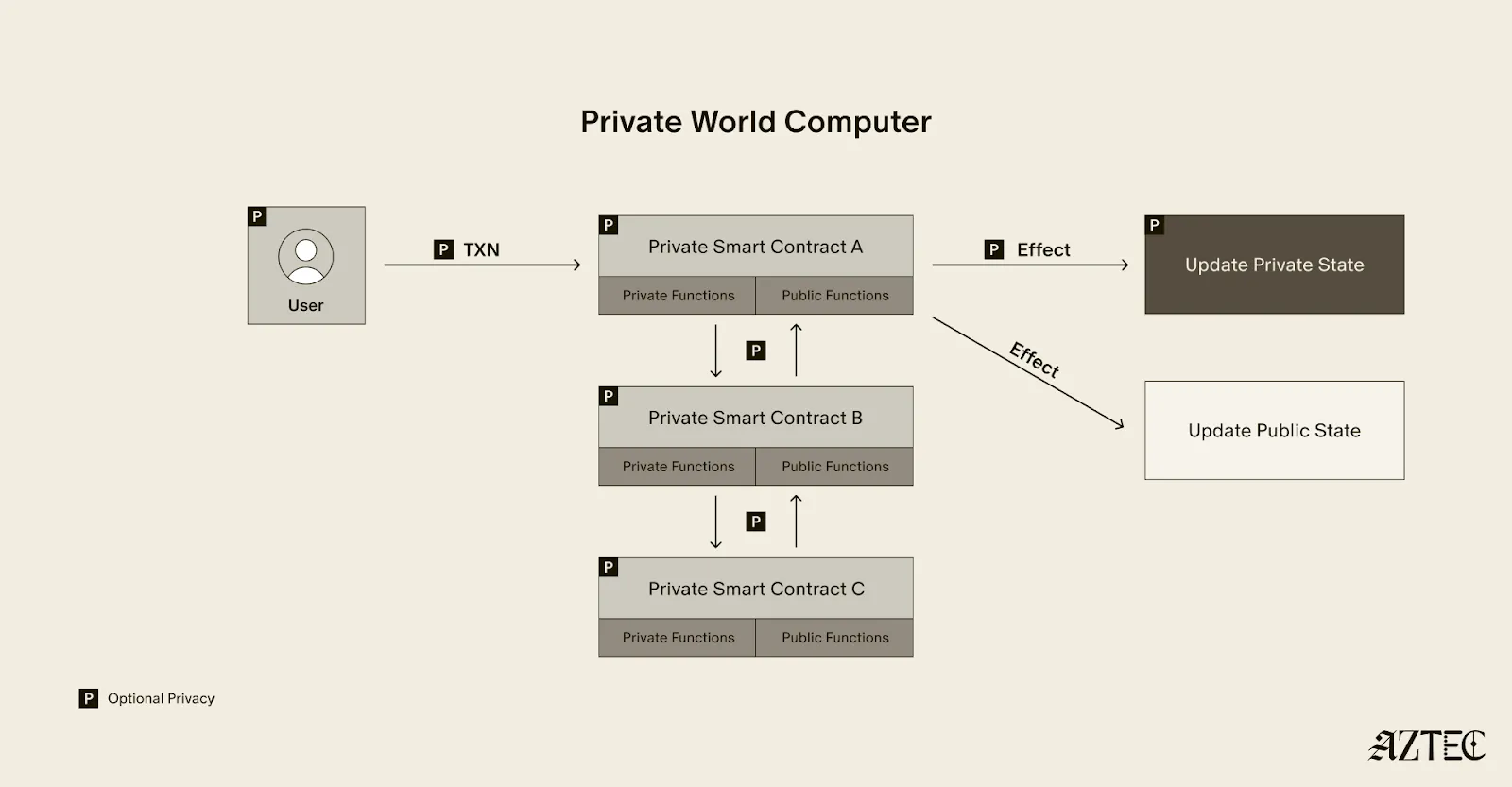

Aztec is a fully decentralized, privacy-preserving L2 on Ethereum. You can think of Aztec as a private world computer with full end-to-end programmable privacy. A private world computer extends Ethereum to add optional privacy at every level, from identity and transactions to the smart contracts themselves.

On Aztec, every wallet is a smart contract that gives users complete control over which aspects they want to make public or keep private.

Aztec is currently in Testnet, but will have multiple privacy-preserving bridges live for its mainnet launch, unlocking a myriad of privacy-preserving features.

Now, several bridges, including Wormhole, TRAIN, and Substance, are connecting Aztec to other chains, adding a privacy layer to the L2s you already use. Think of it as a secure tunnel between you and any DeFi app on Ethereum, Arbitrum, Base, Optimism, or other major chains.

Here's what changes: You can now use any DeFi protocol without revealing your identity. Furthermore, you can also unlock brand new features that take advantage of Aztec’s private smart contracts, like private DAO voting or private compliance checks.

Here's what you can do:

The apps stay where they are. Your liquidity stays where it is. Your community stays where it is. You just get a privacy upgrade.

Let's follow Alice through a real example.

Alice wants to invest $1,000 USDC into a yield vault on Arbitrum without revealing her identity.

Alice moves her funds into Aztec's privacy layer. This could be done in one click directly in the app that she’s already using if the app has integrated one of the bridges. Think of this like dropping a sealed envelope into a secure mailbox. The funds enter a private space where transactions can't be tracked back to her wallet.

Aztec routes Alice's funds to the Yearn vault on Arbitrum. The vault sees a deposit and issues yield-earning tokens. But there's no way to trace those tokens back to Alice's original wallet. Others can see someone made a deposit, but they have no idea who.

The yield tokens arrive in Alice's private Aztec wallet. She can hold them, trade them privately, or eventually withdraw them, without anyone connecting the dots.

Alice is earning yield on Arbitrum using the exact same vault as everyone else. But while other users broadcast their entire investment strategy, Alice's moves remain private.

The difference looks like this:

Without privacy: "Wallet 0x742d...89ab deposited $5,000 into Yearn vault at 2:47 PM"

With Aztec privacy: "Someone deposited funds into Yearn vault" (but who? from where? how much? unknowable).

In the future, we expect apps to directly integrate Aztec, making this experience seamless for you as a user.

While Aztec is still in Testnet, multiple teams are already building bridges right now in preparation for the mainnet launch.

Projects like Substance Labs, Train, and Wormhole are creating connections between Aztec and major chains like Optimism, Unichain, Solana, and Aptos. This means you'll soon have private access to DeFi across nearly every major ecosystem.

Aztec has also launched a dedicated cross-chain catalyst program to support developers with grants to build additional bridges and apps.

L2s have sometimes received criticism for fragmenting liquidity across chains. Aztec is taking a different approach: bringing privacy to the liquidity that already exists. Your funds stay on Arbitrum, Optimism, Base, wherever the deepest pools and best apps already live. Aztec doesn't compete for liquidity; it adds privacy to existing liquidity.

You can access Uniswap's billions in trading volume. You can tap into Aave's massive lending pools. You can deposit into Yearn's established vaults, all without moving liquidity away from where it's most useful.

We’re rolling out a new approach to how we think about L2s on Ethereum. Rather than forcing users to choose between privacy and access to the best DeFi applications, we’re making privacy a feature you can add to any protocol you're already using. As more bridges go live and applications integrate Aztec directly, using DeFi privately will become as simple as clicking a button—no technical knowledge required, no compromise on the apps and liquidity you depend on.

While Aztec is currently in testnet, the infrastructure is rapidly taking shape. With multiple bridge providers building connections to major chains and a dedicated catalyst program supporting developers, the path to mainnet is clear. Soon, you'll be able to protect your privacy while still participating fully in the Ethereum ecosystem.

If you’re a developer and want a full technical breakdown, check out this post. To stay up to date with the latest updates for network operators, join the Aztec Discord and follow Aztec on X.

Privacy has emerged as a major driver for the crypto industry in 2025. We’ve seen the explosion of Zcash, the Ethereum Foundation’s refocusing of PSE, and the launch of Aztec’s testnet with over 24,000 validators powering the network. Many apps have also emerged to bring private transactions to Ethereum and Solana in various ways. Technologies like ZKPassport, which uses Noir to bring identity on-chain privately, have become some of the most talked-about developments in the space.

Underpinning all of these developments is the emerging consensus that without privacy, blockchains will struggle to gain real-world adoption.

Without privacy, institutions can’t bring assets on-chain in a compliant way or conduct complex swaps and trades without revealing their strategies. Without privacy, DeFi remains dominated and controlled by advanced traders who can see all upcoming transactions and manipulate the market. Without privacy, regular people will not want to move their lives on-chain for the entire world to see every detail about their every move.

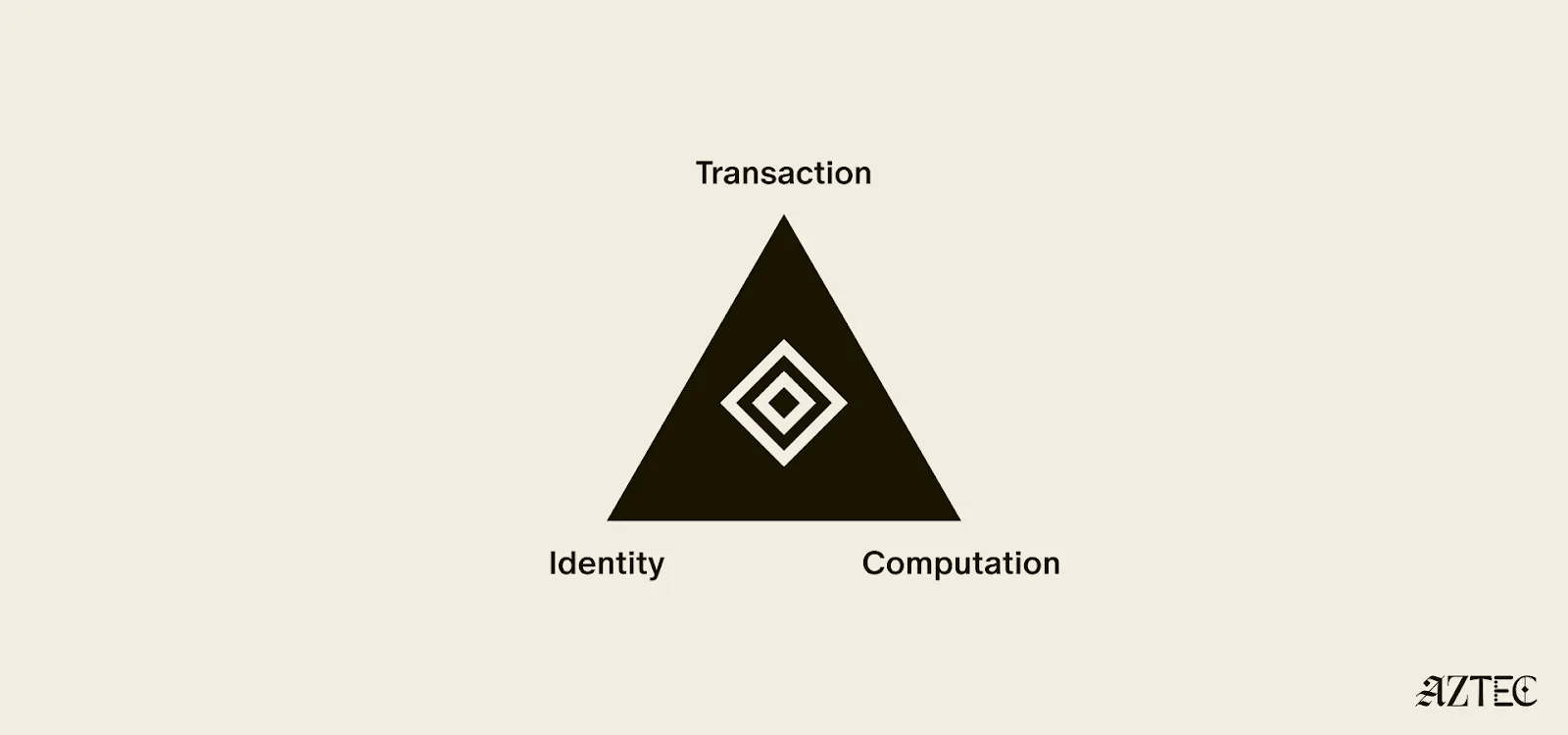

While there's been lots of talk about privacy, few can define it. In this piece we’ll outline the three pillars of privacy and give you a framework for evaluating the privacy claims of any project.

True privacy rests on three essential pillars: transaction privacy, identity privacy, and computational privacy. It is only when we have all three pillars that we see the emergence of a private world computer.

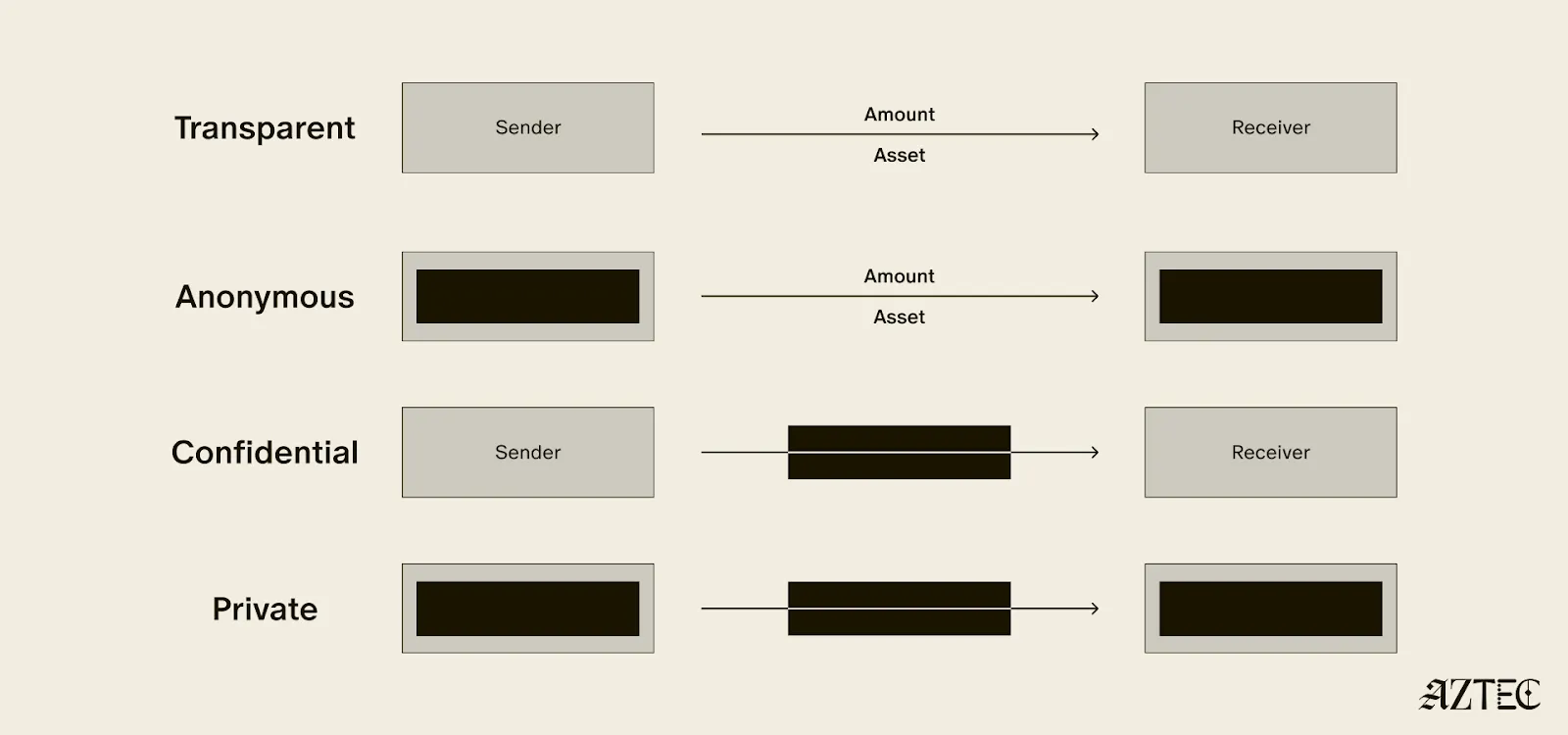

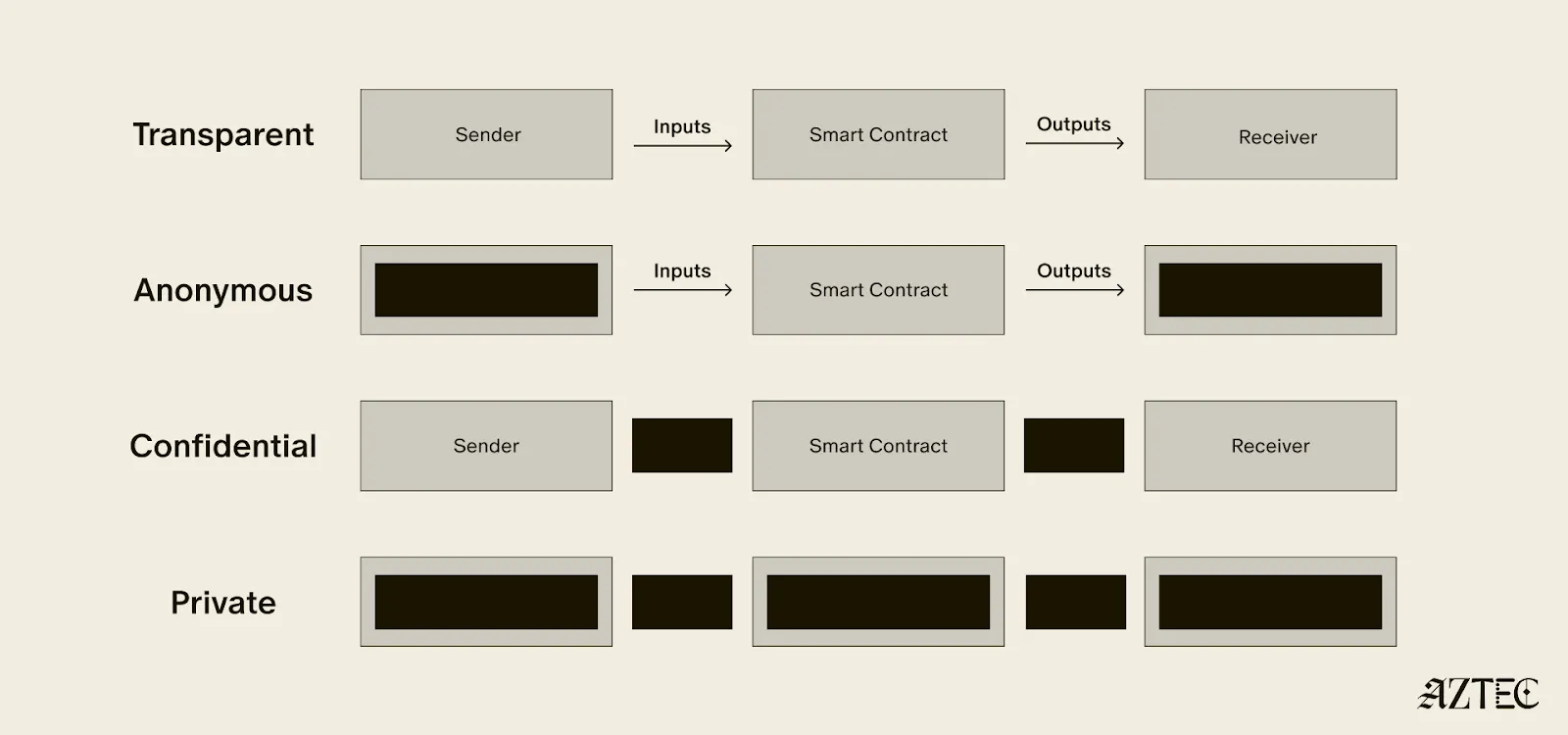

Transaction privacy means that both inputs and outputs are not viewable by anyone other than the intended participants. Inputs include any asset, value, message, or function calldata that is being sent. Outputs include any state changes or transaction effects, or any transaction metadata caused by the transaction. Transaction privacy is most often achieved using a UTXO model (like Zcash or Aztec’s private state tree). If a project has only the option for this pillar, it can be said to be confidential, but not private.

Identity privacy means that the identities of those involved are not viewable by anyone other than the intended participants. This includes addresses or accounts and any information about the identity of the participants, such as tx.origin, msg.sender, or linking one’s private account to public accounts. Identity privacy can be achieved in several ways, including client-side proof generation that keeps all user info on the users’ devices. If a project has only the option for this pillar, it can be said to be anonymous, but not private.

Computation privacy means that any activity that happens is not viewable by anyone other than the intended participants. This includes the contract code itself, function execution, contract address, and full callstack privacy. Additionally, any metadata generated by the transaction can be appropriately obfuscated (for example, transaction effects and events are appropriately padded, and inclusion block numbers fall within appropriate sets). Callstack privacy includes which contracts you call, what functions in those contracts you’ve called, what the results of those functions were, any subsequent functions that will be called after, and what the inputs to the function were. A project must have the option for this pillar to do anything privately other than basic transactions.

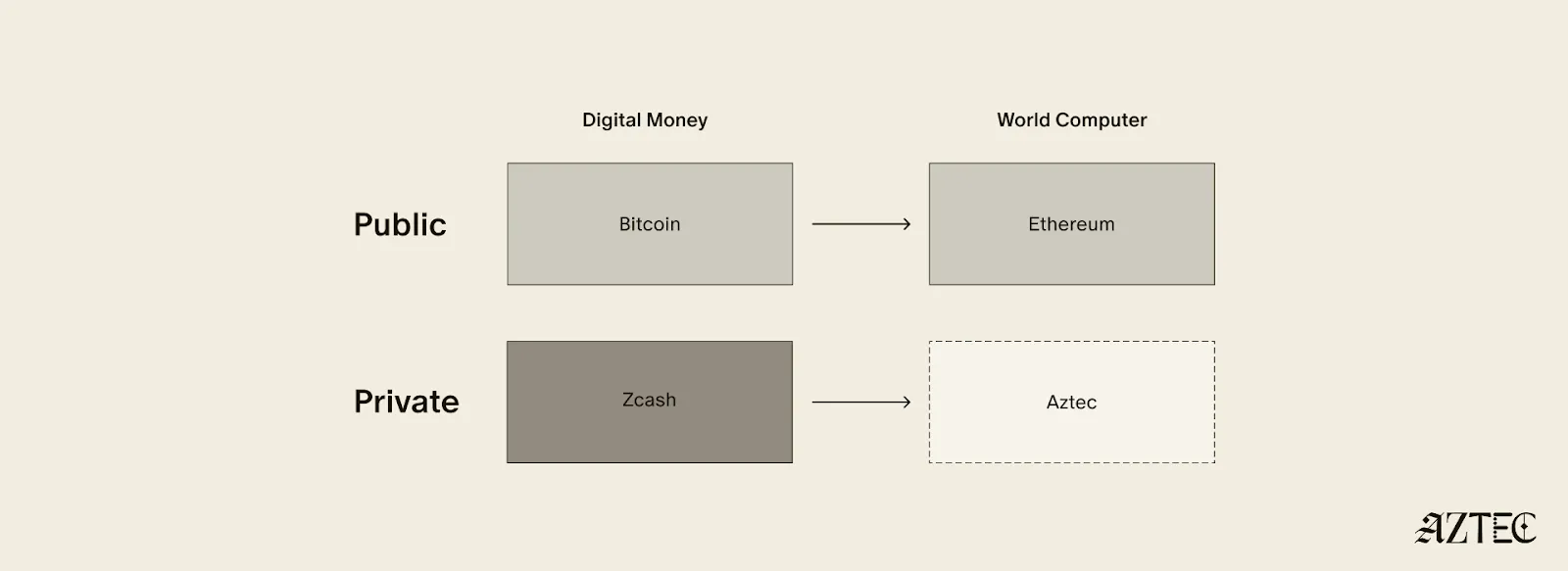

Bitcoin ushered in a new paradigm of digital money. As a permissionless, peer-to-peer currency and store of value, it changed the way value could be sent around the world and who could participate. Ethereum expanded this vision to bring us the world computer, a decentralized, general-purpose blockchain with programmable smart contracts.

Given the limitations of running a transparent blockchain that exposes all user activity, accounts, and assets, it was clear that adding the option to preserve privacy would unlock many benefits (and more closely resemble real cash). But this was a very challenging problem. Zcash was one of the first to extend Bitcoin’s functionality with optional privacy, unlocking a new privacy-preserving UTXO model for transacting privately. As we’ll see below, many of the current privacy-focused projects are working on similar kinds of private digital money for Ethereum or other chains.

Now, Aztec is bringing us the final missing piece: a private world computer.

A private world computer is fully decentralized, programmable, and permissionless like Ethereum and has optional privacy at every level. In other words, Aztec is extending all the functionality of Ethereum with optional transaction, identity, and computational privacy. This is the only approach that enables fully compliant, decentralized applications to be built that preserve user privacy, a new design space that we see as ushering in the next Renaissance for the space.

Private digital money emerges when you have the first two privacy pillars covered - transactions and identity - but you don’t have the third - computation. Almost all projects today that claim some level of privacy are working on private digital money. This includes everything from privacy pools on Ethereum and L2s to newly emerging payment L1s like Tempo and Arc that are developing various degrees of transaction privacy.

When it comes to digital money, privacy exists on a spectrum. If your identity is hidden but your transactions are visible, that's what we call anonymous. If your transactions are hidden but your identity is known, that's confidential. And when both your identity and transactions are protected, that's true privacy. Projects are working on many different approaches to implement this, from PSE to Payy using Noir, the zkDSL built to make it intuitive to build zk applications using familiar Rust-like syntax.

Private digital money is designed to make payments private, but any interaction with more complex smart contracts than a straightforward payment transaction is fully exposed.

What if we also want to build decentralized private apps using smart contracts (usually multiple that talk to each other)? For this, you need all three privacy pillars: transaction, identity, and compute.

If you have these three pillars covered and you have decentralization, you have built a private world computer. Without decentralization, you are vulnerable to censorship, privileged backdoors and inevitable centralized control that can compromise privacy guarantees.
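The framework above can be turned into a small checklist for evaluating a project's privacy claims. This is a minimal sketch: the labels "confidential", "anonymous", "private digital money", and "private world computer" come from the article, while the class, the function, and the remaining labels are hypothetical names for cases the article does not name.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrivacyProfile:
    transaction: bool    # pillar 1: inputs and outputs hidden
    identity: bool       # pillar 2: participants hidden
    computation: bool    # pillar 3: code, execution, and call stack hidden
    decentralized: bool  # permissionless network with no privileged operator


def classify(p: PrivacyProfile) -> str:
    """Map a set of privacy pillars to the article's terminology."""
    if p.transaction and p.identity and p.computation:
        if p.decentralized:
            return "private world computer"
        return "three pillars, not decentralized"  # label assumed, not from the article
    if p.transaction and p.identity:
        return "private digital money"
    if p.transaction and not p.computation:
        return "confidential"
    if p.identity and not p.computation:
        return "anonymous"
    if not p.computation:
        return "transparent"  # label assumed, not from the article
    return "not covered by the framework"  # e.g. computation privacy alone


print(classify(PrivacyProfile(True, True, False, True)))  # private digital money
print(classify(PrivacyProfile(True, True, True, True)))   # private world computer
```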

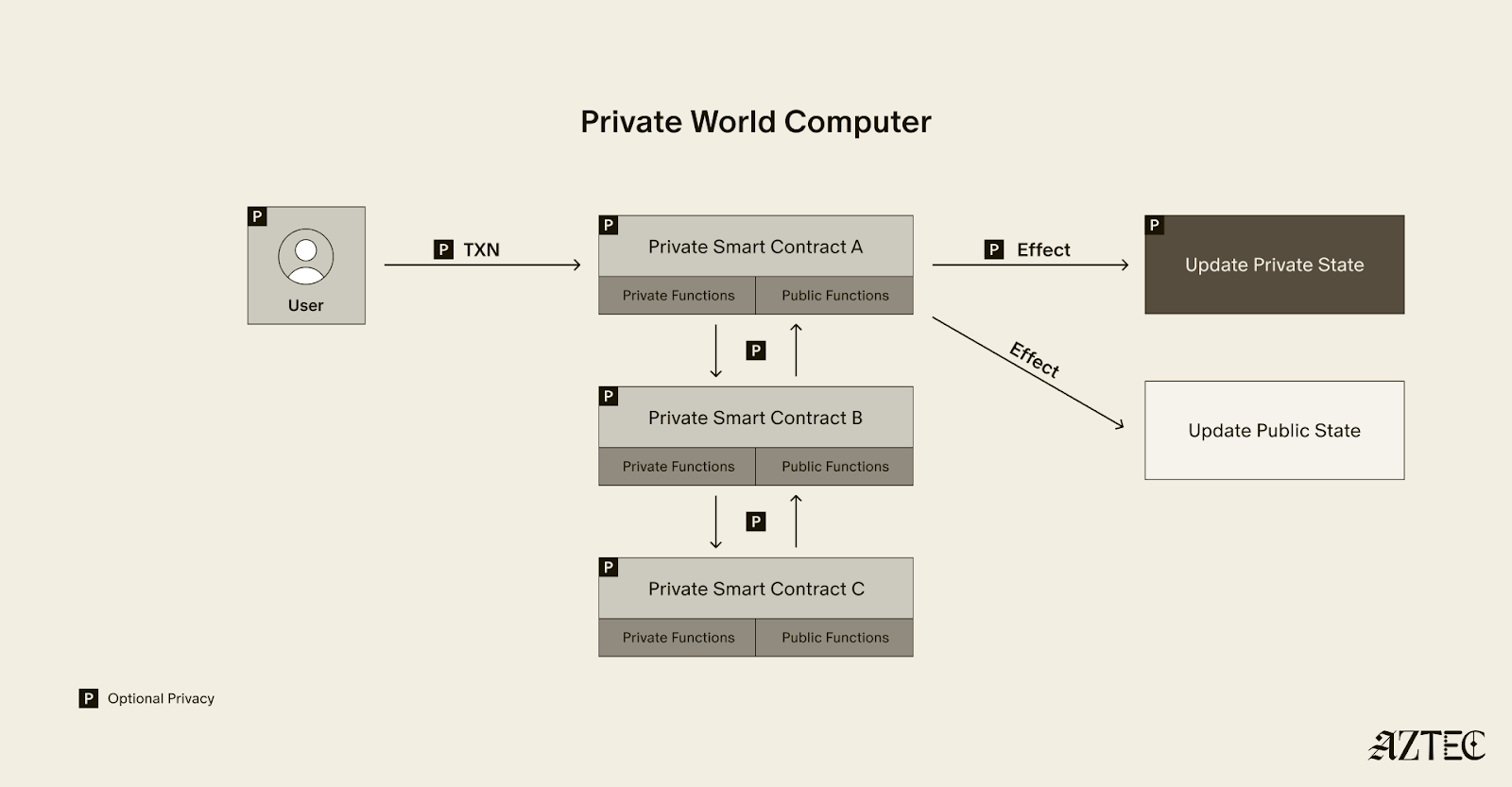

What exactly is a private world computer? A private world computer extends all the functionality of Ethereum with optional privacy at every level, so developers can easily control which aspects they want public or private and users can selectively disclose information. With Aztec, developers can build apps with optional transaction, identity, and compute privacy on a fully decentralized network. Below, we’ll break down the main components of a private world computer.

A private world computer is powered by private smart contracts. Private smart contracts have fully optional privacy and also enable seamless public and private function interaction.

Private smart contracts simply extend the functionality of regular smart contracts with added privacy.

As a developer, you can easily designate which functions you want to keep private and which you want to make public. For example, a voting app might allow users to privately cast votes and publicly display the result. Private smart contracts can also interact privately with other smart contracts, without needing to make it public which contracts have interacted.

Transaction: Aztec supports the optionality for fully private inputs, including messages, state, and function calldata. Private state is updated via a private UTXO state tree.

Identity: Using client-side proofs and function execution, Aztec can optionally keep all user info private, including tx.origin and msg.sender for transactions.

Computation: The contract code itself, function execution, and call stack can all be kept private. This includes which contracts you call, what functions in those contracts you’ve called, what the results of those functions were, and what the inputs to the function were.

A decentralized network must be made up of a permissionless network of operators who run the network and decide on upgrades. Aztec is run by a decentralized network of node operators who propose and attest to transactions. Rollup proofs on Aztec are also run by a decentralized prover network that can permissionlessly submit proofs and participate in block rewards. Finally, the Aztec network is governed by the sequencers, who propose, signal, vote, and execute network upgrades.

A private world computer enables the creation of DeFi applications where accounts, transactions, order books, and swaps remain private. Users can protect their trading strategies and positions from public view, preventing front-running and maintaining competitive advantages. Additionally, users can bridge privately into cross-chain DeFi applications, allowing them to participate in DeFi across multiple blockchains while keeping their identity private despite being on an existing transparent blockchain.

This technology makes it possible to bring institutional trading activity on-chain while maintaining the privacy that traditional finance requires. Institutions can privately trade with other institutions globally, without having to touch public markets, enjoying the benefits of blockchain technology such as fast settlement and reduced counterparty risk, without exposing their trading intentions or volumes to the broader market.

Organizations can bring client accounts and assets on-chain while maintaining full compliance. This infrastructure protects on-chain asset trading and settlement strategies, ensuring that sophisticated financial operations remain private. A private world computer also supports private stablecoin issuance and redemption, allowing financial institutions to manage digital currency operations without revealing sensitive business information.

Users have granular control over their privacy settings, allowing them to fine-tune privacy levels for their on-chain identity according to their specific needs. The system enables selective disclosure of on-chain activity, meaning users can choose to reveal certain transactions or holdings to regulators, auditors, or business partners while keeping other information private, meeting compliance requirements.

The shift from transparent blockchains to privacy-preserving infrastructure is the foundation for bringing the next billion users on-chain. Whether you're a developer building the future of private DeFi, an institution exploring compliant on-chain solutions, or simply someone who believes privacy is a fundamental right, now is the time to get involved.

Follow Aztec on X to stay updated on the latest developments in private smart contracts and decentralized privacy technology. Ready to contribute to the network? Run a node and help power the private world computer.

The next Renaissance is here, and it’s being powered by the private world computer.

After eight years of solving impossible problems, the next renaissance is here.

We’re at a major inflection point, with both our tech and our builder community going through growth spurts. The purpose of this rebrand is simple: to draw attention to our full-stack privacy-native network and to elevate the rich community of builders who are creating a thriving ecosystem around it.

For eight years, we’ve been obsessed with solving impossible challenges. We invented new cryptography (Plonk), created an intuitive programming language (Noir), and built the first decentralized network on Ethereum where privacy is native rather than an afterthought.

It wasn't easy. But now, we're finally bringing that powerful network to life. Testnet is live with thousands of active users and projects that were technically impossible before Aztec.

Our community evolution mirrors our technical progress. What started as an intentionally small, highly engaged group of cracked developers is now welcoming waves of developers eager to build applications that mainstream users actually want and need.

A brand is more than aesthetics—it's a mental model that makes Aztec's spirit tangible.

Renaissance means "rebirth"—and that's exactly what happens when developers gain access to privacy-first infrastructure. We're witnessing the emergence of entirely new application categories, business models, and user experiences.

The faces of this renaissance are the builders we serve: the entrepreneurs building privacy-preserving DeFi, the activists building identity systems that protect user privacy, the enterprise architects tokenizing real-world assets, and the game developers creating experiences with hidden information.

This next renaissance isn't just about technology—it's about the ethos behind the build. These aren't just our values. They're the shared DNA of every builder pushing the boundaries of what's possible on Aztec.

Agency: It’s what everyone deserves, and very few truly have: the ability to choose and take action for ourselves. On the Aztec Network, agency is native.

Genius: That rare cocktail of existential thirst, extraordinary brilliance, and mind-bending creation. It’s the fire that fuels our great leaps forward.

Integrity: It’s the respect and compassion we show each other. Our commitment to attacking the hardest problems first, and the excellence we demand of any solution.

Obsession: That highly concentrated insanity, extreme doggedness, and insatiable devotion that makes us tick. We believe in a different future—and we can make it happen, together.

Just as our technology bridges different eras of cryptographic innovation, our new visual identity draws from multiple periods of human creativity and technological advancement.

Our new wordmark embodies the diversity of our community and the permissionless nature of our network. Each letter was custom-drawn to reflect different pivotal moments in human communication and technological progress.

Together, these letters tell the story of human innovation: each era building on the last, each breakthrough enabling the next renaissance. And now, we're building the infrastructure for the one that's coming.

We evolved our original icon to reflect this new chapter while honoring our foundation. The layered diamond structure tells the story:

The architecture echoes a central plaza—the Roman forum, the Greek agora, the English commons, the American town square—places where people gather, exchange ideas, build relationships, and shape culture. It's a fitting symbol for the infrastructure enabling the next leap in human coordination and creativity.

From the Mughal and Edo periods to the Flemish and Italian Renaissance, our brand imagery draws from different cultures and eras of extraordinary human flourishing—periods when science, commerce, culture and technology converged to create unprecedented leaps forward. These visuals reflect both the universal nature of the Renaissance and the global reach of our network.

But we're not just celebrating the past. We're creating the future: the infrastructure for humanity's next great creative and technological awakening, powered by privacy-native blockchain technology.

Join us to ask questions, learn more and dive into the lore.

Join Our Discord Town Hall. September 4th at 8 AM PT, then every Thursday at 7 AM PT. Come hear directly from our team, ask questions, and connect with other builders who are shaping the future of privacy-first applications.

Take your stance on privacy. Visit the privacy glyph generator to create your custom profile pic and build this new world with us.

Stay Connected. Visit the new website, and to stay up-to-date on all things Noir and Aztec, make sure you’re following along on X.

The next renaissance is what you build on Aztec—and we can't wait to see what you'll create.

Aztec’s Public Testnet launched in May 2025.

Since then, we’ve been obsessively working toward our ultimate goal: launching the first fully decentralized privacy-preserving layer-2 (L2) network on Ethereum. This effort has involved a team of over 70 people, including world-renowned cryptographers and builders, with extensive collaboration from the Aztec community.

To make something private is one thing, but to also make it decentralized is another. Privacy is only half of the story. Every component of the Aztec Network will be decentralized from day one because decentralization is the foundation that allows privacy to be enforced by code, not by trust. This includes sequencers, which order and validate transactions; provers, which create privacy-preserving cryptographic proofs; and settlement on Ethereum, which finalizes transactions on the secure Ethereum mainnet to ensure trust and immutability.

Strong progress is being made by the community toward full decentralization. The Aztec Network now includes nearly 1,000 sequencers in its validator set, with 15,000 nodes spread across more than 50 countries on six continents. With this globally distributed network in place, the Aztec Network is ready for users to stress test and challenge its resilience.

We're now entering a new phase: the Adversarial Testnet. This stage will test the resilience of the Aztec Testnet and its decentralization mechanisms.

The Adversarial Testnet introduces two key features: slashing, which penalizes validators for malicious or negligent behavior in Proof-of-Stake (PoS) networks, and a fully decentralized governance mechanism for protocol upgrades.

This phase will also simulate network attacks to test the network’s ability to recover independently, ensuring it could continue to operate even if the core team and servers disappeared (see more on Vitalik’s “walkaway test” here). It also opens the validator set to more people, who can use ZKPassport, a private identity verification app, to verify their identity online.

The Aztec Network testnet is decentralized, run by a permissionless network of sequencers.

The slashing upgrade tests one of the most fundamental mechanisms for removing inactive or malicious sequencers from the validator set, an essential step toward strengthening decentralization.

Similar to Ethereum, on the Aztec Network, any inactive or malicious sequencers will be slashed and removed from the validator set. Sequencers will be able to slash any validator that makes no attestations for an entire epoch or proposes an invalid block.

Three slashes will result in being removed from the validator set. Sequencers may rejoin the validator set at any time after getting slashed; they just need to rejoin the queue.
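The slashing rules above can be sketched as a small bookkeeping model. This is a minimal illustration, not the protocol's implementation: the class and function names are hypothetical, and resetting the slash count when a sequencer rejoins is an assumption the text does not confirm.

```python
from collections import deque
from dataclasses import dataclass, field

MAX_SLASHES = 3  # from the text: three slashes remove a sequencer from the set


def is_slashable(attestations_in_epoch: int, proposed_invalid_block: bool) -> bool:
    """Slashable if no attestations were made for a whole epoch, or an invalid block was proposed."""
    return attestations_in_epoch == 0 or proposed_invalid_block


@dataclass
class ValidatorSet:
    active: dict = field(default_factory=dict)   # sequencer id -> slash count
    queue: deque = field(default_factory=deque)  # sequencers waiting to (re)join

    def join_queue(self, seq_id: str) -> None:
        """Anyone not already active or queued may line up to join."""
        if seq_id not in self.active and seq_id not in self.queue:
            self.queue.append(seq_id)

    def admit_next(self):
        """Move the sequencer at the head of the queue into the active set."""
        if not self.queue:
            return None
        seq_id = self.queue.popleft()
        self.active[seq_id] = 0  # assumption: slash count starts fresh on (re)entry
        return seq_id

    def slash(self, seq_id: str) -> bool:
        """Record one slash; return True if the sequencer was removed from the set."""
        if seq_id not in self.active:
            return False
        self.active[seq_id] += 1
        if self.active[seq_id] >= MAX_SLASHES:
            del self.active[seq_id]
            return True
        return False
```

A removed sequencer simply calls `join_queue` again and waits for `admit_next`, mirroring the "rejoin the queue" step described above.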

In addition to testing network resilience when validators go offline and evaluating the slashing mechanisms, the Adversarial Testnet will also assess the robustness of the network’s decentralized governance during protocol upgrades.

Adversarial Testnet introduces changes to Aztec Network’s governance system.

Sequencers now have an even more central role, as they are the sole actors permitted to deposit assets into the Governance contract.

After the upgrade is defined and the proposed contracts are deployed, sequencers will vote on and implement the upgrade independently, without any involvement from Aztec Labs and/or the Aztec Foundation.

Starting today, you can join the Adversarial Testnet to help battle-test Aztec’s decentralization and security. Anyone can compete in six categories for a chance to win exclusive Aztec swag, be featured on the Aztec X account, and earn a DappNode. The six challenge categories include:

Performance will be tracked using Dashtec, a community-built dashboard that pulls data from publicly available sources. Dashtec displays a weighted score of your validator performance, which may be used to evaluate challenges and award prizes.

The dashboard offers detailed insights into sequencer performance through a stunning UI, allowing users to see exactly who is in the current validator set and providing a block-by-block view of every action taken by sequencers.

To join the validator set and start tracking your performance, click here. Join us on Thursday, July 31, 2025, at 4 pm CET on Discord for a Town Hall to hear more about the challenges and prizes. Who knows, we might even drop some alpha.

To stay up-to-date on all things Noir and Aztec, make sure you’re following along on X.

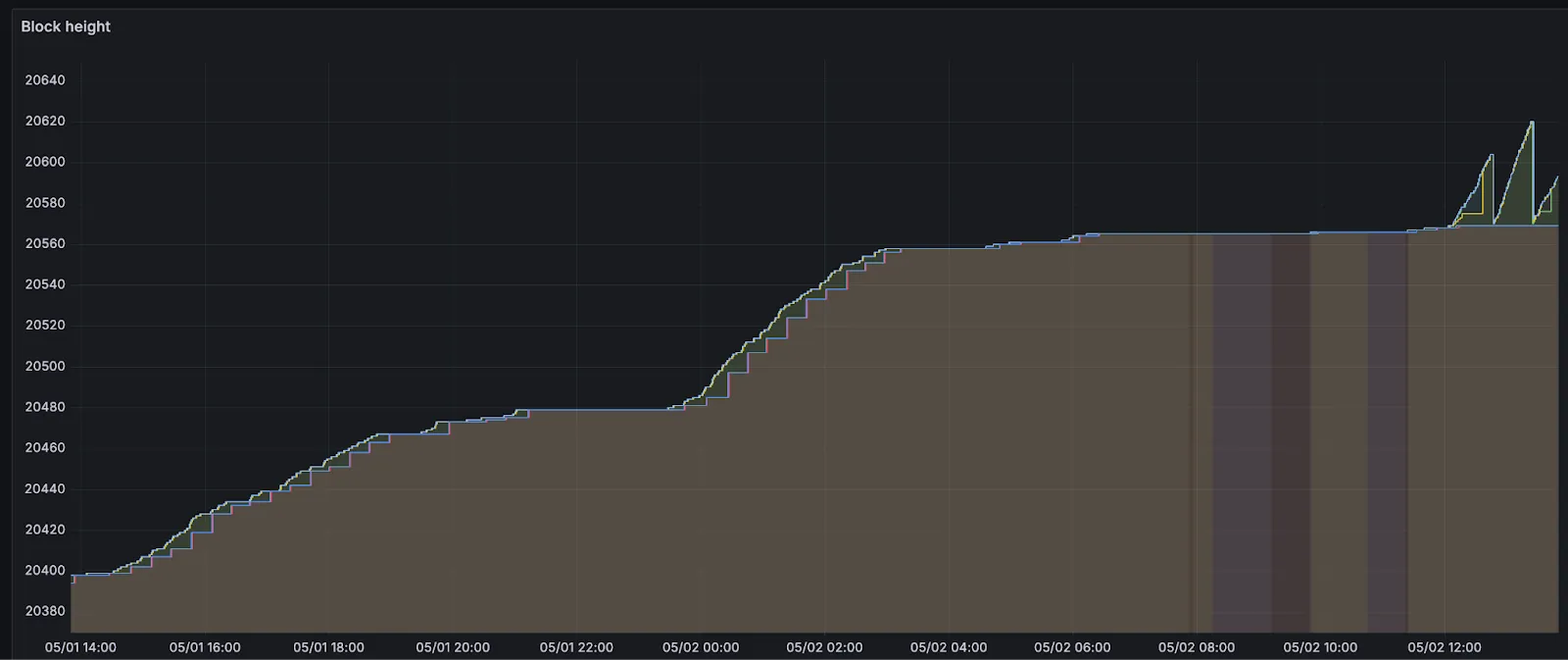

On May 1st, 2025, Aztec Public Testnet went live.

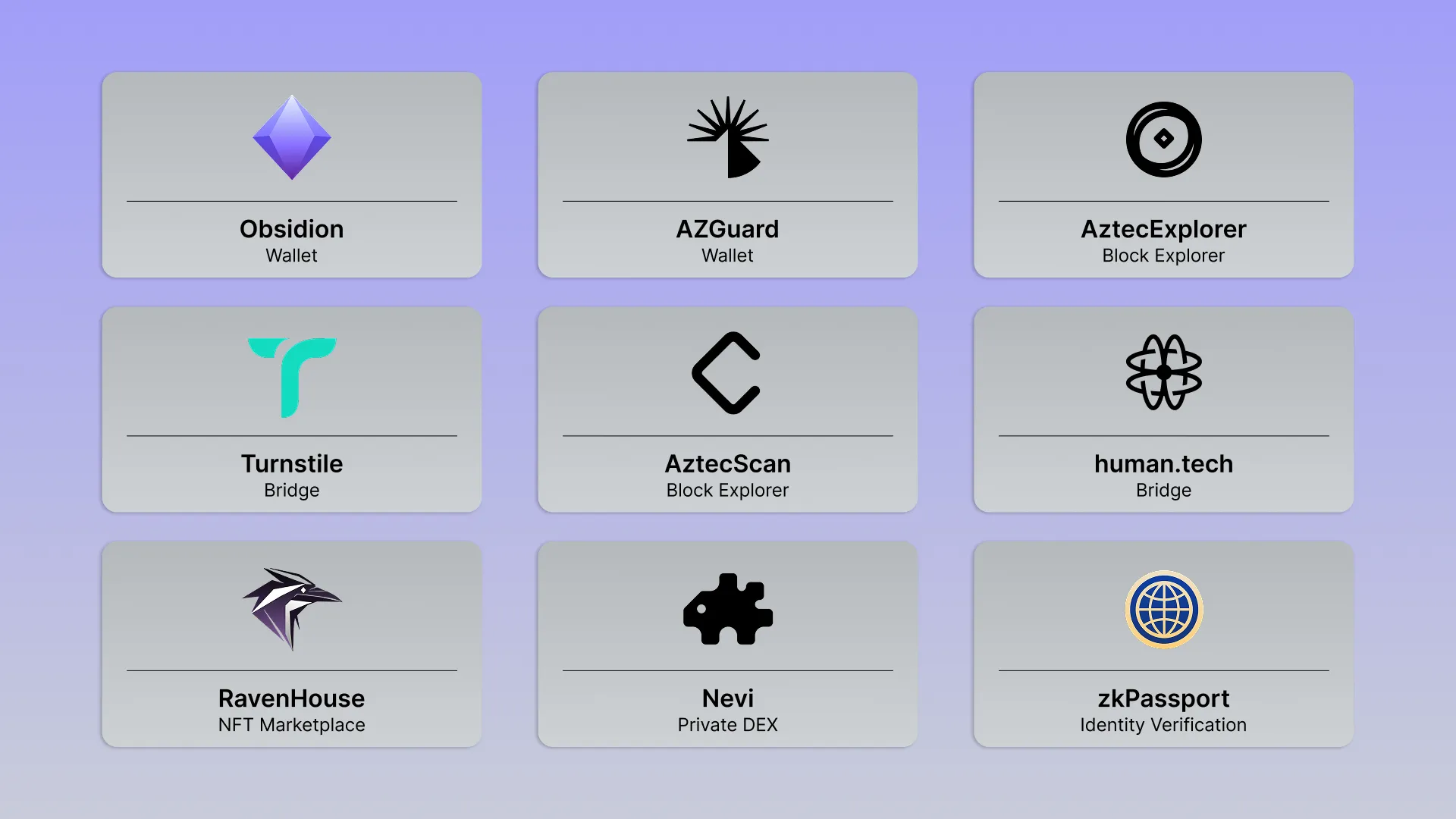

Within the first 24 hours, over 20k users visited the Aztec Playground and started to send transactions on testnet. Additionally, 10 apps launched live on the testnet, including wallets, block explorers, and private DeFi and NFT marketplaces. Launching a decentralized testnet poses significant challenges, and we’re proud that the network has continued to run despite high levels of congestion that led to slow block production for a period of time.

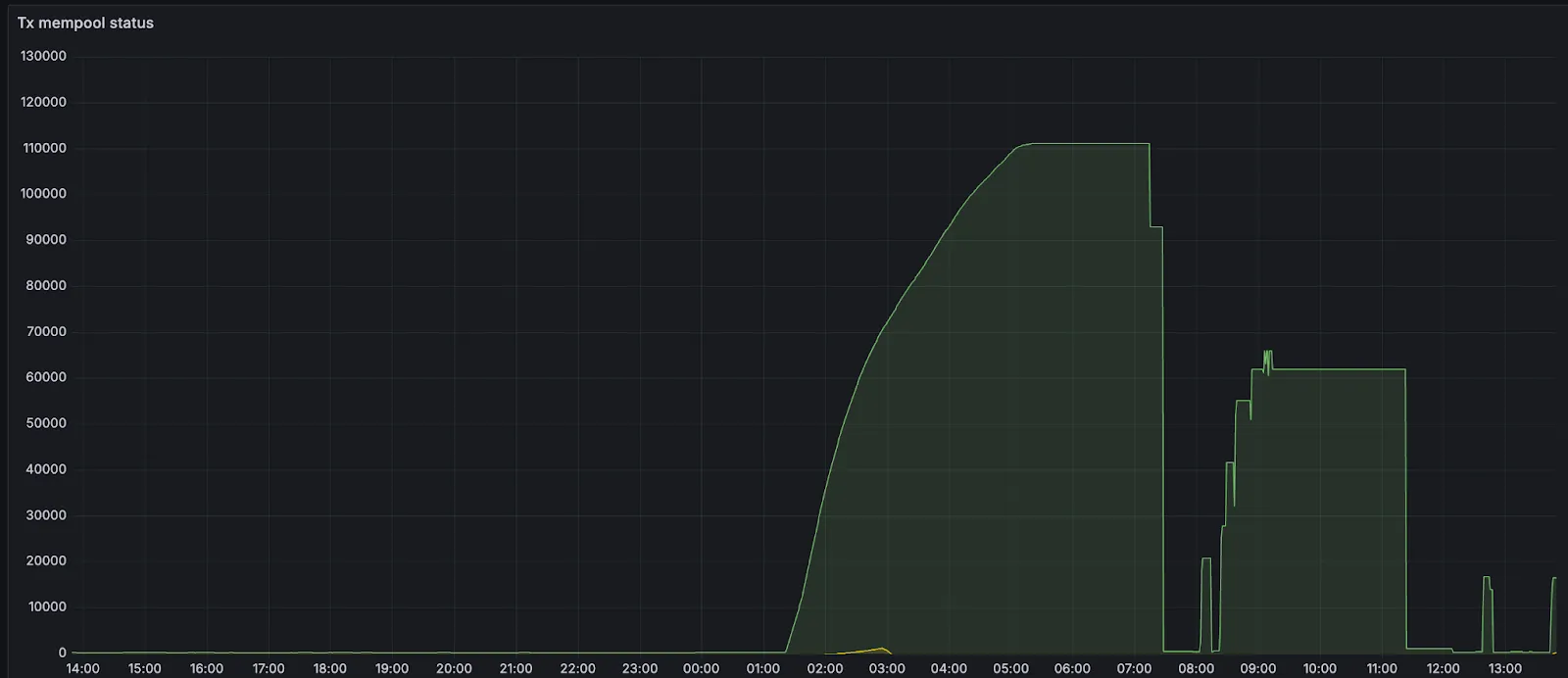

Around 6 hours after announcing the network launch, more than 150 sequencers had joined the validator set to sequence transactions and propose blocks for the network. 500+ additional full nodes were spun up by node operators participating in our Discord community. These sequencers were flooded with over 5k transactions before block production slowed. Let’s dive into why block production slowed down.

On Aztec, an epoch is a group of 32 blocks that are rolled up for settlement on Ethereum. Leading up to the slowdown of block production, there were entire epochs with full blocks (8 transactions, or 0.2 TPS) in every slot. The sequencers were building blocks and absorbing the demand for blockspace from users of the Aztec Playground, and there was a buildup of hundreds of pending transactions in sequencer mempools.

Issues arose when these transactions started to exceed the mempool size, which was configured to hold only 100 MB, or about 700 transactions.
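As a rough sanity check, the figures quoted above can be combined in a few lines. This is back-of-the-envelope arithmetic only: the implied slot time and per-transaction size are derived from the rounded values in this post (assuming 1 MB = 10^6 bytes), not taken from protocol constants, and the drain estimate assumes every block is full and no new transactions arrive.

```python
BLOCKS_PER_EPOCH = 32        # from the text
TXS_PER_FULL_BLOCK = 8       # from the text
THROTTLED_TPS = 0.2          # from the text
MEMPOOL_BYTES = 100 * 10**6  # 100 MB, assuming decimal megabytes
MEMPOOL_TXS = 700            # "about 700 transactions"

# Transactions settled by one epoch of completely full blocks.
txs_per_full_epoch = BLOCKS_PER_EPOCH * TXS_PER_FULL_BLOCK      # 256

# Slot time implied by 8 transactions per block at 0.2 TPS.
implied_slot_seconds = TXS_PER_FULL_BLOCK / THROTTLED_TPS       # about 40 s

# Average mempool footprint of one pending transaction.
implied_bytes_per_tx = MEMPOOL_BYTES / MEMPOOL_TXS              # about 143 kB

# Epochs of full blocks needed to clear a full mempool.
epochs_to_drain_full_mempool = MEMPOOL_TXS / txs_per_full_epoch  # about 2.7

print(txs_per_full_epoch, implied_slot_seconds,
      round(implied_bytes_per_tx), round(epochs_to_drain_full_mempool, 1))
```

The takeaway is that a full mempool held fewer than three epochs' worth of transactions, so a few minutes of sustained demand above 0.2 TPS was enough to hit the limit.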

As many new validators were brought through the funnel and started to come online, the mempools of existing validators (already full at 700 transactions) and new ones (at 0 transactions) diverged significantly. When earlier validators proposed blocks, newer validators didn't have the transactions and could not attest to blocks because the request/response protocol wasn't aggressive enough. When newer validators made proposals, earlier validators didn't have transactions (their mempools were full), so they could not attest to blocks.

New validators then started to build up pending transactions. When validators with full mempools requested missing transactions from peers, they would evict existing transactions from their mempools (mempool is at max memory) based on priority fee. All transactions had default fee settings, so validators were randomly ejecting transactions and were not doing so in lockstep (different validators ejected different transactions). For a little over an hour, the mempools diverged significantly from each other, and block production slowed down to about 20% of the expected rate.

In order to stop the mempool from ejecting transactions, the p2p mempool size was increased. By increasing the mempool size, the likelihood of needing to evict transactions that might soon appear in proposals is reduced. This increases the chances that sequencers already have the necessary transactions locally when they receive a block proposal. As a result, more validators are able to attest to proposals, allowing blocks to be finalized more reliably. Once blocks are included on L1, their transactions are evicted from the mempool. So over time, as more blocks are finalized and transactions are mined, the mempool naturally shrinks and the network will recover on its own.
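The divergence described above, and why a larger mempool avoids it, can be reproduced with a toy simulation. This is a simplified sketch under stated assumptions: the function names are hypothetical, every transaction carries the same fee (as in the incident), and ties are broken by an independent random choice on each node, which stands in for whatever arbitrary ordering real nodes used.

```python
import random


def run_node(tx_stream, capacity, rng):
    """Fee-priority mempool: when over capacity, evict a lowest-fee transaction.

    Ties are broken at random, so nodes with equal-fee transactions
    do not evict in lockstep.
    """
    pool = {}
    for tx_id, fee in tx_stream:
        pool[tx_id] = fee
        if len(pool) > capacity:
            lowest = min(pool.values())
            candidates = [t for t, f in pool.items() if f == lowest]
            del pool[rng.choice(candidates)]
    return set(pool)


def mempool_overlap(capacity, n_txs=1000, seed=0):
    """Jaccard similarity between two nodes' mempools after seeing the same transactions."""
    txs = [(i, 1) for i in range(n_txs)]  # every tx uses the same default fee
    a = run_node(txs, capacity, random.Random(seed))
    b = run_node(txs, capacity, random.Random(seed + 1))
    return len(a & b) / len(a | b)


if __name__ == "__main__":
    print("capacity 700: ", mempool_overlap(700))   # below 1.0: the pools diverge
    print("capacity 2000:", mempool_overlap(2000))  # 1.0: nothing is ever evicted
```

When capacity exceeds the number of pending transactions, no evictions occur and every node holds the same set, which is the effect the mempool size increase relied on.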

If you are interested in running a sequencer node, visit the sequencer page. Stay up-to-date on Noir and Aztec by following Noir and Aztec on X.

When Aztec first got started, the world of zero-knowledge proving systems and applications was in its infancy. There was no PLONK, no Noir, no programmable privacy, and it wasn’t clear that demand for onchain privacy was even strong enough to necessitate a new blockchain network.

After a decade of building, revolutionary breakthroughs in privacy technology have set the stage for mainnet. These include PLONK, a novel proving system for user-level privacy and programmability that yielded zk.money and Aztec Connect, a pivotal moment for privacy and encryption solutions; Noir, an intuitive, Rust-like zero-knowledge programming language; and a client-side library for a private execution environment (PXE). These tools allow developers to explore privacy-preserving applications across any use case where protecting sensitive data is a critical function.

In 2023 and 2024, Aztec was named by Electric Capital as one of the fastest-growing developer ecosystems. The next generation of applications on Ethereum is already being built using parts of the Aztec stack, like Noir. Projects such as zkPassport and zkEmail are unlocking key identity use cases, while other applications like Anoncast (built in one weekend) have caught the attention of heavyweights like Vitalik Buterin and Laura Shin.

Earlier this month, we announced the successful testing of the first decentralized upgrade process for an L2, with over 100 sequencers participating. Now, with the mission to bring programmable privacy to the masses, the Aztec Public Testnet is here and, for the first time ever, open to developers to build fully private applications on Ethereum.

The Aztec Network will launch fully decentralized from day one.

Not because it’s a flex, but because true privacy can only be achieved when there is no central entity that has potential backdoor access.

Imagine logging into your hot wallet using web2 auth with Google or iCloud, or proving you’re a U.S. citizen onchain without revealing your passport information. For this, you need onchain privacy, and true privacy needs full decentralization so the user can maintain control over their data.

This is the vision for the Aztec Network.

As Zac, our CEO and Co-founder, said in his talk Privacy: The Missing Link, “there are three fundamental attributes required to bridge the gap and bring the world onchain: interfacing with web2 systems, linking accounts to identities, and establishing digital sovereignty.”

Launching a decentralized network is a complex task filled with lots of intricacies and nuances to navigate. The Aztec Public Testnet plays a crucial role in stress-testing the network, identifying early issues, and ensuring its participants work as intended – ultimately leading to a more robust mainnet.

There are two ways you can participate in the network: as a developer who wants to build and deploy applications (with end-to-end privacy) or as a node operator powering the network.

Aztec enables developers to build with both private and public state.

Smart contracts on Aztec blend private functions that execute on the client side with public functions that are executed by sequencers on the Aztec Network. This allows you to customize your contract with both public and private components while deploying them to a fully decentralized network.

The fastest way to get started with the Aztec Public Testnet is to deploy a smart contract using the Playground. If you’re a developer, visit our dev landing page to connect to Testnet and deploy on the Aztec Network.

The Aztec Network is run by a decentralized sequencer and prover network.

Sequencers propose and produce blocks using consumer hardware and are responsible for proposing and voting on network upgrades. Provers participate in a decentralized prover network and are selected to prove the rollup integrity.

No airdrops. No marketing gimmicks. We just want to create a community of highly skilled operators who share the vision of a fully decentralized privacy-preserving network. Anyone can boot up a sequencer node and access the testnet faucet. See the sequencer quickstart to get started. Apply to get a special Discord role and peer support from experienced node operators leading the Aztec Network.

To see existing applications and get inspo for what you want to build on the Aztec Public Testnet, check out our Ecosystem page. If you’ve already built an app and would like to be featured, submit your app here.

Next, head to the Playground to try out the Aztec Public Testnet, where you can deploy and interact with privacy-preserving smart contracts. Tools and infrastructure to start building wallets, bridges, and explorers are already available.

If you’re a developer, click ➡️ here to get started and deploy your smart contract in literal minutes.

If you’re a node operator, click ➡️ here to set up and run a node.

Stay up-to-date on Noir and Aztec by following Noir and Aztec on X.

Aztec will be a fully decentralized, permissionless and privacy-preserving L2 on Ethereum. The purpose of Aztec’s Public Testnet is to test all the decentralization mechanisms needed to launch a strong and decentralized mainnet. In this post, we’ll explore what full decentralization means, how the Aztec Foundation is testing each aspect in the Public Testnet, and the challenges and limitations of testing a decentralized network in a testnet environment.

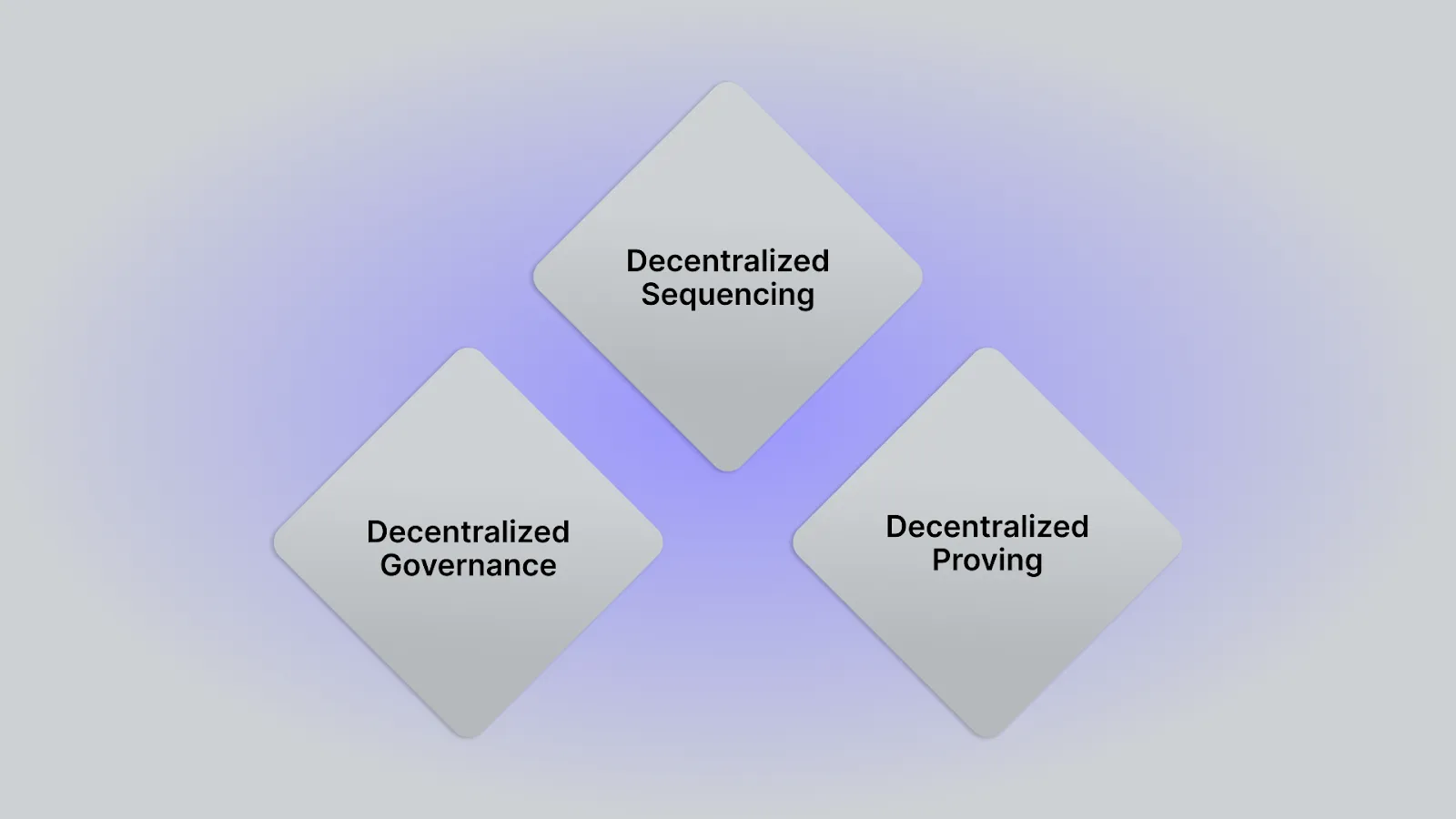

Three requirements must be met to achieve decentralization for any zero-knowledge L2 network:

Decentralization across sequencing, proving, and governance is essential to ensure that no single party can control or censor the network. Decentralized sequencing guarantees open participation in block production, while decentralized proving ensures that block validation remains trustless and resilient, and finally, decentralized governance empowers the community to guide network evolution without centralized control.

Together, these pillars secure the rollup’s autonomy and long-term trustworthiness. Let’s explore how Aztec’s Public Testnet is testing the implementation of each of these aspects.

Aztec will launch with a fully decentralized sequencer network.

This means that anyone can run a sequencer node and start sequencing transactions, proposing blocks to L1 and validating blocks built by other sequencers. The sequencer network is a proof-of-stake (PoS) network like Ethereum, but differs in an important way. Rather than broadcasting blocks to every sequencer, Aztec blocks are validated by a randomly chosen set of 48 sequencers. In order for a block to be added to the L2 chain, two-thirds of the sequencers need to verify the block. This offers users fast preconfirmations, meaning the Aztec Network can sequence transactions faster while utilizing Ethereum for final settlement security.
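The committee-and-threshold rule above can be sketched as follows. This is an illustrative model only: the function names are hypothetical, the seeded pseudo-random sampling stands in for the protocol's actual selection mechanism, and the threshold is read as "at least two-thirds" (the exact boundary rule is an assumption).

```python
import random

COMMITTEE_SIZE = 48  # from the text


def sample_committee(validators, seed):
    """Pick a committee of distinct sequencers from the full validator set."""
    rng = random.Random(seed)
    return rng.sample(validators, COMMITTEE_SIZE)


def quorum(committee_size=COMMITTEE_SIZE):
    """Smallest attestation count that is at least two-thirds of the committee."""
    return (2 * committee_size + 2) // 3  # integer ceiling of 2n/3


def block_accepted(committee, attesters):
    """A block is accepted once enough committee members have attested to it."""
    valid_attestations = set(committee) & set(attesters)
    return len(valid_attestations) >= quorum(len(committee))


if __name__ == "__main__":
    validators = list(range(1000))
    committee = sample_committee(validators, seed=42)
    print(quorum())                                  # 32
    print(block_accepted(committee, committee[:32]))  # True
    print(block_accepted(committee, committee[:31]))  # False
```

Because only 48 sequencers need to see and check each proposal, attestations can be collected quickly, which is what makes the fast preconfirmations described above possible.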

PoS is fundamentally an anti-sybil mechanism—it works by giving economic weight to participation and slashing malicious actors. At the time of Aztec’s mainnet, this will allow sequencers to vote out bad actors and burn their staked assets. On the Public Testnet, where there are no real economic incentives, PoS doesn't function properly. To address this, we introduced a queue system that limits how quickly new sequencers can join, helping to maintain network health and giving the network time to react to potential malicious behavior.

Behind the scenes, a contract handles sequencer onboarding—it mints staking assets, adds sequencers to the set, and can remove them if necessary. This contract is just for Public Testnet and will be removed on Mainnet, allowing us to simulate and test the decentralized sequencing mechanisms safely.

Aztec will also launch with a fully decentralized prover network.

Provers generate cryptographic proofs that verify the correctness of public transactions, culminating in a single rollup proof submitted to Ethereum. Decentralized proving reduces centralization risk and liveness failures, but also opens up a marketplace to incentivize fast and efficient proof generation. The proving client developed by Aztec Labs involves three components:

Once the final proof has been computed, the proving node sends the proof to L1 for verification. The Aztec Network splits proving rewards amongst everyone who submits a proof on time, reducing centralization risk where one entity with large compute dominates the network.
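The reward-sharing idea can be illustrated with a short sketch. It assumes an equal split among on-time provers, which the text does not specify; the function name, the deadline representation, and the example figures are hypothetical.

```python
def split_prover_rewards(epoch_reward, submission_times, deadline):
    """Share an epoch's proving reward among every prover who submitted by the deadline.

    submission_times maps prover id -> submission time (same units as deadline).
    Assumption: on-time provers receive equal shares.
    """
    on_time = [p for p, t in submission_times.items() if t <= deadline]
    if not on_time:
        return {}
    share = epoch_reward / len(on_time)
    return {p: share for p in on_time}


if __name__ == "__main__":
    rewards = split_prover_rewards(
        epoch_reward=90,
        submission_times={"fast-farm": 10, "home-rig": 55, "late": 130, "mid": 60},
        deadline=60,
    )
    print(rewards)  # {'fast-farm': 30.0, 'home-rig': 30.0, 'mid': 30.0}
```

Under this model the fastest prover earns no more than any other on-time prover, which is the property that weakens the advantage of a single operator with very large compute.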

For Aztec’s Public Testnet, anyone can spin up a prover node and start generating proofs. Running a prover node is more hardware-intensive than running a sequencer node, requiring ~40 machines with an estimated 16 cores and 128 GB RAM each. Because running provers is cost-intensive and incurs the same costs on a testnet as it will on mainnet, Aztec’s Public Testnet will throttle throughput to 0.2 transactions per second (TPS).

Keeping transaction volumes low allows us to test a fully decentralized prover network without overwhelming participating provers with high costs before real network incentives are in place.

Finally, Aztec will launch with fully decentralized governance.

In order for network upgrades to occur, anyone can put forward a proposal for sequencers to consider. If a majority of sequencers signal their support, the proposal gets sent to a vote. Once it passes the vote, anyone can execute the script that will implement the upgrade. Note: For this testnet, the second phase of voting will be skipped.
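The proposal lifecycle above (signal, vote, execute) can be modeled as a small state machine. This is a conceptual sketch, not the Governance contract: the class and stage names are hypothetical, "majority" is taken to mean more than half of all sequencers, and the vote is assumed to pass on a simple yes-over-no count.

```python
from enum import Enum


class Stage(Enum):
    SIGNALING = "signaling"
    VOTING = "voting"
    EXECUTABLE = "executable"
    EXECUTED = "executed"
    REJECTED = "rejected"


class Proposal:
    def __init__(self, total_sequencers, skip_vote=False):
        self.total_sequencers = total_sequencers
        self.skip_vote = skip_vote  # models the testnet note: the vote phase is skipped
        self.signals = set()
        self.stage = Stage.SIGNALING

    def signal(self, sequencer_id):
        """A sequencer signals support; a majority moves the proposal forward."""
        if self.stage is not Stage.SIGNALING:
            raise ValueError("signaling is closed")
        self.signals.add(sequencer_id)
        if 2 * len(self.signals) > self.total_sequencers:
            self.stage = Stage.EXECUTABLE if self.skip_vote else Stage.VOTING

    def tally(self, yes, no):
        """Close the vote. Assumption: the proposal passes if yes outweighs no."""
        if self.stage is not Stage.VOTING:
            raise ValueError("not in voting stage")
        self.stage = Stage.EXECUTABLE if yes > no else Stage.REJECTED

    def execute(self):
        """Anyone may trigger execution once the proposal has passed."""
        if self.stage is not Stage.EXECUTABLE:
            raise ValueError("proposal has not passed")
        self.stage = Stage.EXECUTED
```

Note that `execute` takes no caller argument: once a proposal has passed, the model places no restriction on who triggers the upgrade, matching the "anyone can execute" step described above.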

Decentralized governance is an important step in enabling anyone to participate in shaping the future of the network. The goal of the public testnet is to ensure the mechanisms are functioning properly for sequencers to permissionlessly join and control the Aztec Network from day 1.

One additional aspect to consider with regard to full decentralization is the role of network users in decentralizing the compute load of the network.

Aztec Labs has developed groundbreaking technology to make end-to-end programmable privacy possible. This began with the release of Plonk and continued with later refinements like MegaHonk, which make it feasible to generate client-side ZKPs. Client-side proofs keep sensitive data on the user’s device while still enabling users to interact with and store this information privately onchain. They also help to scale throughput by pushing execution to users. This decentralizes the compute requirements and means users can execute arbitrary logic in their private functions.

Sequencers and provers on the Aztec Network are never able to see any information that users or applications want to keep private, including accounts, activity, balances, function execution, or other data of any kind.

Aztec’s Public Testnet is shipping with a full execution environment, including the ability to create client-side proofs in the browser. Here are some time estimations to expect for generating private, client-side proofs:

Aztec’s Public Testnet is designed to rigorously test decentralization across sequencing, proving, and governance ahead of our mainnet launch. The network design ensures no single entity can control or censor activity, empowering anyone to participate in sequencing transactions, generating proofs, and proposing governance changes.

Visit the Aztec Testnet page to start building with programmable privacy and join our community on Discord.

When Aztec mainnet launches, it will be the first fully private and decentralized L2 on Ethereum. Getting here was a long road: when Aztec started eight years ago, the initial plan was to build an onchain financial service called CreditMint for issuing corporate debt to mid-market enterprises – obviously a distant use case from how we understand Aztec today. When co-founders Zac Williamson, Joe Andrews, Tom Pocock, and Arnaud Schenk got started, the world of zero-knowledge proving systems and applications wasn’t even in its infancy: there was no PLONK, no Noir, no programmable privacy, and it wasn’t clear that demand for onchain privacy was even strong enough to necessitate a new blockchain network. The founders’ initial explorations through CreditMint led to what we know as Aztec today.

While putting corporate debt onchain might seem unglamorous (or just limited compared with how we now understand Aztec’s capabilities), it was useful, wildly popular, and necessary for the founding team to realize that no serious institution wanted to touch the blockchain without the same privacy assurances that they were accustomed to in the corporate world. Traditional finance is built around trusted intermediaries and middlemen, which of course introduces friction and bottlenecks progress – but offers more privacy assurances than what you see on public blockchains like Ethereum.

This takeaway led to a bigger understanding: the number of people (not just the number of institutions) who wanted to use the blockchain was limited by a lack of programmable privacy. Aztec was born out of the recognition that everyone – not only corporations – could use permissionless, onchain systems for private transactions, and this could become the default for all online payments. In the words of the CEO, Zac Williamson:

“If you had programmable digital money that had privacy guarantees around it, you could use that to create extremely fast permissionless payment channels for payments on the internet.”

Equipped with this understanding, Zac and Joe began to specialize. Zac, whose background is in particle physics, went deep on cryptography research and began exploring protocols that could be used to enable onchain privacy. Meanwhile, Joe worked on how to get user adoption for privacy tech, while Arnaud focused on getting the initial CreditMint platform live and recruiting early members of the team. In 2018, Aztec published a proof-of-concept transaction demonstrating the creation and transfer of private assets on Ethereum – using an early cryptographic protocol that predated modern proving schemes like PLONK. It was a limited example, with just DAI as the test-case (and it could only facilitate private assets, not private identities), but it garnered a lot of early interest from members of the Ethereum community.

The 2018 version of the Aztec Protocol had three key limitations: it wasn’t programmable, it only supported private data (rather than private data and user-level privacy), and it was expensive, from both a computation and gas perspective. The underlying proving scheme was, in the words of Zac, a “Frankenstein cryptography protocol using older primitives than zk-SNARKs.” These limitations motivated the development of PLONK in 2019, a SNARK-based proving system that is computationally inexpensive, and only requires one universal trusted setup.

A single universal trusted setup is desirable because it allows developers to utilize a common reference string for all of the programs they might want to instantiate in a circuit; the alternative is a much more cumbersome process of conducting a trusted setup ceremony for each cryptographic circuit. In other words, PLONK enabled programmable privacy for future versions of Aztec.

PLONK was a big breakthrough, not just for Aztec, but for the wider blockchain community. Today, PLONK has been implemented and extended by teams like zkSync, Polygon, Mina, and more. There is even an entire category of proving systems called PLONKish that all derive from the original 2019 paper. For Aztec specifically, PLONK was also instrumental in paving the way for zk.money and Aztec Connect, a private payment network and private DeFi rollup, which launched in 2021 and 2022 respectively.

The product needs of Aztec motivated the development of a modern-day proving system. PLONK proofs are computationally cheap to generate, leading not only to lower transaction costs and programmability for developers, but big steps forward for privacy and decentralization. PLONK made it simpler to generate client-side proofs on inexpensive hardware. In the words of Joe, “PLONK [was] developed to keep the middleman away.”

Between 2021 and 2023, the Aztec team operated zk.money and Aztec Connect. The products were not only vital in illustrating that there was a demand for onchain privacy solutions, but in demonstrating that it was possible to build performant and private networks leveraging PLONK. Joe remarked that they “wanted to test that we could build a viable payments network, where the user experience was on par with a public transaction. Privacy needed to be in the background.”

Aztec’s early products indicated that there was significant demand for private onchain payments and DeFi – at peak, the rollups had over $20 million in TVL. Both products fit into the vision Zac had to “make the blockchain real.” In his team’s eyes, blockchains are held back from mainstream adoption because you can’t bring consequential, real-world assets onchain without privacy.

Despite the demand for these networks, the team made the decision to sunset both zk.money and Aztec Connect after recognizing that they could not fully decentralize the networks without massive architectural changes. Zac and Joe don’t believe in “Progressive Decentralization” – the network needs to have no centralized operators from day one. And it wasn’t just the sequencer of these early Aztec products that was centralized – the team also recognized that it would have been impossible for other developers to write programs on Aztec that could compose with each other, because all programs operated on shared state. In 2023, zk.money and Aztec Connect were officially shut down.

In tandem, the team also began developing Noir (an original brainchild of Kevaundray Wedderburn). Noir is a Rust-like programming language for writing zero-knowledge circuits that makes privacy technology accessible to mainstream developers. While Noir began as a way to make it easier for developers to write private programs without needing to know cryptography, the team soon realized that the demand for privacy didn’t just apply to applications on the Aztec stack, and that Noir could be a general-purpose DSL for any kind of application that needs to leverage privacy. In the same way that bringing consequential assets and activity onchain “makes the blockchain real,” bringing zero-knowledge technology to any application – onchain or offchain – makes privacy real. The team continued working on Noir, and it has developed into its own product stack today.

Aztec from 2017 to 2024 can be seen as a methodical journey toward building a fully private, programmable, and decentralized blockchain network. The earliest attempt at Aztec as a protocol introduced asset-level privacy, without addressing user-level privacy, or significant programmability. PLONK paved the way for user-level privacy and programmability, which yielded zk.money and Aztec Connect. Noir extended programmability even further, making it easy for developers to build applications in zero-knowledge. But zk.money and Aztec Connect were incomplete without a viable path to decentralization. So, the team decided to build a new network from scratch. Extending on their learnings from past networks, the foundations and findings from continuous R&D efforts of PLONK, and the growing developer community around Noir, they set the stage for Aztec mainnet.

The fact of the matter is that creating a network that is fully private and decentralized is hard. To have privacy, all data must be shielded cheaply inside of a SNARK. If you want to really embrace the idea of “making the blockchain real” then you should also be able to leverage outside authentication and identity solutions, like Apple ID – and you need to be able to put those technologies inside of a SNARK as well. The number of statements that need to be represented as provable circuits is massive. Then, all of these capabilities need to run inside of a network that is decentralized. The combination of mathematical, technological, and networking problems makes this very difficult to achieve.

The technical architecture of Aztec reflects the learnings of the Aztec team. Zac describes Aztec mainnet as a “Russian nesting doll” of products that all add up to a private and decentralized network. Aztec today consists of:

At the network level, there will be many participants in the decentralization efforts of Aztec: provers, sequencers, and node operators. Joe views the infrastructure-level decentralization as a crucial first stage of Aztec’s mainnet launch.

As Aztec goes live, the vision extends beyond private transactions to enabling entirely new categories of applications. The team envisions use cases ranging from consumer lending based on private credit scores to games leveraging information asymmetry, to social applications that preserve user privacy. The next phase will focus on building a robust ecosystem of developers and the next generation of applications on Ethereum using Noir, the universal language of privacy.

Aztec mainnet marks the emergence of applications that weren't possible before – applications that combine the transparency and programmability of blockchain with the privacy necessary for real-world adoption.

Many thanks to Remi Gai, Hannes Huitula, Giacomo Corrias, Avishay Yanai, Santiago Palladino, ais, ji xueqian, Brecht Devos, Maciej Kalka, Chris Bender, Alex, Lukas Helminger, Dominik Schmid, 0xCrayon, Zac Williamson for inputs, discussions, and reviews.

Prerequisites:

Buzzwords are dangerous. They amuse and fascinate as cutting-edge, innovative, mesmerizing markers of new ideas and emerging mindsets. Even better if they are abbreviations, insider shorthand we can use to make ourselves look smarter and more progressive:

Using buzzwords can obfuscate the real scope and technical possibilities of a technology. Furthermore, buzzwords can act as gatekeepers, making simple things look complex or, on the contrary, making complex things look simple (as with the Dunning-Kruger effect).

In this article, we will briefly review several suggested privacy-related abbreviations, their strong points, and their constraints. And after that, we’ll think about whether someone will benefit from combining them or not. We’ll look at different configurations and combinations.

Disclaimer: It’s not fair to compare the technologies we’re discussing since it won’t be an apples-to-apples comparison. The goal is to briefly describe each of them, highlighting their strong and weak points. Understanding this, we will be able to make some suggestions about combining these technologies in a meaningful way.

POV: a new dev enters the space.

Client-side ZKP is a specific category of zero-knowledge proofs (started in 1989). The exploration of general ZKPs in great depth is out-of-scope for this piece. If you're curious to learn about it, check this article.

Essentially, a zero-knowledge protocol allows one party (the prover) to prove to another party (the verifier) that some given statement is true, while avoiding conveying any information beyond the mere fact of that statement's truth.

Client-side ZKPs enable generation of the proof on a user's device for the sake of privacy. A user performs some arbitrary computation and generates a proof that whatever they computed was computed correctly. Then, this proof can be verified and utilized by external parties.
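To make the prover/verifier split concrete, here is a minimal sketch of a classic zero-knowledge proof: a Schnorr proof of knowledge of a secret exponent, made non-interactive with the Fiat-Shamir heuristic. It is not the proof system any of the projects mentioned here use (those rely on general-purpose SNARKs); the group parameters are toy-sized, and the names `prove`, `verify`, and `challenge` are chosen purely for illustration.

```python
import hashlib
import secrets

# Toy group parameters (far too small for real use): P = 2*Q + 1 with P and Q
# prime, and G generates the subgroup of order Q.
P, Q, G = 2039, 1019, 4


def challenge(y: int, t: int) -> int:
    """Fiat-Shamir challenge: hash the public values into a number mod Q."""
    digest = hashlib.sha256(f"{G}|{y}|{t}".encode()).digest()
    return int.from_bytes(digest, "big") % Q


def prove(x: int) -> tuple[int, tuple[int, int]]:
    """Runs on the user's device: prove knowledge of x with y = G^x mod P."""
    y = pow(G, x, P)                   # public statement
    k = secrets.randbelow(Q - 1) + 1   # fresh secret nonce
    t = pow(G, k, P)                   # commitment
    s = (k + challenge(y, t) * x) % Q  # response; x itself never leaves
    return y, (t, s)


def verify(y: int, proof: tuple[int, int]) -> bool:
    """Runs anywhere: checks the proof using public values only."""
    t, s = proof
    return pow(G, s, P) == (t * pow(y, challenge(y, t), P)) % P
```

The verifier only ever sees `y`, `t`, and `s`, never the secret `x`, which is the property a client-side proving setup relies on: the sensitive input stays on the device and only the proof travels.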

One of the most widely known use cases of client-side ZKPs is a privacy-preserving L2 on Ethereum where, thanks to client-side data processing, some functions and values in a smart contract can be executed privately, while the rest are executed publicly. In this case, the client-side ZKP is generated by the user executing the transaction, then verified by the network sequencer.

However, client-side proof generation is not limited to Ethereum L2s, nor to blockchain at all. Whenever there are two or more parties who want to compute something privately and then verify each other’s computation and utilize their results for some public protocols, client-side ZKPs will be a good fit.

Check this article for more details on how client-side ZKPs work.

The main concern today about on-chain privacy by means of client-side proof generation is the lack of a private shared state. Potentially, it can be mitigated with an MPC committee (which we will cover in later sections).

Speaking of limitations of client-side proving, one should consider:

What can we do with client-side ZKPs today:

Whom to follow for client-side ZKPs updates: Aztec Labs, Miden, Aleo.

Disclaimer: in this section, we discuss general-purpose MPC (i.e. allowing computations on arbitrary functions). There are also a bunch of specialized MPC protocols optimized for various use cases (i.e. designing customized functions) but those are out-of-scope for this article.

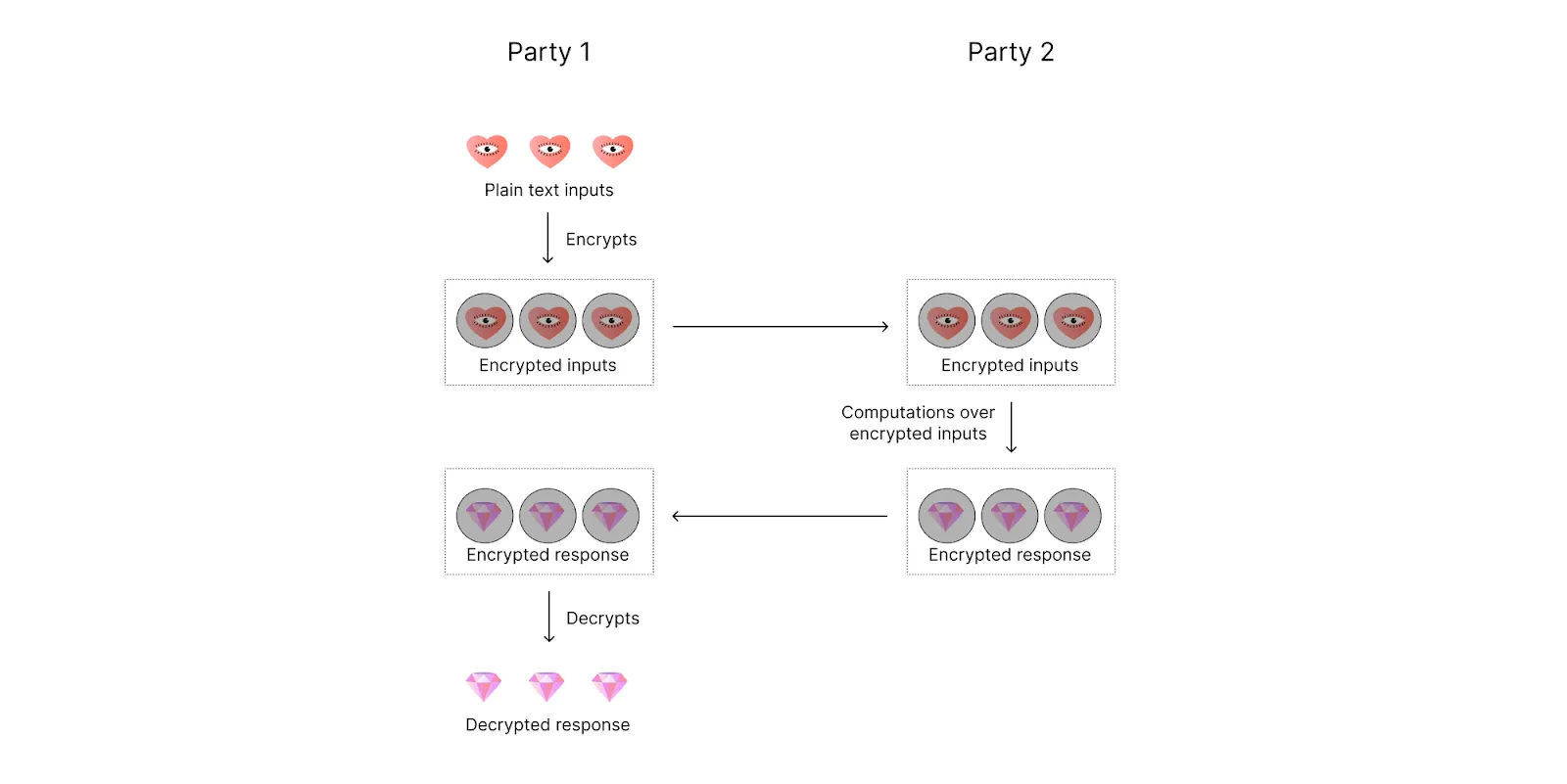

MPC enables a set of parties to interact and compute a joint function of their private inputs while revealing nothing but the output: f(input_1, input_2, …, input_n) → output.

For example, parties can be servers that hold a distributed database system and the function can be the database update. Or parties can be several people jointly managing a private key from an Ethereum account and the function can be a transaction signing mechanism.
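As a rough illustration of the f(input_1, …, input_n) → output shape, the sketch below simulates one of the simplest MPC building blocks, additive secret sharing, to compute a joint sum. It assumes all parties follow the protocol honestly and runs everything in a single process, so it only shows the data flow, not the networking or the protection against malicious parties discussed next. The names `share`, `mpc_sum`, and `MODULUS` are illustrative.

```python
import secrets

MODULUS = 2**61 - 1  # public modulus; all arithmetic is done modulo this value


def share(secret: int, n_parties: int) -> list[int]:
    """Split a secret into n additive shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % MODULUS)
    return shares


def mpc_sum(private_inputs: list[int]) -> int:
    """Simulate n parties jointly computing the sum of their private inputs."""
    n = len(private_inputs)
    # Step 1: party i splits its input and sends share j to party j.
    dealt = [share(x, n) for x in private_inputs]
    # Step 2: party j adds up the shares it received (one from each party).
    partial_sums = [sum(dealt[i][j] for i in range(n)) % MODULUS for j in range(n)]
    # Step 3: parties publish their partial sums; only the total is revealed.
    return sum(partial_sums) % MODULUS
```

Each individual share is a uniformly random number, so a party looking at the shares it receives learns nothing about the other inputs; only the combination of all partial sums reveals the output.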

One issue of concern with MPCs is that one or more parties participating in the protocol can be malicious. They can try to:

Hence in the context of MPC security, one wants to ensure that:

To think about MPC security in an exhaustive way, we should consider three perspectives:

Rather than requiring all parties in the computation to remain honest, MPC tolerates different levels of corruption depending on the underlying assumptions. Some models remain secure if fewer than 1/3 of parties are corrupt, some if fewer than 1/2 are corrupt, and some retain security guarantees even when more than half of the parties are corrupt. For details, formal definitions, and proofs of MPC protocol security, check this paper.

There are three main corruption strategies:

Each of these strategies corresponds to a different security model.

Two definitions of malicious behavior are:

When it comes to the definition of privacy, MPC guarantees that the computation process itself doesn’t reveal any information. However, it doesn’t guarantee that the output won’t reveal any information. For an extreme example, consider two people computing the average of their salaries. While it’s true that nothing but the average will be output, when each participant knows their own salary amount and the average of both salaries, they can derive the exact salary of the other person.
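The salary example can be written out in a few lines. This is plain arithmetic rather than an MPC protocol: it only shows that the output alone is enough to reconstruct the other party's input, however private the computation itself was. The function names and salary figures are made up for illustration.

```python
def average(salaries: list[float]) -> float:
    """The only value the two parties agree to reveal."""
    return sum(salaries) / len(salaries)


def infer_other_salary(my_salary: float, avg: float) -> float:
    """With two parties, avg = (mine + theirs) / 2, so theirs = 2 * avg - mine."""
    return 2 * avg - my_salary


alice, bob = 70_000, 90_000
avg = average([alice, bob])

# Alice recovers Bob's exact salary from the output plus her own input.
assert infer_other_salary(alice, avg) == bob
```

With more participants the leak weakens (each party only learns the sum of everyone else's salaries), which is why the choice of function and the number of parties are part of the problem statement.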

That is to say, while the core “value proposition” of MPC seems to be very attractive for a wide range of real world use cases, a whole bunch of nuances should be taken into account before it will actually provide a high enough security level. (It's important to clarify the problem statement and decide whether it is the right tool for this particular task.)

What can be done with MPC protocols today:

When we think about MPC performance, we should consider the following parameters: number of participating parties, witness size of each party, and function complexity.

When it comes to using MPC in a blockchain context, it’s important to consider message complexity, computational complexity, and properties such as public verifiability and abort identifiability (i.e. if a malicious party causes the protocol to halt prematurely, they can be detected). For message distribution, the protocol relies either on P2P channels between every pair of parties (which requires a lot of bandwidth) or on broadcasting. Another concern arises from the permissionless nature of blockchains, since MPC protocols often operate over permissioned sets of nodes.

Taking all of that into account, it’s clear that MPC is a very nuanced technology on its own. And it becomes even more nuanced when combined with other technologies. Adding MPC to a specific blockchain protocol often requires designing a custom MPC protocol that will fit. And that design process often requires a room full of MPC PhDs who can not only design the protocol but also prove its security.

Whom to follow for MPC updates: dWallet Labs, TACEO, Fireblocks, Cursive, PSE, Fairblock, Soda Labs, Silence Laboratories, Nillion.

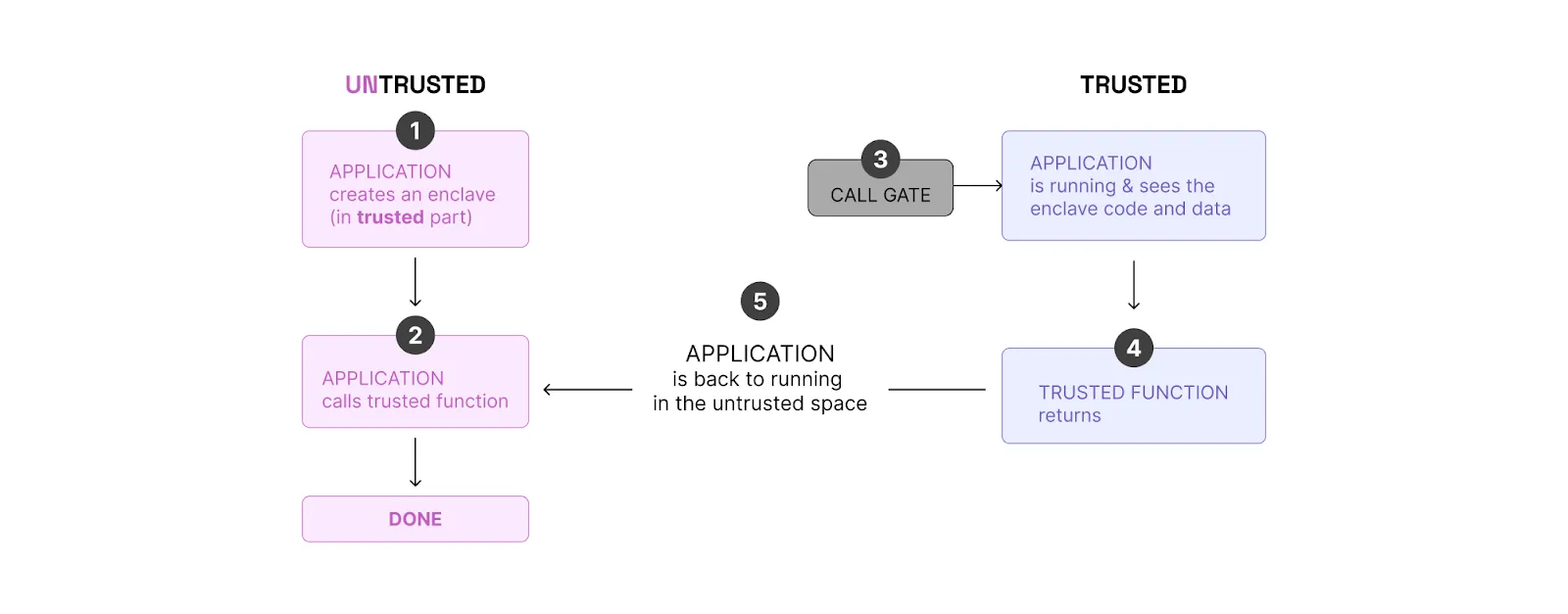

TEE stands for Trusted Execution Environment. A TEE is an area on the main processor of a device that is separated from the system's main operating system (OS). It ensures data is stored, processed, and protected in a separate environment. One of the most widely known TEE implementations (and one we often mention when discussing blockchain) is Software Guard Extensions (SGX) made by Intel.

SGX can be considered a type of private execution. For example, if a smart contract is run inside SGX, it’s executed privately.

SGX creates a non-addressable memory region of code and data (separated from RAM), and encrypts both at a hardware level.

How SGX works:

It’s worth noting that there is a key pair: a secret key and a public key. The secret key is generated inside of the enclave and never leaves it. The public key is available to anyone: Users can encrypt a message using a public key so only the enclave can decrypt it.

An SGX feature often utilized in the blockchain context is attestations. Attestation is the process of demonstrating that a software executable has been properly instantiated on a platform. Remote Attestation allows a remote party to be confident that the intended software is securely running within an enclave on a fully patched, Intel SGX-enabled platform.

Core SGX concerns:

Speaking of SGX cost, proof generation can be considered free of charge. However, if one wants to use remote attestations, the initial one-time cost (once per SGX prover) is on the order of 1M gas (to make sure the code in SGX is running in the expected way).

The onchain verification cost equals that of verifying an ECDSA signature (~5k gas, whereas ZK verification costs ~300k gas).

When it comes to execution time, there is effectively no overhead. For example, for proving a zk-rollup block, it will be around 100ms.

Where SGX is utilized in blockchain today:

Whom to follow for TEE updates: Secret Network, Flashbots, Andrew Miller, Oasis, Phala, Marlin, Automata, TEN.

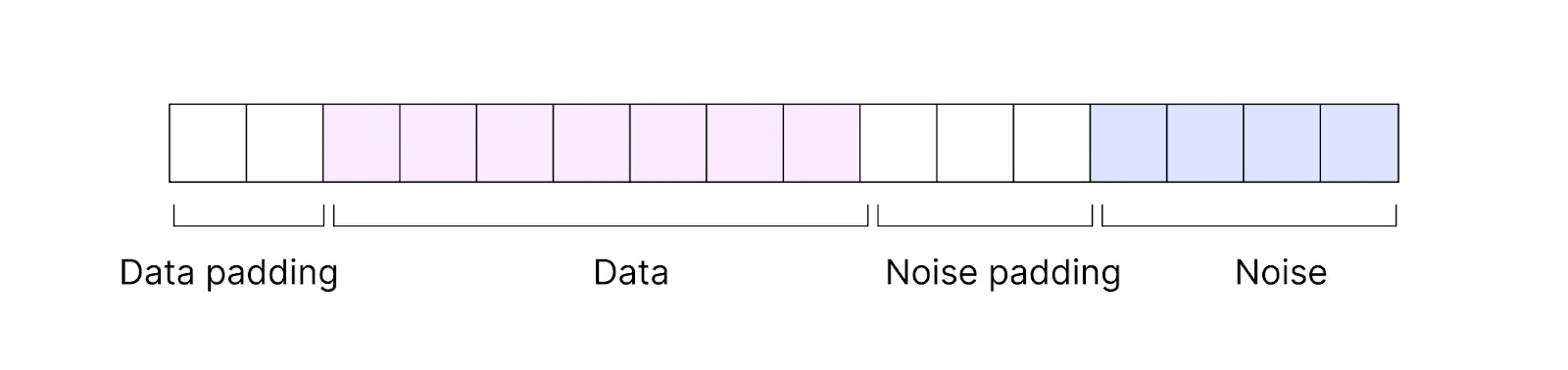

FHE enables encrypted data processing (i.e. computation on encrypted data).

The idea of FHE was proposed in 1978 by Rivest, Adleman, and Dertouzos. “Fully” means that both addition and multiplication can be performed on encrypted data. Let m be some plain text and E(m) be an encrypted text (ciphertext). Then additive homomorphism is E(m_1 + m_2) = E(m_1) + E(m_2) and multiplicative homomorphism is E(m_1 * m_2) = E(m_1) * E(m_2).
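The two homomorphic properties can be seen separately in two classic, partially homomorphic schemes. The sketch below uses a toy Paillier cryptosystem for the additive case and textbook RSA for the multiplicative case, with tiny hard-coded primes. Neither scheme is FHE, since each supports only one operation, and the parameters are illustrative and insecure. Note that in Paillier the "+" on ciphertexts is realized as a modular multiplication of ciphertexts.

```python
import math
import secrets

# Toy parameters (insecure): two small primes shared by both examples.
P_, Q_ = 61, 53
N = P_ * Q_

# --- Textbook RSA: multiplicatively homomorphic ---
E, D = 17, 2753  # E * D = 1 mod (P_ - 1) * (Q_ - 1)


def rsa_enc(m: int) -> int:
    return pow(m, E, N)


def rsa_dec(c: int) -> int:
    return pow(c, D, N)


# --- Paillier with generator N + 1: additively homomorphic ---
N2 = N * N
LAM = math.lcm(P_ - 1, Q_ - 1)
MU = pow(LAM, -1, N)  # modular inverse (Python 3.8+)


def paillier_enc(m: int) -> int:
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, m, N2) * pow(r, N, N2)) % N2


def paillier_dec(c: int) -> int:
    return ((pow(c, LAM, N2) - 1) // N * MU) % N


# Multiplying RSA ciphertexts multiplies the plaintexts.
assert rsa_dec(rsa_enc(6) * rsa_enc(7) % N) == 42
# Multiplying Paillier ciphertexts adds the plaintexts.
assert paillier_dec(paillier_enc(20) * paillier_enc(22) % N2) == 42
```

A fully homomorphic scheme has to provide both operations on the same ciphertexts, which is what took until 2009 to achieve.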

Additive Homomorphic Encryption was used for a while, but Multiplicative Homomorphic Encryption was still an issue. In 2009, Craig Gentry came up with the idea to use ideal lattices to tackle this problem. That made it possible to do both addition and multiplication, although it also made growing noise an issue.

How FHE works:

Plain text is encoded into ciphertext. Ciphertext consists of encrypted data and some noise.

That means when computations are done on ciphertext, they are done not purely on data but on data together with added noise. With each performed operation, the noise increases. After several operations, it starts overflowing on the bits of actual data, which might lead to incorrect results.
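Noise growth is easy to observe in a toy integer-based scheme in the style of DGHV (van Dijk, Gentry, Halevi, Vaikuntanathan). In this sketch a bit is hidden as `bit + 2*r + p*k`, where `p` is the secret key and `2*r` is the noise; multiplying ciphertexts multiplies their noise. The key size, the explicit `r` and `k` arguments (random in a real scheme, fixed here for reproducibility), and the function names are all assumptions for the sake of illustration.

```python
SECRET_KEY = 10007  # odd secret integer p (toy size, insecure)


def encrypt(bit: int, r: int, k: int) -> int:
    """c = bit + 2*r + p*k: the bit sits under noise 2*r and a multiple of p."""
    return bit + 2 * r + SECRET_KEY * k


def decrypt(c: int) -> int:
    """Strip the multiple of p, then read the bit from the parity of the rest."""
    return (c % SECRET_KEY) % 2


c = encrypt(1, r=10, k=12345)  # fresh ciphertext of 1; bit + noise = 21

# Multiply c by itself repeatedly. The true plaintext stays 1 * 1 * ... = 1,
# but bit + noise grows as 21, 441, 9261, 194481, ...
results = []
product = c
for n_factors in range(1, 5):
    results.append((n_factors, decrypt(product)))
    product *= c

# The first three products decrypt correctly; the fourth has noise larger
# than the key, wraps around modulo p, and decrypts to the wrong bit.
assert results == [(1, 1), (2, 1), (3, 1), (4, 0)]
```

Once the noise term exceeds the secret key, the reduction modulo `p` no longer recovers it, which is the integer analogue of noise "overflowing on the bits of actual data".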

A number of tricks were proposed later on to handle the noise and make FHE work more reliably. One of the most well-known tricks is bootstrapping, a special operation that resets the noise to its nominal level. However, bootstrapping is slow and costly (both in terms of memory consumption and computational cost).

Researchers rolled out even more workarounds to make bootstrapping efficient and took FHE several more steps forward. Further details are out-of-scope for this article, but if you’re interested in FHE history, check out this talk by mathematician Zvika Brakerski.

Core FHE concerns:

Compared to computations on plain text, the best per-operation overhead available today is polylogarithmic [GHS12b]: if n is the input size, the overhead is O(log^k(n)) for some constant k. The communication overhead is reasonable when a number of ciphertexts are batched and unbatched together, but not otherwise.

Evaluation keys are huge, larger even than ciphertexts, which are themselves large. The evaluation key size is around 160,000,000 bits (about 20 MB). Furthermore, one needs to constantly compute on these keys. Whenever homomorphic evaluation is done, you’ll need to access the evaluation key, bring it into the CPU (a regular data bus in a regular processor will be unable to bring it), and make computations on it.

If you want to do something beyond addition and multiplication—a branch operation, for example—you have to break down this operation into a sequence of additions and multiplications. That’s pretty expensive. Imagine you have an encrypted database and an encrypted data chunk, and you want to insert this chunk into a specific position in the database. If you’re representing this operation as a circuit, the circuit will be as large as the whole database.
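The shape of such a circuit can be sketched on plain integers. Below, a branch is rewritten as arithmetic (`cond * a + (1 - cond) * b`), and a write at a hidden position is expressed by applying that selection to every slot, with the position given as a one-hot vector of bits. In an actual FHE setting the bits, the chunk, and the database entries would all be ciphertexts; here they are ordinary numbers, and the function names are illustrative.

```python
def select(cond: int, a: int, b: int) -> int:
    """Branch-free 'a if cond else b' for cond in {0, 1}, using only + and *."""
    return cond * a + (1 - cond) * b


def oblivious_write(db: list[int], one_hot_pos: list[int], chunk: int) -> list[int]:
    """Write chunk at the slot flagged in one_hot_pos.

    Every slot is processed, because the evaluator cannot know which flag is
    set, so the work grows linearly with the size of the database.
    """
    return [select(flag, chunk, old) for flag, old in zip(one_hot_pos, db)]


db = [5, 6, 7, 8]
position = [0, 0, 1, 0]  # one-hot encoding of index 2
assert oblivious_write(db, position, 99) == [5, 6, 99, 8]
```

Because the evaluator must not learn which slot changed, it cannot skip any of them, which is why the circuit ends up as large as the database.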

In the future, FHE performance is expected to improve both on the FHE side (new tricks discovered) and on the hardware side (acceleration and ASIC design). This promises to allow for more complex smart contract logic as well as more computation-intensive use cases such as AI/ML. A number of companies are working on designing and building FHE-specific FPGAs (e.g. Belfort).

“Misuse of FHE can lead to security faults.”

What can be done with FHE today:

Note: In all of these examples, we are talking about plain FHE, without any MPC or ZK superstructures handling the core FHE issues.

Whom to follow for FHE updates: Zama, Sunscreen, Zvika Brakerski, Inco, FHE Onchain.

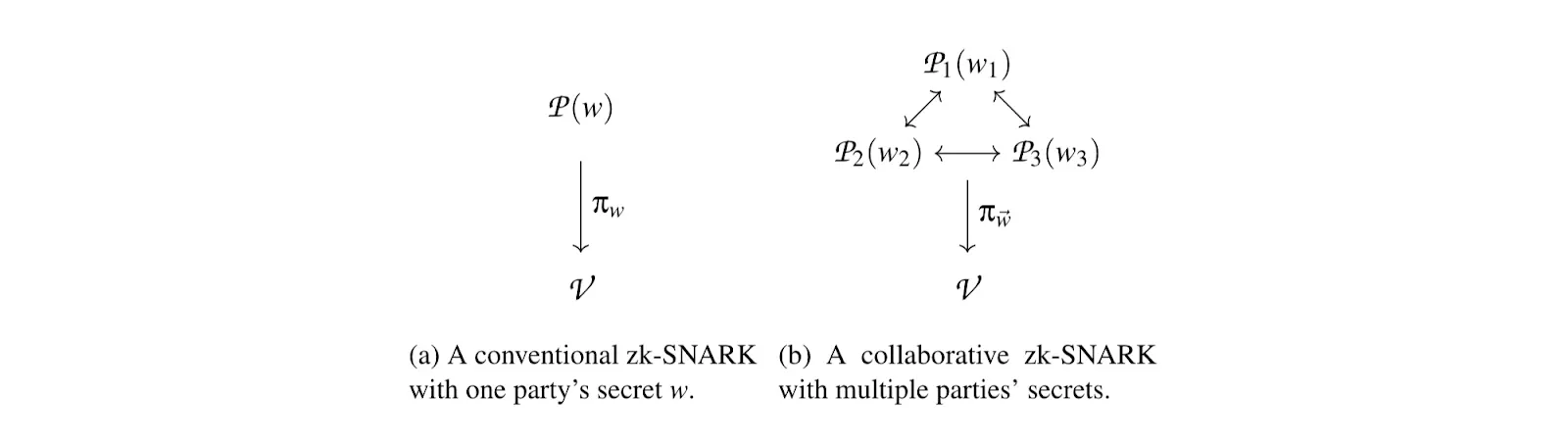

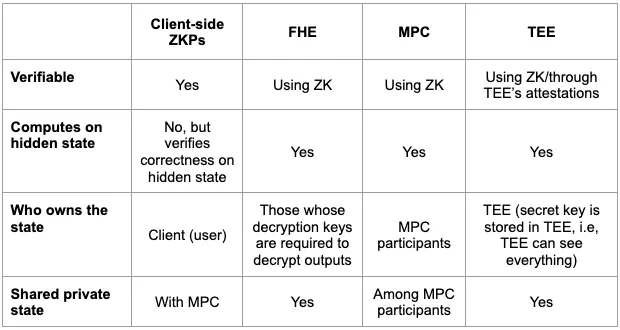

As we can see from the technology overview, these technologies are not exactly interchangeable. That said, they can complement each other. Now let’s think. Which ones should be combined, and for what reason?